- How to count how many files are in a folder or directory

- Counting files in Windows 8 and Windows 10

- Counting files in Microsoft Windows Vista and 7

- Counting files in Microsoft Windows XP

- Counting files in Microsoft command line (DOS)

- Counting files in Linux

- Command to list all files in a folder as well as sub-folders in Windows

- What is better for performance — many files in one directory, or many subdirectories each with one file?

- Iterate all files in a directory using a ‘for’ loop

- How many files can I put in a directory?

How to count how many files are in a folder or directory

Counting files in Windows 8 and Windows 10

- Open Windows Explorer.

- Browse to the folder containing the files you want to count. As shown in the picture below, in the right details pane, Windows displays how many items (files and folders) are in the current directory. If this pane is not shown, click View and then Details pane.

If hidden files are not shown, these files are not counted.

If any file or folder is highlighted, only the selected items are counted.

Use the search box in the top-right corner of the window to search for a specific type of file. For example, entering *.jpg would display only JPEG image files in the current directory and show you the count of files in the bottom corner of the window.

If you need to use wildcards or count a more specific type of file, use the steps below for counting files in the Windows command line.

Counting files in Microsoft Windows Vista and 7

- Open Windows Explorer.

- Navigate to the folder containing the files you want to count. In the bottom left portion of the window, it displays how many items (files and folders) are in the current directory.

If hidden files are not shown, these files are not counted.

If any file or folder is highlighted, only the selected items are counted.

Use the search box in the top-right corner of the window to search for a specific type of file. For example, entering *.jpg would display only JPEG image files in the current directory and show you the count of files in the bottom corner of the window.

If you need to use wildcards or count a more specific type of file, use the steps below for counting files in the Windows command line.

Counting files in Microsoft Windows XP

- Open Windows Explorer.

- Browse to the folder containing the files you want to count.

- Highlight one of the files in that folder and press the keyboard shortcut Ctrl + A to highlight all files and folders in that folder. In the Explorer status bar, you’ll see how many files and folders are highlighted, as shown in the picture below.

If hidden files are not shown, these files are not selected.

You can also count only a specific type of file, such as image files. Click the Type column header to sort the files by type, and then highlight the first file of the type you want to count. Once the first file is highlighted, hold down Shift and, while continuing to hold it down, press the down arrow to select files one at a time. For lots of files, you can also hold down Shift and press Page Down to highlight files a page at a time.

If you need to use wildcards or count a more specific type of file, use the steps below for counting files in the Windows command line.

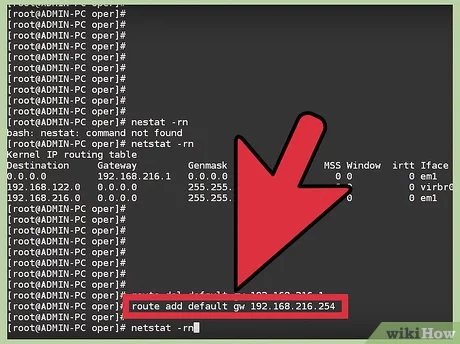

Counting files in Microsoft command line (DOS)

- Open the Windows command line.

- Move to the directory containing the files you want to count and use the dir command to list all files and directories in that directory. If you’re not familiar with how to navigate and use the command line, see: How to use the Windows command line (DOS).

As seen above, at the bottom of the dir output, you’ll see how many files and directories are listed in the current directory. In this example, there are 23 files and 7 directories on the desktop.

To count a specific type of file in the current directory, you can use wildcards. For example, typing dir *.mp3 lists all the MP3 audio files in the current directory.

To count all the files and directories in the current directory and subdirectories, type dir *.* /s at the prompt.
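If you need the same counts from a script rather than from dir’s summary line, the following Python sketch is one way to approximate them. It is not part of the original article: count_entries is a hypothetical helper, and it differs from dir in the ways noted in its docstring.

```python
import fnmatch
import os


def count_entries(path=".", pattern="*", recursive=False):
    """Return (files, dirs) whose names match the shell-style pattern.

    Rough analogue of "dir pattern" (recursive=False) or "dir pattern /s"
    (recursive=True). Differences from dir: "." and ".." are never counted,
    hidden and system files are included, and fnmatch's "*.*" only matches
    names that contain a dot, so use "*" to match everything.
    """
    files = dirs = 0
    for root, dirnames, filenames in os.walk(path):
        files += sum(1 for name in filenames if fnmatch.fnmatch(name, pattern))
        dirs += sum(1 for name in dirnames if fnmatch.fnmatch(name, pattern))
        if not recursive:
            break  # stop after the top-level directory
    return files, dirs


if __name__ == "__main__":
    print(count_entries(".", "*.mp3"))              # similar to: dir *.mp3
    print(count_entries(".", "*", recursive=True))  # similar to: dir *.* /s
```

fnmatch follows the operating system’s case rules, so "*.MP3" and "*.mp3" are equivalent on Windows but not on Linux.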

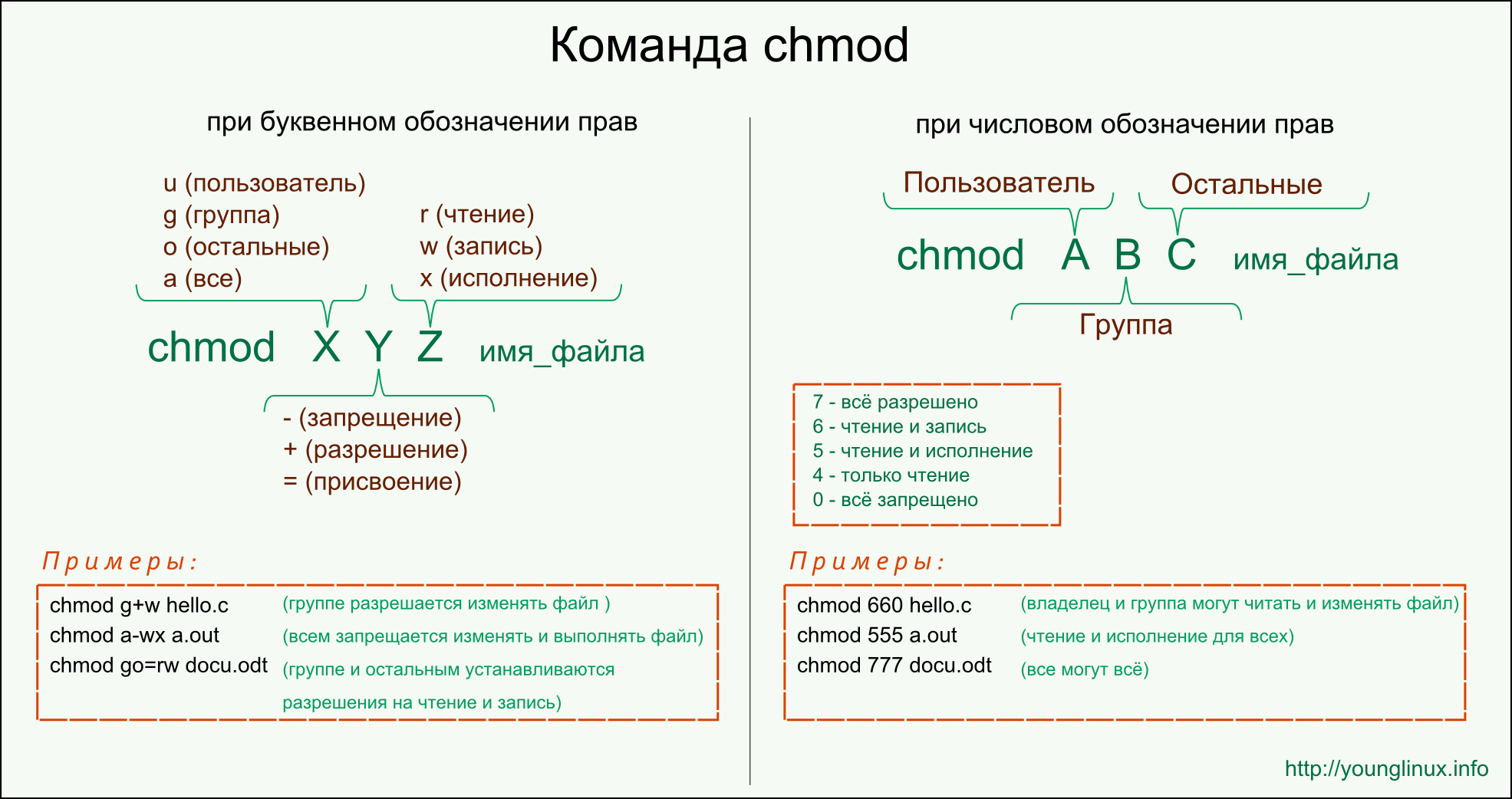

Counting files in Linux

To list the count of files in Linux, use the ls command piped into the wc command, as shown below.

To prevent any confusion, the above command reads ls

This command lists files in a bare format and pipes the output into the wc command, which counts how many files are listed. When done properly, the terminal returns a single number indicating how many lines were counted and then returns you to the prompt.

Keep in mind that this is also counting the ./ and ../ directories.

You can also add the grep command to find a more exact count of the files you want to count, as shown below.

In the example above, the command only counts files that begin with the letter "a". The regular expression ^a could be replaced with any valid grep regular expression, or you could replace the letter "a" with another letter.
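The shell pipeline itself is not reproduced here, but the counting idea (list names, optionally keep only those matching a grep-style regular expression, then count them) can be sketched in Python. This is an illustration, not the article’s command; count_names is a hypothetical helper, and unlike the pipeline described above it never counts the . and .. entries.

```python
import os
import re


def count_names(path=".", regex=None, include_hidden=False):
    """Count entries in path, optionally only those whose names match regex.

    The regex is searched the way grep does, so "^a" means "starts with a".
    os.listdir never returns "." or "..", so those are never counted, and
    names starting with "." are skipped unless include_hidden is True.
    """
    names = os.listdir(path)
    if not include_hidden:
        names = [n for n in names if not n.startswith(".")]
    if regex is not None:
        pattern = re.compile(regex)
        names = [n for n in names if pattern.search(n)]
    return len(names)


if __name__ == "__main__":
    print(count_names("."))        # every visible entry
    print(count_names(".", "^a"))  # entries whose names begin with "a"
```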

Command to list all files in a folder as well as sub-folders in Windows

I tried searching for a command that could list all the files in a directory, as well as in its subfolders, from the command prompt. I have read the help for the "dir" command but couldn’t find what I was looking for. What command can do this?

6 Answers

The below post gives the solution for your scenario.

/S Displays files in specified directory and all subdirectories.

/B Uses bare format (no heading information or summary).

/O Lists files in sorted order.

If you want to list folders and files like graphical directory tree, you should use tree command.

There are various options for display format or ordering.

Check example output.

Answering late. Hope it helps someone.
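For a tree-style view produced by a script, here is a small Python sketch. It is only loosely modeled on the look of the tree command’s ASCII output (tree /f /a); tree_lines is a hypothetical name, and the exact characters and ordering are this sketch’s own choices.

```python
import os


def tree_lines(path, prefix=""):
    """Yield an ASCII tree of everything under path, one line per entry."""
    entries = sorted(os.scandir(path), key=lambda e: e.name)
    for i, entry in enumerate(entries):
        last = i == len(entries) - 1
        yield prefix + ("\\---" if last else "+---") + entry.name
        # Recurse into real directories only; symlinked directories are not followed.
        if entry.is_dir(follow_symlinks=False):
            yield from tree_lines(entry.path, prefix + ("    " if last else "|   "))


if __name__ == "__main__":
    for line in tree_lines("."):
        print(line)
```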

An addition to the answer: when you do not want to list the folders, only the files in the subfolders, use /A-D switch like this:
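The /S and /B switches, optionally combined with /A-D, can be approximated in Python with os.walk. This is a sketch, not a drop-in replacement: list_tree is a hypothetical helper, and plain alphabetical sorting of full paths only approximates what dir’s /O switch produces.

```python
import os


def list_tree(path=".", files_only=False):
    """Return full paths of entries under path, roughly like "dir /s /b".

    With files_only=True, directories are left out, similar to adding /a-d.
    """
    results = []
    for root, dirnames, filenames in os.walk(path):
        if not files_only:
            results.extend(os.path.join(root, d) for d in dirnames)
        results.extend(os.path.join(root, f) for f in filenames)
    return sorted(results)  # alphabetical by full path


if __name__ == "__main__":
    for p in list_tree(".", files_only=True):
        print(p)
```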

An alternative to the above commands that is a little more bulletproof.

It can list all files irrespective of permissions or path length.

I have a slight issue with the use of C:\NULL which I have written about in my blog

But nevertheless it’s the most robust command I know.

If you simply need a basic snapshot of the files and folders, follow these simple steps:

- Press Windows + R

- Type cmd

- Press Enter

- Type dir /s

- Press Enter

The following commands work on Linux or Mac. On Windows, you can use them in Git Bash.

List all files, first level folders, and their contents

List all first-level subdirectories and files

What is better for performance — many files in one directory, or many subdirectories each with one file?

While building web applications, we often have files associated with database entries. For example, we have a user table, and each user has an avatar field that holds the path to the associated image.

To make sure there are no conflicts in filenames we can either:

- rename files upon upload to ID.jpg ; the path would be then /user-avatars/ID.jpg

- or create a sub-directory for each entity, and leave the original filename intact; the path would be then /user-avatars/ID/original_filename.jpg

where ID is the user’s unique ID number.

Both are perfectly valid from the application logic’s point of view.

But which one would be better from a filesystem performance point of view? Keep in mind that the number of user entries can be very high (millions).

Is there any limit to a number of sub-directories a directory can hold?

4 Answers

It’s going to depend on your file system, but I’m going to assume you’re talking about something simple like ext3 and that you’re not running a distributed file system (some of which are quite good at this). In general, file systems perform poorly beyond a certain number of entries in a single directory, regardless of whether those entries are directories or files. So whether you create one directory per image or put every image in the root directory, you will run into scaling problems. If you look at this answer:

You’ll see that ext3 runs into limits at about 32K entries in a directory, far fewer than you’re proposing.
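One common workaround, which this answer does not spell out but which later answers describe in similar forms, is to nest directories so that no single directory grows large. The sketch below is hypothetical: avatar_path, the two-level layout, and the fanout of 1,000 are illustrative assumptions. With these defaults there are at most 1,000 entries at each intermediate level, and a few million users spread to only a handful of files per leaf directory.

```python
def avatar_path(user_id: int, levels: int = 2, fanout: int = 1000) -> str:
    """Build a hypothetical nested path such as /user-avatars/567/234/1234567.jpg.

    Each level uses the next base-`fanout` digit of the ID (least significant
    first), so every intermediate directory holds at most `fanout` entries.
    """
    width = len(str(fanout - 1))  # zero-pad so directory names look uniform
    parts = []
    n = user_id
    for _ in range(levels):
        parts.append(f"{n % fanout:0{width}d}")
        n //= fanout
    return "/user-avatars/" + "/".join(parts) + f"/{user_id}.jpg"


if __name__ == "__main__":
    print(avatar_path(1234567))  # /user-avatars/567/234/1234567.jpg
```

Using the least significant digits first means that sequentially assigned IDs fill all directories evenly instead of piling into one.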

Iterate all files in a directory using a ‘for’ loop

How can I iterate over each file in a directory using a for loop?

And how could I tell if a certain entry is a directory or if it’s just a file?

17 Answers

This lists all the files (and only the files) in the current directory:

Also if you run that command in a batch file you need to double the % signs.

This thread can be really useful too: stackoverflow.com/questions/112055/…. – Sk8erPeter Dec 21 ’11 at 21:25

- Files in the current directory: for %f in (.\*) do @echo %f

- Subdirectories in the current directory: for /D %s in (.\*) do @echo %s

- Files in the current directory and all subdirectories: for /R %f in (.\*) do @echo %f

- Subdirectories in the current directory and all subdirectories: for /R /D %s in (.\*) do @echo %s

Unfortunately, I did not find any way to iterate over files and subdirectories at the same time.

Just use Cygwin with its bash for much more functionality.

Apart from this, did you notice that the built-in help of MS Windows is a great resource for descriptions of cmd’s command-line syntax?
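Outside cmd, a single pass can cover both files and subdirectories and tell them apart, which answers both parts of the question. The Python sketch below is an alternative to the cmd loops above, not a cmd technique; classify_entries is a hypothetical helper.

```python
import os


def classify_entries(path="."):
    """Map each entry name in path to "file", "dir", or "other"."""
    result = {}
    with os.scandir(path) as it:
        for entry in it:
            if entry.is_dir():
                result[entry.name] = "dir"
            elif entry.is_file():
                result[entry.name] = "file"
            else:
                result[entry.name] = "other"  # e.g. a broken symlink or a socket
    return result


if __name__ == "__main__":
    for name, kind in sorted(classify_entries(".").items()):
        print(kind, name)
```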

To iterate over each file a for loop will work:

for %%f in (directory\path\*) do ( something_here )

In my case, I also wanted the file content, name, etc.

This led to a few issues, and I thought my use case might help. Here is a loop that reads info from each ‘.txt’ file in a directory and allows you to do something with it (setx, for instance).
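The answer’s batch loop is not shown here. As a hedged Python sketch of the same idea (visit each .txt file in a directory and read its contents), assuming UTF-8 text files, with read_txt_files as a hypothetical name:

```python
import glob
import os


def read_txt_files(directory):
    """Return {file name: stripped contents} for each *.txt file in directory."""
    contents = {}
    pattern = os.path.join(glob.escape(directory), "*.txt")  # escape [ ] * ? in the path
    for path in sorted(glob.glob(pattern)):
        if os.path.isfile(path):  # skip directories whose names end in .txt
            with open(path, encoding="utf-8") as f:
                contents[os.path.basename(path)] = f.read().strip()
    return contents


if __name__ == "__main__":
    for name, text in read_txt_files(".").items():
        print(name, "->", text[:40])
```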

There is a subtle difference between running FOR from the command line and from a batch file. In a batch file, you need to put two % characters in front of each variable reference.

From a command line:

From a batch file:

This for-loop will list all files in a directory.

"delims=" is useful for showing long filenames that contain spaces.

"/b" shows only names, not sizes, dates, etc.

Some things to know about dir’s /a argument.

- Plain "/a" lists everything, including entries with the Hidden and System attributes.

- "/ad" shows only subdirectories, including hidden and system ones.

- "/a-d" excludes entries with the Directory attribute.

- "/a-d-h-s" shows everything except entries with the Directory, Hidden, or System attribute.

If you use this on the command line, use a single % instead of %%.
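To mirror these /a filters in a script, the following Python sketch filters directory entries by the Directory and Hidden attributes. It assumes that "hidden" means the Windows Hidden attribute where Python exposes it (st_file_attributes) and a leading dot in the name elsewhere; the System attribute is not modeled, and list_like_dir_a is a hypothetical name.

```python
import os
import stat


def is_hidden(entry):
    """Windows Hidden attribute when available, otherwise a leading dot."""
    attrs = getattr(entry.stat(follow_symlinks=False), "st_file_attributes", None)
    if attrs is not None:
        return bool(attrs & stat.FILE_ATTRIBUTE_HIDDEN)
    return entry.name.startswith(".")


def list_like_dir_a(path=".", dirs=None, hidden=None):
    """Filter entries roughly like dir's /a switch.

    dirs=True keeps only directories (/ad); dirs=False drops them (/a-d).
    hidden=True keeps only hidden entries; hidden=False drops them (/a-h).
    None leaves that attribute unfiltered.
    """
    names = []
    with os.scandir(path) as it:
        for entry in it:
            if dirs is not None and entry.is_dir(follow_symlinks=False) != dirs:
                continue
            if hidden is not None and is_hidden(entry) != hidden:
                continue
            names.append(entry.name)
    return sorted(names)
```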

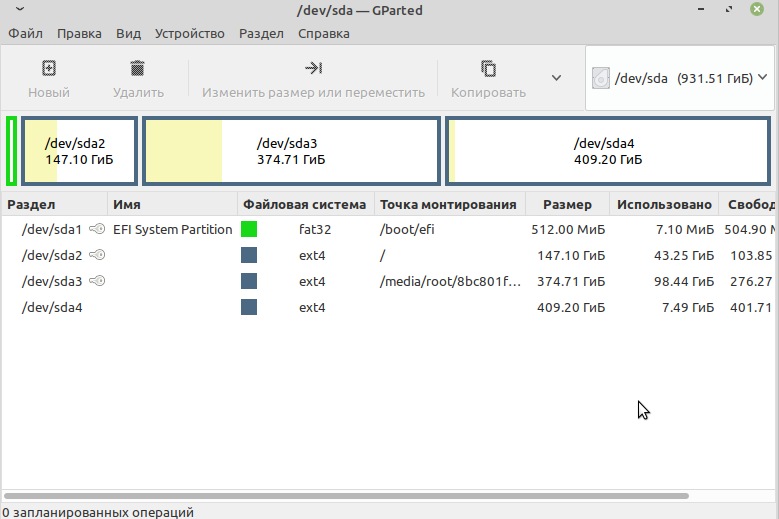

How many files can I put in a directory?

Does it matter how many files I keep in a single directory? If so, how many files in a directory is too many, and what are the impacts of having too many files? (This is on a Linux server.)

Background: I have a photo album website, and every image uploaded is renamed to an 8-hex-digit id (say, a58f375c.jpg). This is to avoid filename conflicts (if lots of "IMG0001.JPG" files are uploaded, for example). The original filename and any useful metadata are stored in a database. Right now, I have somewhere around 1500 files in the images directory. This makes listing the files in the directory (through an FTP or SSH client) take a few seconds. But I can’t see that it has any effect other than that. In particular, there doesn’t seem to be any impact on how quickly an image file is served to the user.

I’ve thought about reducing the number of images by making 16 subdirectories: 0-9 and a-f. Then I’d move the images into the subdirectories based on what the first hex digit of the filename was. But I’m not sure that there’s any reason to do so except for the occasional listing of the directory through FTP/SSH.
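The 16-subdirectory idea from the question can be sketched as follows. shard_path and move_into_shards are hypothetical helpers; the sketch assumes filenames start with hex digits as described above, and it moves files in place, so try it on a copy first.

```python
import os


def shard_path(root, filename, digits=1):
    """Return root/<first `digits` characters, lowercased>/filename.

    digits=1 gives 16 buckets (0-9, a-f); digits=2 gives 256.
    """
    return os.path.join(root, filename[:digits].lower(), filename)


def move_into_shards(root, digits=1):
    """Move every regular file directly inside root into its bucket."""
    for name in os.listdir(root):  # listed once, up front
        src = os.path.join(root, name)
        if os.path.isfile(src):  # bucket directories are not files, so they are skipped
            dst = shard_path(root, name, digits)
            os.makedirs(os.path.dirname(dst), exist_ok=True)
            os.replace(src, dst)
```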

21 Answers

FAT32:

- Maximum number of files: 268,173,300

- Maximum number of files per directory: $2^{16} - 1$ (65,535)

- Maximum file size: 2 GiB − 1 without LFS, 4 GiB − 1 with LFS

NTFS:

- Maximum number of files: $2^{32} - 1$ (4,294,967,295)

- Maximum file size
  - Implementation: $2^{44} - 2^{6}$ bytes (16 TiB − 64 KiB)
  - Theoretical: $2^{64} - 2^{6}$ bytes (16 EiB − 64 KiB)

- Maximum volume size
  - Implementation: $2^{32} - 1$ clusters (256 TiB − 64 KiB)
  - Theoretical: $2^{64} - 1$ clusters (1 YiB − 64 KiB)

ext2:

- Maximum number of files: $10^{18}$

- Maximum number of files per directory: $1.3 \times 10^{20}$ (performance issues past 10,000)

- Maximum file size
  - 16 GiB (block size of 1 KiB)
  - 256 GiB (block size of 2 KiB)
  - 2 TiB (block size of 4 KiB)
  - 2 TiB (block size of 8 KiB)

- Maximum volume size
  - 4 TiB (block size of 1 KiB)
  - 8 TiB (block size of 2 KiB)
  - 16 TiB (block size of 4 KiB)
  - 32 TiB (block size of 8 KiB)

ext3:

- Maximum number of files: $\min(\text{volumeSize} / 2^{13}, \text{numberOfBlocks})$

- Maximum file size: same as ext2

- Maximum volume size: same as ext2

ext4:

- Maximum number of files: $2^{32} - 1$ (4,294,967,295)

- Maximum number of files per directory: unlimited

- Maximum file size: $2^{44} - 1$ bytes (16 TiB − 1)

- Maximum volume size: $2^{48} - 1$ bytes (256 TiB − 1)

I have had over 8 million files in a single ext3 directory. The bottleneck when listing such a large directory is libc’s readdir(), which find, ls, and most of the other methods discussed in this thread rely on.

The reason ls and find are slow in this case is that readdir() only reads 32K of directory entries at a time, so on slow disks it requires many reads to list a directory. There is a solution to this speed problem. I wrote a pretty detailed article about it at: http://www.olark.com/spw/2011/08/you-can-list-a-directory-with-8-million-files-but-not-with-ls/

The key takeaway is: use getdents() directly (http://www.kernel.org/doc/man-pages/online/pages/man2/getdents.2.html) rather than anything based on libc readdir(), so you can specify the buffer size when reading directory entries from disk.

I have a directory with 88,914 files in it. Like yourself this is used for storing thumbnails and on a Linux server.

Listing files via FTP or a PHP function is slow, yes, but there is also a performance hit on displaying the file. For example, www.website.com/thumbdir/gh3hg4h2b4h234b3h2.jpg has a wait time of 200-400 ms. By comparison, on another site I have with around 100 files in a directory, the image is displayed after just 40 ms of waiting.

I’ve given this answer because most people have only written about how directory search functions perform, which you won’t be using on a thumbnail folder (you’ll just be statically displaying files). What you will care about is how quickly the files can actually be served.

It depends a bit on the specific filesystem in use on the Linux server. Nowadays the default is ext3 with dir_index, which makes searching large directories very fast.

So speed shouldn’t be an issue, other than the one you already noted, which is that listings will take longer.

There is a limit to the total number of files in one directory. I seem to remember it definitely working up to 32000 files.

Keep in mind that on Linux if you have a directory with too many files, the shell may not be able to expand wildcards. I have this issue with a photo album hosted on Linux. It stores all the resized images in a single directory. While the file system can handle many files, the shell can’t. Example:

400,000 files in a directory. I was able to trim the older files with «find» to the point where I could «rm» with a wildcard. – PJ Brunet Mar 15 ’13 at 3:47
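The underlying problem is that a wildcard such as rm * is expanded by the shell into one enormous argument list. As an alternative to the find approach mentioned in the comment (not the commenter’s exact command), Python can read the directory with os.scandir and delete old files without any shell expansion. remove_older_than is a hypothetical helper, and it deletes files, so test it on a copy first.

```python
import os
import time


def remove_older_than(path, days):
    """Delete regular files in path not modified for more than `days` days.

    No shell wildcard is expanded, so the number of files is not limited
    by the maximum command-line length.
    """
    cutoff = time.time() - days * 86400
    # Collect first, then delete, so the directory is not modified mid-scan.
    to_delete = []
    with os.scandir(path) as it:
        for entry in it:
            if (entry.is_file(follow_symlinks=False)
                    and entry.stat(follow_symlinks=False).st_mtime < cutoff):
                to_delete.append(entry.path)
    for p in to_delete:
        os.remove(p)
    return len(to_delete)
```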

I’m working on a similar problem right now. We have a hierarchical directory structure and use image IDs as filenames. For example, an image with id=1234567 is placed in

using last 4 digits to determine where the file goes.

With a few thousand images, you could use a one-level hierarchy. Our sysadmin suggested no more than couple of thousand files in any given directory (ext3) for efficiency / backup / whatever other reasons he had in mind.

For what it’s worth, I just created a directory on an ext4 file system with 1,000,000 files in it, then randomly accessed those files through a web server. I didn’t notice any premium on accessing those over (say) only having 10 files there.

This is radically different from my experience doing this on NTFS a few years back.

The biggest issue I’ve run into is on a 32-bit system. Once you pass a certain number, tools like ‘ls’ stop working.

Trying to do anything with that directory once you pass that barrier becomes a huge problem.

I’ve been having the same issue: trying to store millions of files on an Ubuntu server in ext4. I ended up running my own benchmarks and found that a flat directory performs way better while being way simpler to use:

If the time involved in implementing a directory partitioning scheme is minimal, I am in favor of it. The first time you have to debug a problem that involves manipulating a 10000-file directory via the console you will understand.

As an example, F-Spot stores photo files as YYYY\MM\DD\filename.ext, which means the largest directory I have had to deal with while manually manipulating my 20000-photo collection is about 800 files. This also makes the files more easily browsable from a third-party application. Never assume that your software is the only thing that will be accessing your software’s files.

It absolutely depends on the filesystem. Many modern filesystems use decent data structures to store the contents of directories, but older filesystems often just added the entries to a list, so retrieving a file was an O(n) operation.

Even if the filesystem does it right, it’s still absolutely possible for programs that list directory contents to mess up and do an O(n^2) sort, so to be on the safe side, I’d always limit the number of files per directory to no more than 500.

It really depends on the filesystem used, and also some flags.

For example, ext3 can have many thousands of files; but after a couple of thousands, it used to be very slow. Mostly when listing a directory, but also when opening a single file. A few years ago, it gained the ‘htree’ option, that dramatically shortened the time needed to get an inode given a filename.

Personally, I use subdirectories to keep most levels under a thousand or so items. In your case, I’d create 256 directories, with the two last hex digits of the ID. Use the last and not the first digits, so you get the load balanced.
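To see why the last digits balance the load better, consider IDs that are assigned sequentially. That is an assumption for illustration: the question’s IDs may be random, in which case first and last digits both spread evenly. The sketch counts how many IDs land in each two-hex-digit bucket; bucket_sizes is a hypothetical helper.

```python
from collections import Counter


def bucket_sizes(hex_ids, use_last=True):
    """Count how many IDs land in each of up to 256 two-hex-digit buckets."""
    return Counter(h[-2:] if use_last else h[:2] for h in hex_ids)


# Hypothetical sequential IDs 00000000 .. 0000270f (10,000 of them).
ids = [f"{n:08x}" for n in range(10_000)]

first = bucket_sizes(ids, use_last=False)
last = bucket_sizes(ids, use_last=True)
print(len(first), max(first.values()))                    # 1 10000
print(len(last), min(last.values()), max(last.values()))  # 256 39 40
```

With the first two digits, every sequential ID shares the prefix "00" and lands in one directory; with the last two, the 10,000 IDs are spread over all 256 directories at 39 or 40 files each.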

ext3 does in fact have directory size limits, and they depend on the block size of the filesystem. There isn’t a per-directory «max number» of files, but a per-directory «max number of blocks used to store file entries». Specifically, the size of the directory itself can’t grow beyond a b-tree of height 3, and the fanout of the tree depends on the block size. See this link for some details.

I was bitten by this recently on a filesystem formatted with 2K blocks, which was inexplicably getting directory-full kernel messages warning: ext3_dx_add_entry: Directory index full! when I was copying from another ext3 filesystem. In my case, a directory with a mere 480,000 files was unable to be copied to the destination.

The question comes down to what you’re going to do with the files.

Under Windows, any directory with more than 2k files tends to open slowly for me in Explorer. If they’re all image files, more than 1k tend to open very slowly in thumbnail view.

At one time, the system-imposed limit was 32,767. It’s higher now, but even that is way too many files to handle at one time under most circumstances.

What most of the answers above fail to show is that there is no «One Size Fits All» answer to the original question.

In today’s environment we have a large conglomerate of different hardware and software — some is 32 bit, some is 64 bit, some is cutting edge and some is tried and true — reliable and never changing. Added to that is a variety of older and newer hardware, older and newer OSes, different vendors (Windows, Unixes, Apple, etc.) and a myriad of utilities and servers that go along. As hardware has improved and software is converted to 64 bit compatibility, there has necessarily been considerable delay in getting all the pieces of this very large and complex world to play nicely with the rapid pace of changes.

IMHO there is no one way to fix a problem. The solution is to research the possibilities and then by trial and error find what works best for your particular needs. Each user must determine what works for their system rather than using a cookie cutter approach.

I, for example, have a media server with a few very large files. The result is only about 400 files filling a 3 TB drive. Only 1% of the inodes are used, but 95% of the total space is used. Someone else, with a lot of smaller files, may run out of inodes before they come near to filling the space. (On ext4 filesystems, as a rule of thumb, 1 inode is used for each file/directory.) While the number of files a directory can theoretically contain is nearly infinite, in practice overall usage, not just filesystem capabilities, determines realistic limits.
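On Linux and other Unix systems, inode usage and space usage can be compared from Python with os.statvfs (df -i shows the inode figures from the shell). usage_percent is a hypothetical helper. Its space figure counts root-reserved blocks as free, so it may differ slightly from df’s Use% column.

```python
import os


def usage_percent(path="/"):
    """Percentage of inodes and of blocks in use on the filesystem holding path.

    Unix only (os.statvfs). A value is None when the filesystem reports a
    total of 0, as some filesystems do for inodes.
    """
    st = os.statvfs(path)

    def pct(total, free):
        return None if total == 0 else 100.0 * (total - free) / total

    return {
        "inodes_pct": pct(st.f_files, st.f_ffree),
        "space_pct": pct(st.f_blocks, st.f_bfree),
    }


if __name__ == "__main__":
    print(usage_percent("/"))
```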

I hope that all the different answers above have promoted thought and problem solving rather than presenting an insurmountable barrier to progress.