5 useful tools to detect memory leaks with examples

In this tutorial I will share different methods and tools to detect and locate memory leaks in Linux processes. As developers we often face situations where a process such as Apache httpd or a Java application starts consuming a large amount of memory, leading to OOM (Out Of Memory) situations.

It is therefore good practice to keep monitoring the memory usage of critical processes. I work on a memory-intensive application, so it is my job to make sure other processes are not eating up memory unnecessarily. To do that I use different tools in live environments to detect memory leaks and then report them to the responsible developers.

What is Memory Leak?

- Memory is allocated on demand—using malloc() or one of its variants—and memory is freed when it’s no longer needed.

- A memory leak occurs when memory is allocated but not freed when it is no longer needed.

- Leaks can obviously be caused by a malloc() without a corresponding free(), but leaks can also be inadvertently caused if a pointer to dynamically allocated memory is deleted, lost, or overwritten.

- Buffer overruns—caused by writing past the end of a block of allocated memory—frequently corrupt memory.

- Memory leakage is by no means unique to embedded systems, but it becomes an issue partly because targets don’t have much memory in the first place and partly because they often run for long periods of time without rebooting, allowing the leaks to become a large puddle.

- Regardless of the root cause, memory management errors can have unexpected, even devastating effects on application and system behavior.

- With dwindling available memory, processes and entire systems can grind to a halt, while corrupted memory often leads to spurious crashes.

Before you go further, I recommend reading about Linux memory management so that you are familiar with the memory-related terminology used by the Linux kernel.

1. Memwatch

- MEMWATCH, written by Johan Lindh, is an open-source memory error-detection tool for C.

- It can be downloaded from https://sourceforge.net/projects/memwatch

- By simply adding a header file to your code and defining MEMWATCH in your gcc command, you can track memory leaks and corruptions in a program.

- MEMWATCH supports ANSI C; provides a log of the results; and detects double frees, erroneous frees, unfreed memory, overflow and underflow, and so on.

I have downloaded and extracted memwatch on my Linux server, as you can see in the screenshot:

Before compiling the software, we must comment out the line below in test.c, which is part of the memwatch archive.

Next, compile the software:

Next we will create a dummy C program, memory1.c, and add the memwatch.h include on line 3 so that MEMWATCH can be enabled. In addition, the two compile-time flags -DMEMWATCH and -DMW_STDIO need to be added to the compile statement for each source file in the program.

The code allocates two 512-byte blocks of memory (lines 10 and 11), and then the pointer to the second block is overwritten with the address of the first block (line 13). As a result, the address of the second block is lost, and a memory leak occurs.

Now compile the memwatch.c file, which is part of the MEMWATCH package, together with the sample source code (memory1.c). The following is a sample makefile for building memory1.c; memory1 is the executable it produces:

Next we execute the memory1 program, in which MEMWATCH captures two memory-management anomalies.

MEMWATCH creates a log called memwatch.log when the memory1 program runs, as you can see below.

MEMWATCH tells you which line has the problem. For a free of an already freed pointer, it identifies that condition. The same goes for unfreed memory. The section at the end of the log displays statistics, including how much memory was leaked, how much was used, and the total amount allocated.

In the above figure you can see that the first memory-management error occurs on line 15, which shows a double free of memory. The next error is a memory leak of 512 bytes; that memory was allocated on line 11.

2. Valgrind

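- Valgrind is an instrumentation framework whose default tool, Memcheck, runs the program on a synthetic CPU so that it can watch all memory accesses directly and analyze data flow. It began as an x86-only tool but now supports other architectures as well, including x86-64, ARM, and PowerPC.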

- One advantage is that you don’t have to recompile the programs and libraries that you want to check, although it works better if they have been compiled with the -g option so that they include debug symbol tables.

- It works by running the program in an emulated environment and trapping execution at various points.

- This leads to the big downside of Valgrind, which is that the program runs at a fraction of normal speed, which makes it less useful in testing anything with real-time constraints.

Valgrind can detect problems such as:

- Use of uninitialized memory

- Reading and writing memory after it has been freed

- Reading and writing from memory past the allocated size

- Reading and writing inappropriate areas on the stack

- Memory leaks

- Passing of uninitialized and/or unaddressable memory to system calls

- Mismatched use of malloc/new/new[] versus free/delete/delete[]

Valgrind is available in most Linux distributions, so you can install the tool directly from your distribution's repositories.

Next, run valgrind with the program you wish to check for memory leaks. For example, I wish to check the amsHelper process, which is started with the -f option. Press Ctrl+C to stop monitoring.

To save the output to a log file and collect more details on the leak, use --leak-check=full along with --log-file=/path/to/log/file. Press Ctrl+C to stop monitoring.
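The original command listing is not shown here, so below is a minimal sketch of how such an invocation could be assembled and launched from Python. The --leak-check=full and --log-file options are standard Valgrind options; the helper names valgrind_argv and run_under_valgrind are hypothetical, and amsHelper is assumed to be found on the PATH.

```python
import shlex
import subprocess


def valgrind_argv(program_argv, full_leak_check=False, log_file=None):
    """Build a Valgrind command line for the given program and its arguments."""
    argv = ["valgrind"]
    if full_leak_check:
        # Report every individual leak in detail instead of only a summary.
        argv.append("--leak-check=full")
    if log_file is not None:
        # Write Valgrind's report to a file instead of stderr.
        argv.append(f"--log-file={log_file}")
    return argv + list(program_argv)


def run_under_valgrind(program_argv, **options):
    """Start the program under Valgrind and return its exit status."""
    return subprocess.run(valgrind_argv(program_argv, **options)).returncode


if __name__ == "__main__":
    # Only print the command here, since amsHelper may not exist on this host.
    argv = valgrind_argv(
        ["amsHelper", "-f"],
        full_leak_check=True,
        log_file="/tmp/mem-leak-amsHelper.log",
    )
    print(shlex.join(argv))
```

Keeping the command as a list of arguments avoids shell quoting problems if the program path or log path contains spaces.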

Now you can check the content of /tmp/mem-leak-amsHelper.log. When it finds a problem, the Valgrind output has the following format:

3. Memleax

One of the drawbacks of Valgrind is that you cannot check for memory leaks in an already running process, which is where memleax comes to the rescue. I have had instances where the memory leak in the amsHelper process was very sporadic, so when I saw the process reserving memory I wanted to debug that specific PID instead of starting a new instance for analysis.

memleax debugs memory leaks in a running process by attaching to it. It hooks the target process's calls to the memory allocation and free functions, and reports in real time the memory blocks that live longer than an expiry threshold as leaks. The default expiry threshold is 10 seconds, but you should always set it with the -e option to suit your scenario.

You can download memleax from the official GitHub repository.

memleax has a few dependencies which you must install before installing the memleax rpm.

Since this is a private network I could not use yum or dnf, so I manually copied these rpms over from my official repository.

Now that both dependencies are installed, I will go ahead and install the memleax rpm:

Next you need the PID of the process which you wish to monitor. You can get it from the output of ps -ef.
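If you prefer to script this step, here is a small sketch that pulls matching PIDs out of ps -ef output. The function names are hypothetical, and the parsing assumes the usual Linux column layout (UID PID PPID C STIME TTY TIME CMD); the sample rows, including the /usr/sbin/amsHelper path, are made up for illustration.

```python
import subprocess


def pids_matching(ps_output, name):
    """Return the PIDs from `ps -ef` output whose command column contains name."""
    pids = []
    for line in ps_output.splitlines()[1:]:  # skip the header row
        # Split into at most 8 fields so the command keeps its arguments.
        fields = line.split(None, 7)
        if len(fields) == 8 and name in fields[7]:
            pids.append(int(fields[1]))
    return pids


def find_pids(name):
    """Run `ps -ef` and return the PIDs of processes whose command contains name."""
    result = subprocess.run(
        ["ps", "-ef"], capture_output=True, text=True, check=True
    )
    return pids_matching(result.stdout, name)


# Made-up sample rows, used only to demonstrate the parsing.
SAMPLE_PS_OUTPUT = """\
UID        PID  PPID  C STIME TTY          TIME CMD
root         1     0  0 Jan01 ?        00:00:05 /usr/lib/systemd/systemd
root     45256     1  0 10:22 ?        00:00:01 /usr/sbin/amsHelper -f
"""

if __name__ == "__main__":
    print(pids_matching(SAMPLE_PS_OUTPUT, "amsHelper"))
```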

Here we wish to check PID 45256 for memory leaks.

You may get output similar to the above if there is a memory leak in the application process. Press Ctrl+C to stop monitoring.

4. Collecting core dump

It sometimes helps the developer if we can share a core dump of the process which is leaking memory. On Red Hat/CentOS you can collect core dumps using abrt and abrt-addon-ccpp.

Before you start, make sure the system is set up to generate application cores by removing the core limits:

Next, install these rpms in your environment.

Ensure the ccpp hooks are installed:

Ensure that this service is up and the ccpp hook to capture core dumps is enabled:

Enable the hooks:

To get a list of crashes on the command line, issue the following command:

Since there are no crashes yet, the output will be empty. Next, get the PID for which you wish to collect a core dump; here, for example, I will collect one for PID 45256.

Next we have to send SIGABRT (signal 6, i.e. kill -6) to this PID to generate the core dump.
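The same signal can be sent from a script. The sketch below uses Python's os.kill with signal.SIGABRT, which is the equivalent of kill -6; the function name send_abort is hypothetical. To stay harmless, the demo aborts a throwaway child process rather than a real service. Whether a core file is actually written depends on the core limits and hooks configured earlier.

```python
import os
import signal
import subprocess
import sys


def send_abort(pid):
    """Send SIGABRT (signal 6) to pid; by default this terminates it with a core dump."""
    os.kill(pid, signal.SIGABRT)


if __name__ == "__main__":
    # Start a disposable child that just sleeps, then abort it.
    child = subprocess.Popen([sys.executable, "-c", "import time; time.sleep(60)"])
    send_abort(child.pid)
    child.wait()
    # A negative return code means the child was killed by that signal number.
    print("child return code:", child.returncode)
```

Note that the target process is terminated by this signal, so only do this on a process you can afford to restart.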

Next, check the list of available dumps; you should now see a new entry for this PID. This dump will contain all the information required to analyse the leak for this process.

5. How to identify memory leak using default Linux tools

We have discussed third-party tools that detect memory leaks and provide code-level information to help the developer analyse and fix the bug. But if our requirement is just to look out for a process which is reserving memory for no reason, then we can rely on system tools such as sar, vmstat, pmap, meminfo etc.

So let's learn how to use these tools to identify a possible memory leak. Before you start, you must be familiar with the areas below:

- How to check the actual memory consumed by an individual process

- How much memory reservation is normal for your application process

If you have answers to the above questions then it will be easier to analyse the problem.

For example, in my case I know the memory usage of amsHelper should not be more than a few MB, but when it leaks, its memory reservation goes far beyond that. If you have read my earlier article where I explained different tools to check actual memory usage, you will know that Pss gives us a realistic idea of the memory consumed by the process.

pmap gives a more detailed view of the memory consumed by individual address segments and libraries of the process, as you can see below.

Alternatively, you can get the same information in more detail from the smaps file of the respective process. Here I have written a small script to add up the memory and get the total, but you can also remove the pipe and break down the command to get more details.
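As an illustration of the idea (not the original script), here is a minimal Python sketch that adds up the Pss value of every mapping in /proc/PID/smaps. The function names are hypothetical and the sample text is made up; only lines that start with exactly "Pss:" are counted, so related fields such as Pss_Anon or SwapPss on newer kernels are ignored.

```python
import re

# Matches lines such as "Pss:                  40 kB" but not "Pss_Anon:" or "SwapPss:".
_PSS_LINE = re.compile(r"^Pss:[ \t]+(\d+)[ \t]+kB$", re.MULTILINE)


def total_pss_kb(smaps_text):
    """Sum the Pss values (in kB) of all mappings in the given smaps text."""
    return sum(int(kb) for kb in _PSS_LINE.findall(smaps_text))


def process_pss_kb(pid):
    """Read /proc/<pid>/smaps and return the total Pss of the process in kB."""
    with open(f"/proc/{pid}/smaps") as smaps:
        return total_pss_kb(smaps.read())


# Made-up excerpt with two mappings, used only to demonstrate the parsing.
SAMPLE_SMAPS = """\
00400000-0040b000 r-xp 00000000 fd:00 1234 /usr/sbin/amsHelper
Size:                 44 kB
Rss:                  40 kB
Pss:                  40 kB
Pss_Anon:              0 kB
SwapPss:               0 kB
7f1c2a000000-7f1c2a021000 rw-p 00000000 00:00 0
Size:                132 kB
Rss:                  12 kB
Pss:                  12 kB
"""

if __name__ == "__main__":
    print(total_pss_kb(SAMPLE_SMAPS), "kB")
```

Reading another user's smaps file usually requires root, so run process_pss_kb with sufficient privileges.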

So you can set up a cron job or create a daemon to periodically monitor the memory consumption of your application with these tools and find out whether it consumes too much memory over time.
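A monitoring loop of this kind could look like the sketch below. Everything in it is an assumption for illustration: read_sample is any function you supply that returns the current memory use of the process in kB (for example the summed Pss), and the leak test is a deliberately simple heuristic that flags usage which never drops between samples and grows by at least a chosen amount overall.

```python
import time


def looks_like_leak(samples_kb, min_growth_kb):
    """Heuristic: usage never dropped between samples and grew by min_growth_kb or more."""
    if len(samples_kb) < 2:
        return False
    never_dropped = all(
        later >= earlier for earlier, later in zip(samples_kb, samples_kb[1:])
    )
    return never_dropped and samples_kb[-1] - samples_kb[0] >= min_growth_kb


def monitor(read_sample, interval_s, count, min_growth_kb):
    """Collect count samples, interval_s seconds apart, and apply the heuristic."""
    samples = []
    for i in range(count):
        samples.append(read_sample())
        if i < count - 1:
            time.sleep(interval_s)
    return looks_like_leak(samples, min_growth_kb)


if __name__ == "__main__":
    # Simulated readings in kB instead of a real process, for demonstration only.
    readings = iter([4096, 5120, 9216])
    print(monitor(lambda: next(readings), 0, 3, 4096))
```

A process that is still warming up can also grow steadily, so choose the interval and the growth threshold based on what is normal for your application.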

Conclusion

In this tutorial I shared different commands, tools and methods to detect and monitor memory leaks across different types of applications such as C or C++ programs, Linux applications etc. The choice of tool will vary based on your requirements. There are many other tools, such as YAMD, Electric Fence, and gdb with core dumps, which can help you capture memory leaks.

Lastly, I hope the steps in this article to check and monitor memory leaks on Linux were helpful. Let me know your suggestions and feedback in the comment section.

Is this a memory leak in Linux Mono application?

I have been struggling to determine if what I am seeing is a memory leak in my Debian Wheezy Mono-Sgen 2.10.8.1-8 embedded application. The system has 512MB of RAM. Swap is disabled. I have been studying how Linux manages process memory to work out whether what I am seeing is in fact a memory leak somewhere in Mono-Sgen. I am pretty sure it is not my application, since I have profiled it numerous times after weeks of constant run time, and the GC memory always falls back to the baseline of the application. No objects are leaking from the viewpoint of my application. This does not mean Mono-Sgen is not leaking internally, but I have not determined this, and it may not be.

I have attempted to cap my Mono-Sgen heap since the default for mono for the large heap is 512MB, and since this is all my system has, I assumed I needed to cap it to prevent OOM from Linux. My configuration for Mono-Sgen is below:

From my understanding I am using the mark-and-sweep fixed major heap with 32MB fixed size, default nursery size of 4MB and Mono-Sgen will perform a copying collection on the major heap if any of the major heap allocation buckets fall below being 75% utilized to prevent fragmentation. I bumped the default of 66% up.

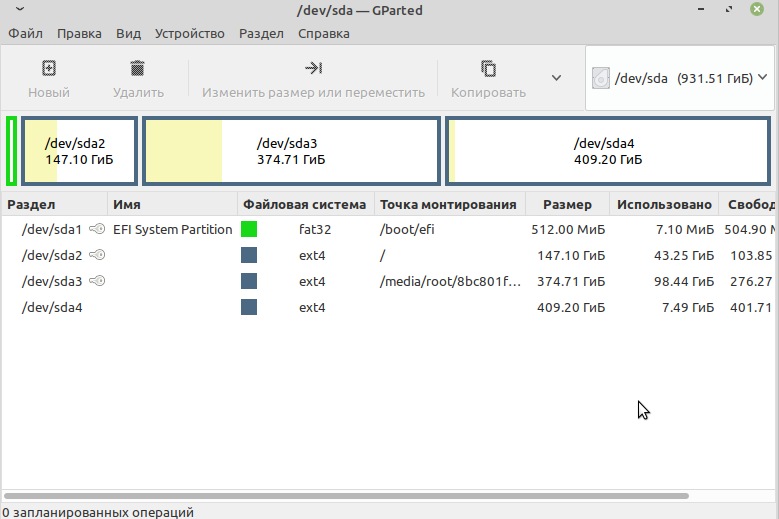

My device has currently been up for a little over 6 days since a power outage caused a reset. When my application first starts up, I wait about 10 minutes to make sure it is fully initialized, then I take a snapshot of the /proc/PID/status file to get a baseline of its memory usage. Today I took another snapshot to see where I am, and as always, the virtual, resident, and data sizes for this instance of the Mono-Sgen process have grown again. They have yet to breach the high-water marks that occurred during initialization, but the last time I ran this test they did. What I have not yet been able to do, and what I am trying to accomplish, is to let it run to the point that it exhausts all of the physical memory of the system. I need to know if this is in fact a memory leak or if at some point Linux is going to reclaim some of the pages my process has allocated.
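The snapshot comparison described above could be automated along the lines of this sketch. It assumes the usual /proc/PID/status layout, where VmPeak and VmHWM are the peak virtual and peak resident sizes (the high-water marks) and VmSize, VmRSS, and VmData are the current virtual, resident, and data sizes. The function names are hypothetical and the numbers in the demo are made up, not taken from the attached snapshots.

```python
FIELDS = ("VmPeak", "VmSize", "VmHWM", "VmRSS", "VmData")


def parse_status(status_text):
    """Extract the selected Vm* fields (in kB) from /proc/<pid>/status text."""
    values = {}
    for line in status_text.splitlines():
        key, _, rest = line.partition(":")
        if key in FIELDS:
            # The value looks like "\t  150000 kB"; keep only the number.
            values[key] = int(rest.split()[0])
    return values


def growth(baseline, current):
    """Return current minus baseline (in kB) for every field present in both."""
    return {key: current[key] - baseline[key] for key in baseline if key in current}


if __name__ == "__main__":
    # Made-up snapshots for illustration only.
    baseline = parse_status(
        "Name:\tmono-sgen\nVmPeak:\t  200000 kB\nVmSize:\t  150000 kB\n"
        "VmHWM:\t   60000 kB\nVmRSS:\t   40000 kB\nVmData:\t   70000 kB\n"
    )
    today = parse_status(
        "Name:\tmono-sgen\nVmPeak:\t  200000 kB\nVmSize:\t  160000 kB\n"
        "VmHWM:\t   60000 kB\nVmRSS:\t   45000 kB\nVmData:\t   76000 kB\n"
    )
    for field, delta_kb in growth(baseline, today).items():
        print(f"{field}: {delta_kb:+d} kB")
```

An unchanged VmPeak or VmHWM between two snapshots means the process has not exceeded its earlier high-water mark, which matches the check described above.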

One thing I have noticed is that even though I have no swap, the resident size of my Mono-Sgen process is always about 30MB less than the data count. From what I understand, the data count is the amount of heap allocation, the resident size is what is actually in physical memory, and the virtual size is what has been allocated but not necessarily used.

My assumption is that Linux is just being Linux and not wasting time or memory unless it has to. I assume that since the system is very lightly loaded, Linux has zero memory pressure to do anything to reclaim memory and just lets Mono-Sgen keep on allocating and growing the heap, and my hope is that when there is some actual memory pressure, Linux is going to step in and reclaim pages that are not really being used.

I have read that Linux will not shrink the allocated memory size of a process when free is called on previously allocated memory. I do not understand why, unless Linux, being Linux, will only do it when it has to. But my fear is how long I need to wait until this happens.

Is this a memory leak, or will Linux reclaim the page difference seen between the resident and data size of this process when memory pressure starts to kick in? I have searched and read everything I can get my hands on about the subject and I am not finding the answer I am looking for, and I really do not want to wait a month to find out if my application is going to bounce because of the OOM killer. I will anyway 🙂 But I would like to know beforehand.

I have looked into potential memory leaks with Mono-Sgen 2.10.8.1-8, but for what I am doing (using a lot of process.start() calls to native Linux applications) most of the type of bugs that would be hurting me are not in this release. I tried to update to Jessie’s version of Mono-Sgen (3.2.8 I think) but it was crashing on my system so I reverted back to the stable Mono-Sgen 2.10.8.1-8 version out of more unknown fear.

Attached are numerous snapshots of the typical memory information, with most of my attention focused on what is returned by /proc/PID/status.

Any information will be greatly appreciated as always and my hope is I just do not understand how Linux reclaims memory on a lightly loaded system.
