- How to Determine and Change File Character Encoding of Text Files in Linux Systems

- Part 1: Detect a File’s Encoding using file Linux command

- Part 2: Change a File’s Encoding using iconv Linux command

- HowTo: Check and Change File Encoding In Linux

- Check a File’s Encoding

- Change a File’s Encoding

- List All Charsets

- 8 Replies to “HowTo: Check and Change File Encoding In Linux”

- How can I test the encoding of a text file. Is it valid, and what is it?

- 4 Answers

- enca(1) — Linux man page

- Synopsis

- Introduction and Examples

- Output type selectors

- Guessing parameters

- Conversion parameters

- General options

- Listings

- Conversion

- Built-in converter

- Librecode converter

- Iconv converter

- External converter

- Default target charset

- Reversibility notes

- Performance notes

- Encodings

- Charsets

- Surfaces

- About charsets, encodings and surfaces

- Languages

- Features

- Environment

- Diagnostics

- Security

- See Also

- Known Bugs

- Trivia

- Authors

- Acknowledgements

- Copyright

How to Determine and Change File Character Encoding of Text Files in Linux Systems

Posted by: Mohammed Semari | Published: January 17, 2017| Updated: February 26, 2017

Have you ever needed to find out the encoding of a text file on Linux? Or tried to watch a movie, only to have its .srt subtitles show up as unreadable characters?

Both problems usually have the same cause: the file is being read with the wrong character encoding.

The fix is simple once you know the file's actual encoding. You can either tell the application (your media player, for example) to use the correct encoding, or convert the file to a widely accepted encoding such as UTF-8.

This post is divided into two parts. Part 1 shows how to detect a text file's encoding with the file command, which is available by default on most Linux distributions. Part 2 shows how to change a text file's encoding with the iconv command, converting between CP1256 (Windows-1256, Arabic), UTF-8, ISO-8859-1 and ASCII character sets.

Part 1: Detect a File’s Encoding using file Linux command

The file command makes “best-guesses” about the encoding. Use the following command to determine what character encoding is used by a file :
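The command line itself is missing from this copy of the post. Judging by the two options documented in the table that follows, it was probably close to the sketch below; the file name sample.txt and its contents are made up for illustration.

```shell
# Create a small UTF-8 test file (hypothetical name and content).
printf 'caf\303\251\n' > sample.txt

# -b: do not print the file name, -i: print the MIME type and charset.
file -bi sample.txt
```

For a file like this one, the output is a MIME type followed by a charset field, along the lines of text/plain; charset=utf-8.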

| Option | Description |
|---|---|
| -b, --brief | Don’t print filename (brief mode) |
| -i, --mime | Print filetype and encoding |

Example 1 : Detect the encoding of the file “storks.srt”

As you can see, “storks.srt” is reported as iso-8859-1.

Example 2 : Detect the encoding of the file “The.Girl.on.the.Train.2016.1080p.WEB-DL.DD5.1.H264-FGT.srt”

Here, the file “The.Girl.on.the.Train.2016.1080p.WEB-DL.DD5.1.H264-FGT.srt” is reported as utf-8.

Finally, keep in mind that the file command only makes a best guess. It recognizes ASCII and Unicode encodings such as UTF-8 and UTF-16 well, but it cannot reliably tell legacy 8-bit encodings (WINDOWS-1256, ISO-8859-6, GEORGIAN-ACADEMY, etc.) apart, so treat its answer for such files as a hint rather than a definitive result.

Part 2: Change a File’s Encoding using iconv Linux command

To use the iconv command, you need to know the current encoding of the text file you want to convert. Use the following syntax to convert the encoding of a file:
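The syntax line did not survive in this copy. A minimal sketch of the usual form, assuming a made-up input file latin1.txt, could look like this:

```shell
# General form: iconv -f FROM-ENCODING -t TO-ENCODING INPUT > OUTPUT

# Build a sample ISO-8859-1 file first (byte 0xE9 is "é" in ISO-8859-1).
printf 'caf\351\n' > latin1.txt

# Convert it to UTF-8 and write the result to a new file.
iconv -f ISO-8859-1 -t UTF-8 latin1.txt > utf8.txt

# Inspect the bytes: the single byte e9 should now be the pair c3 a9.
od -An -tx1 utf8.txt
```

Writing to a separate output file keeps the original intact, which is handy if the source encoding turns out to be a wrong guess.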

| Option | Description |
|---|---|
| -f, --from-code | Convert characters from encoding |
| -t, --to-code | Convert characters to encoding |

Example 1: Convert a file’s encoding from iso-8859-1 to UTF-8 and save it to New_storks.srt

New_storks.srt is now UTF-8 encoded.

Example 2: Convert a file’s encoding from cp1256 to UTF-8 and save it to output.txt

output.txt is now UTF-8 encoded.

Example 3: Convert a file’s encoding from ASCII to UTF-8 and save it to output.txt

output.txt is now UTF-8 encoded. Because ASCII is a subset of UTF-8, the bytes of the file do not change.

Example 4: Convert a file’s encoding from UTF-8 to ASCII

| Option | Description |
|---|---|
| -c | Omit invalid characters from output |
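The command for Example 4 is missing here. The following sketch shows what the -c option changes, using a made-up file utf8.txt; it is an illustration, not the author's original command.

```shell
# Sample UTF-8 input with two non-ASCII characters ("naïve café").
printf 'na\303\257ve caf\303\251\n' > utf8.txt

# Without -c, iconv stops at the first character that has no ASCII
# equivalent and exits with a non-zero status.
if ! iconv -f UTF-8 -t ASCII utf8.txt > ascii.txt 2>/dev/null; then
    echo "plain conversion failed"
fi

# With -c, characters that cannot be converted are dropped.
# Some iconv versions still exit non-zero after dropping characters,
# hence the "|| true".
iconv -c -f UTF-8 -t ASCII utf8.txt > ascii.txt || true
cat ascii.txt
```

Note that the result is lossy: the accented letters are simply gone from ascii.txt.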

Finally, to list all the coded character sets that iconv knows, run it with the -l option:
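The listing command is missing in this copy; based on the option table it would be along these lines. The grep filter is an addition for illustration.

```shell
# Print every charset name iconv knows (the list is long).
iconv -l

# Check whether one particular charset is supported; grep -i ignores case.
iconv -l | grep -i 'utf-8'
```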

| Option | Description |
|---|---|
| -l, --list | List known coded character sets |

Here’s the output of the above command:

Finally, I hope this article is useful for you.


HowTo: Check and Change File Encoding In Linux

Linux administrators who work with web hosting know how important it is to keep the correct character encoding of HTML documents.

In this article you’ll learn how to check a file’s encoding from the command line in Linux.

You will also find out how to convert text files between different charsets.

I’ll also show the most common examples of how to convert a file’s encoding between CP1251 (Windows-1251, Cyrillic), UTF-8, ISO-8859-1 and ASCII charsets.


Check a File’s Encoding

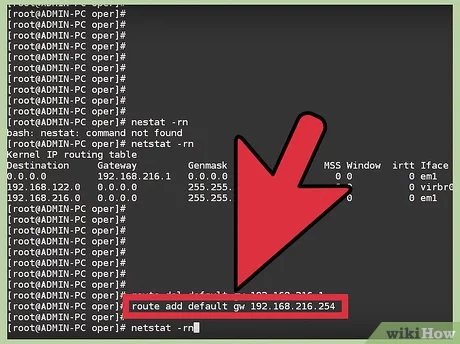

Use the following command to check what encoding is used in a file:

| Option | Description |
|---|---|
| -b, --brief | Don’t print filename (brief mode) |
| -i, --mime | Print filetype and encoding |

Check the encoding of the file in.txt :
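The command for this step is not preserved. A possible version, with a stand-in in.txt created on the spot, is sketched below; it also shows what the -b option changes.

```shell
# Stand-in for in.txt (hypothetical content, plain ASCII).
printf 'hello\n' > in.txt

file -i in.txt     # prefixes the result with the file name
file -bi in.txt    # brief mode: MIME type and charset only
```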

Change a File’s Encoding

Use the following command to change the encoding of a file:

| Option | Description |
|---|---|
| -f, --from-code | Convert a file’s encoding from charset |
| -t, --to-code | Convert a file’s encoding to charset |
| -o, --output | Specify output file (instead of stdout) |

Change a file’s encoding from CP1251 (Windows-1251, Cyrillic) charset to UTF-8 :

Change a file’s encoding from ISO-8859-1 charset to UTF-8 and save it to out.txt :

Change a file’s encoding from ASCII to UTF-8 :

Change a file’s encoding from UTF-8 charset to ASCII :
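The four commands announced above are missing from this copy. They can be sketched as follows; the input files and their contents are stand-ins invented for the example.

```shell
# Stand-in input files (hypothetical content).
printf '\317\360\350\342\345\362\n' > cyrillic.txt   # "Привет" in CP1251
printf 'caf\351\n' > in.txt                          # "café" in ISO-8859-1
printf 'plain text\n' > ascii.txt                    # pure ASCII
printf 'caf\303\251\n' > utf8.txt                    # "café" in UTF-8

# CP1251 -> UTF-8, printed to standard output.
iconv -f CP1251 -t UTF-8 cyrillic.txt

# ISO-8859-1 -> UTF-8, saved to out.txt with -o.
iconv -f ISO-8859-1 -t UTF-8 -o out.txt in.txt

# ASCII -> UTF-8 (the bytes stay the same, ASCII being a subset of UTF-8).
iconv -f ASCII -t UTF-8 ascii.txt

# UTF-8 -> ASCII fails for this input, because "é" has no ASCII equivalent.
iconv -f UTF-8 -t ASCII utf8.txt || echo "iconv reported an error"
```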

Illegal input sequence at position: Because UTF-8 can contain characters that cannot be encoded in ASCII, iconv will stop with the error message “illegal input sequence at position” unless you tell it to drop all non-ASCII characters with the -c option.

| Option | Description |
|---|---|
| -c | Omit invalid characters from the output |

You can lose characters: Note that if you use iconv with the -c option, characters that cannot be converted are lost.

A common case of broken encoding involves Windows machines that use Cyrillic.

You have copied some file from Windows to Linux, but when you open it in Linux, you see “Êàêèå-òî êðàêîçÿáðû” – WTF!?

Don’t panic – such strings can be easily converted from CP1251 (Windows-1251, Cyrillic) charset to UTF-8 with:
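The command is missing here. One way to repair such a string, assuming the snippet and the terminal both use UTF-8, is to undo the wrong decoding and then decode again with the right charset:

```shell
# The garbled text is CP1251 bytes that were wrongly decoded as ISO-8859-1.
# Step 1 turns the characters back into the original bytes,
# step 2 decodes those bytes with the correct charset.
printf '%s\n' 'Êàêèå-òî êðàêîçÿáðû' \
    | iconv -f UTF-8 -t ISO-8859-1 \
    | iconv -f CP1251 -t UTF-8
```

For this input the pipeline should print the readable Russian text Какие-то кракозябры. For a whole file that was never mis-decoded, a single iconv -f CP1251 -t UTF-8 step is enough.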

List All Charsets

List all the known charsets in your Linux system:

| Option | Description |
|---|---|
| -l, --list | List known charsets |

8 Replies to “HowTo: Check and Change File Encoding In Linux”

Thank you very much. Your recipe helped a lot!

I am running Linux Mint 18.1 with Cinnamon 3.2. I had some Czech characters in file names (e.g: Pešek.m4a). The š appeared as a ? and the filename included a warning about invalid encoding. I used convmv to convert the filenames (from iso-8859-1) to utf-8, but the š now appears as a different character (a square with 009A in it). I tried the file command you recommended, and got the answer that the charset was binary. How do I solve this? I would like to have the filenames include the correct utf-8 characters.

Thanks for your help–

Actually, there are two utilities for detecting an encoding. The first is file. It is good at identifying file types and Unicode encodings, but it stumbles on ASCII encodings. For example, it reports all of them as if they were iso-8859-1, which is not true. For those you need a different utility, enca. Unlike file, it works very well with ASCII encodings. I don’t know of a utility that handles both ASCII and Unicode equally well, but you can combine the two by writing your own. By the way, enca can also convert, but I don’t recommend it, because iconv is the best tool for that. It works well with all kinds of encodings and much more, with many variations, including BCD encodings such as EBCDIC (encodings from the 1970s and 80s, before DOS). Those systems are long gone, but plenty of files remain. I don’t know of anything better than iconv for conversion. I still think file does not identify ASCII encodings because no corresponding MIME types are registered for them. That is bad, because the ASCII encodings are the best ones.

There are many reasons for that, and I don’t know a single sensible reason to use the Unicode ones other than “the USA decided so”. They are pushed on everyone, especially UTF-8. It is the worst thing ever invented for encoding text! The main reason to use ASCII rather than UTF-8 is that using anything else never makes sense, even on the web. Want icons? Use symbol fonts, there are plenty of them. I see no problem. Why should I cater to Koreans, Arabs or Chinese? I don’t want to. Russian has always been enough for me, or English at a pinch. What do I need their lousy languages and encodings for? Now about ASCII. KOI8-R is a contrived encoding: the Russian letters are not in alphabetical order. Only two are sane, CP1251 and DOS866, depending on the purpose. For graphical use, CP1251 without question; for full pseudographics, nothing better than DOS866 has been invented. They are not ideal, but nearly. Another drawback of UTF-8 for Russian text is that every letter takes 2 bytes. Then there is the thing all Unicode encodings have, endianness: the order in which the bytes go, low byte first and then high (like addresses in memory, or letters in a written word), or the other way round, like digits in a number, high first and then low. And if a character takes 3, 4 or more bytes (up to 16 in UTF-8), the headaches grow geometrically! It is also slow, because each time the length of a character has to be computed by a fairly complicated algorithm. And we need none of this! Note, too, that their English letters are in order, nothing is skipped, and they all fit in a single byte. In other words, these are artificial hurdles that do not apply to the privileged Americans. It does not bother them at all. They sidestepped every problem at once by putting their own alphabet at the start of the table. But who gave them that right? Everyone else was pushed somewhere far away, the Chinese most of all. If you use CP1251, though, it works very fast, with no slowdowns or headaches, just like the English letters.

Beyond that it is a mess. Admittedly, these days we have to use UTF-8: there are no systems left whose system encoding is ASCII. They stopped making them, and all system files are in UTF-8. If you want ASCII, you have to convert all the time. It didn’t use to be that way. I hope our people will eventually build their own system without those American crutches.

Dear Anatoly, thank you very much for mentioning enca; it helped me a lot today. Your post is racist and strange, though evidently written out of real frustration.


How can I test the encoding of a text file. Is it valid, and what is it?

I have several .htm files which open in Gedit without any warning/error, but when I open these same files in Jedit , it warns me of invalid UTF-8 encoding.

The HTML meta tag states «charset=ISO-8859-1». Jedit allows a List of fallback encodings and a List of encoding auto-detectors (currently «BOM XML-PI»), so my immediate problem has been resolved. But this got me thinking about: What if the meta data wasn’t there?

When the encoding information is just not available, is there a CLI program which can make a «best-guess» of which encodings may apply?

And, although it is a slightly different issue; is there a CLI program which tests the validity of a known encoding?

4 Answers

The file command makes «best-guesses» about the encoding. Use the -i parameter to force file to print information about the encoding.

Here is how I created the files:

Nowadays everything is utf-8. But convince yourself:

Convert to the other encodings:

Check the hex dump:

Create something «invalid» by mixing all three:
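The commands and their output for the steps above are missing from this copy of the answer. A sketch of what they may have looked like follows; the umlaut-*.txt file names are assumptions, and od is used for the hex dump.

```shell
# Create a UTF-8 file holding a single "ä" (bytes c3 a4) and a newline.
printf '\303\244\n' > umlaut-utf8.txt

# Convert to the other encodings.
iconv -f UTF-8 -t ISO-8859-1 umlaut-utf8.txt > umlaut-iso88591.txt
iconv -f UTF-8 -t UTF-16 umlaut-utf8.txt > umlaut-utf16.txt

# Check the hex dump of each file.
for f in umlaut-utf8.txt umlaut-iso88591.txt umlaut-utf16.txt; do
    echo "$f:"
    od -An -tx1 "$f"
done

# Create something "invalid" by mixing all three.
cat umlaut-iso88591.txt umlaut-utf8.txt umlaut-utf16.txt > umlaut-mixed.txt

# Ask file for its best guess on every file, including the mixed one.
file -i umlaut-*.txt
```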

The file command has no idea of «valid» or «invalid». It just sees some bytes and tries to guess what the encoding might be. As humans we might be able to recognize that a file is a text file with some umlauts in a «wrong» encoding. But as a computer it would need some sort of artificial intelligence.

One might argue that the heuristics of file is some sort of artificial intelligence. Yet, even if it is, it is a very limited one.

Here is more information about the file command: http://www.linfo.org/file_command.html

It isn’t always possible to find out for sure what the encoding of a text file is. For example, the byte sequence \303\275 ( c3 bd in hexadecimal) could be ý in UTF-8, or Ã½ in latin1, or Ă˝ in latin2, or 羸 in BIG-5, and so on.
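The ambiguity is easy to reproduce with iconv. This sketch decodes the same two bytes under two of the encodings mentioned; the other encodings work the same way if your iconv supports them.

```shell
# The same two bytes, read as UTF-8: one character.
printf '\303\275' | iconv -f UTF-8 -t UTF-8
echo

# The same two bytes, read as ISO-8859-1 (latin1): two characters.
printf '\303\275' | iconv -f ISO-8859-1 -t UTF-8
echo
```

Both conversions succeed, which is exactly why a tool cannot tell from the bytes alone which reading was intended.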

Some encodings have invalid byte sequences, so it’s possible to rule them out for sure. This is true in particular of UTF-8; most texts in most 8-bit encodings are not valid UTF-8. You can test for valid UTF-8 with isutf8 from moreutils or with iconv -f utf-8 -t utf-8 >/dev/null , amongst others.
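As a sketch of the iconv-based check mentioned above, the helper below wraps it in a function. The function name and the two sample files are made up for the example.

```shell
# Succeeds (exit status 0) if the file decodes cleanly as UTF-8.
is_valid_utf8() {
    iconv -f UTF-8 -t UTF-8 "$1" > /dev/null 2>&1
}

printf 'gr\303\274n\n' > good.txt   # "grün" in UTF-8
printf 'gr\374n\n'     > bad.txt    # "grün" in ISO-8859-1, not valid UTF-8

for f in good.txt bad.txt; do
    if is_valid_utf8 "$f"; then
        echo "$f: valid UTF-8"
    else
        echo "$f: not valid UTF-8"
    fi
done
```

A successful check only means the bytes are well-formed UTF-8; it does not prove the author meant them as UTF-8.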

There are tools that try to guess the encoding of a text file. They can make mistakes, but they often work in practice as long as you don’t deliberately try to fool them.

- file

- Perl Encode::Guess (part of the standard distribution) tries successive encodings on a byte string and returns the first encoding in which the string is valid text.

- Enca is an encoding guesser and converter. You can give it a language name and text that you presume is in that language (the supported languages are mostly East European languages), and it tries to guess the encoding.
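The "try successive encodings" idea described for Encode::Guess can be imitated in the shell with iconv. This is only a rough sketch of the approach, not how any of the listed tools is implemented; the function name and the candidate list are assumptions.

```shell
# Print the first candidate encoding under which the file decodes without
# error. Order matters: a permissive 8-bit charset such as ISO-8859-1
# accepts any byte sequence, so it must come last.
guess_encoding() {
    for enc in ASCII UTF-8 ISO-8859-1; do
        if iconv -f "$enc" -t UTF-8 "$1" > /dev/null 2>&1; then
            echo "$enc"
            return 0
        fi
    done
    return 1
}

printf 'plain\n'        > a.txt
printf 'gr\303\274n\n'  > b.txt
printf 'gr\374n\n'      > c.txt

for f in a.txt b.txt c.txt; do
    echo "$f: $(guess_encoding "$f")"
done
```

The last candidate acts as a catch-all, so an answer of ISO-8859-1 really means "some 8-bit encoding", which mirrors the limitation of file noted elsewhere on this page.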

If there is metadata (HTML/XML charset= , TeX \inputenc , emacs -*-coding-*- , …) in the file, advanced editors like Emacs or Vim are often able to parse that metadata. That’s not easy to automate from the command line though.


enca(1) — Linux man page

enca — detect and convert encoding of text files

Synopsis

Introduction and Examples

If you are lucky enough, the only two things you will ever need to know are: command

enca FILE

will tell you which encoding file FILE uses (without changing it), and

enconv FILE

will convert file FILE to your locale native encoding. To convert the file to some other encoding use the -x option (see -x entry in section OPTIONS and sections CONVERSION and ENCODINGS for details).

Both work with multiple files and standard input (output) too. E.g.

enca -x latin2

-c, --auto-convert

Equivalent to calling Enca as enconv.

If no output type selector is specified, detect file encodings, guess your preferred charset from locales, and convert files to it (only available with +target-charset-auto feature).

-g, --guess

Equivalent to calling Enca as enca.

If no output type selector is specified, detect file encodings and report them.

Output type selectors

select what action Enca will take when it determines the encoding; most of them just choose between different names, formats and conventions how encodings can be printed, but one of them (-x) is special: it tells Enca to recode files to some other encoding ENC. These options are mutually exclusive; if you specify more than one output type selector the last one takes precedence.

Several output types represent the charset name used by some other program, but not all these programs know all the charsets which Enca recognises. Be warned, in such situations Enca makes no distinction between an unrecognised charset and a charset having no name in the given namespace.

-d, --details

It used to print a few pages of details about the guessing process, but since Enca is just a program linked against Enca library, this is not possible and this option is roughly equivalent to --human-readable, except it reports the failure reason when Enca doesn’t recognize the encoding.

-e, --enca-name

Prints Enca’s nice name of the charset, i.e., perhaps the most generally accepted and more or less human-readable charset identifier, with surfaces appended.

This name is used when calling an external converter, too.

-f, --human-readable

Prints verbal description of the detected charset and surfaces, something a human understands best. This is the default behaviour.

The precise format is following: the first line contains charset name alone, and it’s followed by zero or more indented lines containing names of detected surfaces. This format is not, however, suitable or intended for further machine-processing, and the verbal charset descriptions are likely to change in the future.

-i, --iconv-name

Prints how iconv(3) (and/or iconv(1)) calls the detected charset. More precisely, it prints one, more or less arbitrarily chosen, alias accepted by iconv. A charset unknown to iconv counts as unknown.

This output type makes sense only when Enca is compiled with iconv support (feature +iconv-interface).

-r, --rfc1345-name

Prints RFC 1345 charset name. When such a name doesn’t exist because RFC 1345 doesn’t define given encoding, some other name defined in some other RFC, or just the name which the author considers ‘the most canonical’, is printed.

Since RFC 1345 doesn’t define surfaces, no surface info is appended.

-m, --mime-name

Prints preferred MIME name of detected charset. This is the name you should normally use when fixing e-mails or web pages.

A charset not present in http://www.iana.org/assignments/character-sets counts as unknown.

-s, --cstocs-name

Prints how cstocs(1) calls the detected charset. A charset unknown to cstocs counts as unknown.

-n, --name=WORD

Prints charset (encoding) name selected by WORD (can be abbreviated as long as is unambiguous). For names listed above, --name=WORD is equivalent to --WORD.

Using aliases as the output type causes Enca to print list of all accepted aliases of detected charset.

-x, --convert-to=[..]ENC

Converts file to encoding ENC.

The optional ‘..’ before encoding name has no special meaning, except you can use it to remind yourself that, unlike in recode(1), you should specify desired encoding, instead of current.

You can use recode(1) recoding chains or any other kind of braindead recoding specification for ENC, provided that you tell Enca to use some tool understanding it for conversion (see section CONVERSION).

When Enca fails to determine the encoding, it prints a warning and leaves the file as is; when it is run as a filter it tries to do its best to copy standard input to standard output unchanged. Nevertheless, you should not rely on it and do backup.

Guessing parameters

There’s only one: -L setting language of input files. This option is mandatory (but see below).

-L, --language=LANG

Sets language of input files to LANG.

More precisely, LANG can be any valid locale name (or alias with +locale-alias feature) of some supported language. You can also specify ‘none’ as language name, only multibyte encodings are recognised then. Run

enca --list languages

to get list of supported languages. When you don’t specify any language Enca tries to guess your language from locale settings and assumes input files use this language. See section LANGUAGES for details.
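As a small illustration of combining -L with an output type selector, the helper below asks enca for the iconv-style name of a file's encoding. It assumes enca is installed and built with iconv support; the function name is made up, and cs is used only as an example of a locale name for a supported language.

```shell
# Print the iconv-style name of a file's encoding, telling enca the language.
# Usage: detect_encoding LANGUAGE FILE   (for example: detect_encoding cs notes.txt)
detect_encoding() {
    enca -L "$1" -i "$2"
}
```

The printed name can then be passed to iconv -f, which fits the division of labour suggested in the comments earlier on this page: enca to detect, iconv to convert.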

Conversion parameters

give you finer control of how charset conversion will be performed. They don’t affect anything when -x is not specified as output type. Please see section CONVERSION for the gory conversion details.

-C, --try-converters=LIST

Appends comma separated LIST to the list of converters that will be tried when you ask for conversion. Their names can be abbreviated as long as they are unambiguous. Run

enca --list converters

to get list of all valid converter names (and see section CONVERSION for their description).

The default list depends on how Enca has been compiled, run

to find out default converter list.

Note the default list is used only when you don’t specify -C at all. Otherwise, the list is built as if it were initially empty and every -C adds new converter(s) to it. Moreover, specifying none as converter name causes clearing the converter list.

-E, --external-converter-program=PATH

Sets external converter program name to PATH. Default external converter depends on how enca has been compiled, and the possibility to use external converters may not be available at all. Run

to find out default converter program in your enca build.

General options

don’t fit to other option categories.

-p, --with-filename

Forces Enca to prefix each result with corresponding file name. By default, Enca prefixes results with filenames when run on multiple files.

Standard input is printed as STDIN and standard output as STDOUT (the latter can be probably seen in error messages only).

-P, --no-filename

Forces Enca to not prefix results with file names. By default, Enca doesn’t prefix result with file name when run on a single file (including standard input).

-V, --verbose

Increases verbosity level (each use increases it by one).

Currently this option is not very useful because different parts of Enca respond differently to the same verbosity level, mostly not at all.

Listings

are all terminal, i.e. when Enca encounters some of them it prints the required listing and terminates without processing any following options.

-h, --help

Prints brief usage help.

-G, --license

Prints full Enca license (through a pager, if possible).

-l, --list=WORD

Prints list specified by WORD (can be abbreviated as long as it is unambiguous). Available lists include:

built-in-charsets. All encodings convertible by built-in converter, by group (both input and output encoding must be from this list and belong to the same group for internal conversion).

built-in-encodings. Equivalent to built-in-charsets, but considered obsolete; will be accepted with a warning, for a while.

converters. All valid converter names (to be used with -C).

charsets. All encodings (charsets). You can select what names will be printed with --name or any name output type selector (of course, only encodings having a name in given namespace will be printed then), the selector must be specified before --list.

encodings. Equivalent to charsets, but considered obsolete; will be accepted with a warning, for a while.

languages. All supported languages together with charsets belonging to them. Note output type selects language name style, not charset name style here.

names. All possible values of --name option.

lists. All possible values of this option. (Crazy?)

surfaces. All surfaces Enca recognises.

-v, --version

Prints program version and list of features (see section FEATURES).

Conversion

Though Enca has been originally designed as a tool for guessing encoding only, it now features several methods of charset conversion. You can control which of them will be used with -C.

Enca sequentially tries converters from the list specified by -C until it finds some that is able to perform required conversion or until it exhausts the list. You should specify preferred converters first, less preferred later. External converter (extern) should be always specified last, only as last resort, since it’s usually not possible to recover when it fails. The default list of converters always starts with built-in and then continues with the first one available from: librecode, iconv, nothing.

It should be noted when Enca says it is not able to perform the conversion it only means none of the converters is able to perform it. It can be still possible to perform the required conversion in several steps, using several converters, but to figure out how, human intelligence is probably needed.

Built-in converter

is the simplest and by far the fastest of all, can perform only a few byte-to-byte conversions and modifies files directly in place (may be considered dangerous, but is pretty efficient). You can get list of all encodings it can convert with

enca --list built-in-charsets

Beside speed, its main advantage (and also disadvantage) is that it doesn’t care: it simply converts characters having a representation in target encoding, doesn’t touch anything else and never prints any error message.

This converter can be specified as built-in with -C.

Librecode converter

is an interface to GNU recode library, that does the actual recoding job. It may or may not be compiled in; run

enca --version

to find out its availability in your enca build (feature +librecode-interface).

You should be familiar with recode(1) before using it, since recode is a quite sophisticated and powerful charset conversion tool. You may run into problems using it together with Enca particularly because Enca’s support for surfaces is not 100% compatible, because recode tries too hard to make the transformation reversible, because it sometimes silently ignores I/O errors, and because it’s incredibly buggy. Please see GNU recode info pages for details about recode library.

This converter can be specified as librecode with -C.

Iconv converter

is an interface to the UNIX98 iconv(3) conversion functions, that do the actual recoding job. It may or may not be compiled in; run

enca --version

to find out its availability in your enca build (feature +iconv-interface).

While iconv is present on most of today’s systems, it only rarely offers a useful set of available conversions, the only notable exception being iconv from GNU libc. It is usually quite picky about surfaces, too (while, at the same time, not implementing surface conversion). It however probably represents the only standard(ized) tool able to perform conversion from/to Unicode. Please see iconv documentation for details about its capabilities on your particular system.

This converter can be specified as iconv with -C.

External converter

is an arbitrary external conversion tool that can be specified with -E option (at most one can be defined simultaneously). There are some standard, provided together with enca: cstocs, recode, map, umap, and piconv. All are wrapper scripts: for cstocs(1), recode(1), map(1), umap(1), and piconv(1).

Please note enca has little control what the external converter really does. If you set it to /bin/rm you are fully responsible for the consequences.

If you want to make your own converter to use with enca, you should know it is always called

CONVERTER ENC_CURRENT ENC FILE [-]

where CONVERTER is what has been set by -E, ENC_CURRENT is detected encoding, ENC is what has been specified with -x, and FILE is the file to convert, i.e. it is called for each file separately. The optional fourth parameter, -, should cause (when present) sending result of conversion to standard output instead of overwriting the file FILE. The converter should also take care of not changing file permissions, returning error code 1 when it fails and cleaning its temporary files. Please see the standard external converters for examples.
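To make the calling convention concrete, here is a minimal sketch of such a converter built around iconv. It is not one of the standard wrappers shipped with enca; the function name is hypothetical, and the argument order (current encoding, target encoding, file, optional fourth parameter) is taken from the description above.

```shell
# Hypothetical external converter wrapping iconv.
# Arguments: $1 = detected encoding, $2 = target encoding, $3 = file,
#            $4 = optional; when present, write to standard output instead.
convert_with_iconv() {
    from=$1 to=$2 file=$3
    tmp=$(mktemp) || return 1

    if ! iconv -f "$from" -t "$to" "$file" > "$tmp"; then
        rm -f "$tmp"          # clean up the temporary file on failure
        return 1              # error code 1, as the text above asks
    fi

    if [ "$#" -ge 4 ]; then
        cat "$tmp"            # fourth parameter present: result goes to stdout
    else
        cat "$tmp" > "$file"  # rewrite the contents in place; the file itself
    fi                        # is not replaced, so its permissions are kept
    status=$?
    rm -f "$tmp"
    [ "$status" -eq 0 ] || return 1
}
```

To use it as a standalone script, one would end the file with convert_with_iconv "$@" and point -E at it. Converting into a temporary file first means a failed conversion never leaves FILE half-written.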

This converter can be specified as extern with -C.

Default target charset

The straightforward way of specifying target charset is the -x option, which overrides any defaults. When Enca is called as enconv, default target charset is selected exactly the same way as recode(1) does it.

If the DEFAULT_CHARSET environment variable is set, it’s used as the target charset.

Otherwise, if your system provides the nl_langinfo(3) function, current locale’s native charset is used as the target charset.

When both methods fail, Enca complains and terminates.
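The selection order just described can be mirrored in a few lines of shell. This is an illustration of the rule, not Enca's code; the function name is made up, and locale charmap stands in for nl_langinfo(3).

```shell
# Pick a target charset the way the text describes for enconv:
# DEFAULT_CHARSET first, then the locale's native charset, else give up.
default_target_charset() {
    if [ -n "${DEFAULT_CHARSET:-}" ]; then
        echo "$DEFAULT_CHARSET"
    elif charset=$(locale charmap 2>/dev/null) && [ -n "$charset" ]; then
        echo "$charset"       # shell counterpart of nl_langinfo(CODESET)
    else
        echo "cannot determine a default target charset" >&2
        return 1
    fi
}

default_target_charset
```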

Reversibility notes

If reversibility is crucial for you, you shouldn’t use enca as converter at all (or maybe you can, with very specifically designed recode(1) wrapper). Otherwise you should at least know that there are four basic means of handling inconvertible character entities:

fail: this is a possibility, too, and incidentally it’s exactly what current GNU libc iconv implementation does (recode can be also told to do it)

don’t touch them: this is what enca internal converter always does and recode can do; though it is not reversible, a human being is usually able to reconstruct the original (at least in principle)

approximate them: this is what cstocs can do, and recode too, though differently; and the best choice if you just want to make the accursed text readable

drop them out: this is what both recode and cstocs can do (cstocs can also replace these characters by some fixed character instead of mere ignoring); useful when the to-be-omitted characters contain only noise.

Please consult your favourite converter manual for details of this issue. Generally, if you are not lucky enough to have all convertible characters in your file, manual intervention is needed anyway.
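Whether a particular conversion is reversible can be checked with a round trip. The sketch below uses iconv directly (not enca) and made-up file names to contrast a lossless conversion with the "fail" and "drop them out" behaviours.

```shell
printf 'caf\303\251\n' > original.txt   # "café" in UTF-8

# Lossless case: every character exists in ISO-8859-1, so the round trip
# reproduces the original bytes.
iconv -f UTF-8 -t ISO-8859-1 original.txt | iconv -f ISO-8859-1 -t UTF-8 > lossless.txt
cmp -s original.txt lossless.txt && echo "ISO-8859-1 round trip: identical"

# "fail": plain iconv refuses to produce ASCII for this input.
iconv -f UTF-8 -t ASCII original.txt > /dev/null 2>&1 || echo "ASCII conversion failed"

# "drop them out": -c discards what cannot be converted
# (some iconv versions still exit non-zero here, hence "|| true") ...
iconv -c -f UTF-8 -t ASCII original.txt > dropped.txt || true

# ... so converting back cannot restore the original.
iconv -f ASCII -t UTF-8 dropped.txt > lossy.txt
cmp -s original.txt lossy.txt || echo "ASCII round trip with -c: characters lost"
```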

Performance notes

Poor performance of available converters has been one of the main reasons for including the built-in converter in enca. Try to use it whenever possible, i.e. when files in consideration are charset-clean enough or charset-messy enough so that its zero built-in intelligence doesn’t matter. It requires no extra disk space nor extra memory and can outperform recode(1) more than 10 times on large files and Perl version (i.e. the faster one) of cstocs(1) more than 400 times on small files (in fact it’s almost as fast as mere cp(1)).

Try to avoid external converters when it’s not absolutely necessary since all the forking and moving stuff around is incredibly slow.

Encodings

You can get list of recognised character sets with

enca --list charsets

and using --name parameter you can select any name you want to be used in the listing. You can also list all surfaces with

enca --list surfaces

Encoding and surface names are case insensitive and non-alphanumeric characters are not taken into account. However, non-alphanumeric characters are mostly not allowed at all. The only allowed are: ‘-‘, ‘_’, ‘.’, ‘:’, and ‘/’ (as charset/surface separator). So ‘ibm852’ and ‘IBM-852’ are the same, while ‘IBM 852’ is not accepted.
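The naming rule can be pictured with two small helpers. They only illustrate the comparison and the allowed characters as described above, for names without a surface part; they are not Enca's implementation, and both function names are made up.

```shell
# Reduce a charset name to the form compared in this sketch:
# lower-case letters and digits only.
normalize_charset_name() {
    printf '%s' "$1" | tr -cd '[:alnum:]' | tr '[:upper:]' '[:lower:]'
}

# Accept only names built from letters, digits and - _ . : /
is_acceptable_charset_name() {
    case "$1" in
        ''|*[!A-Za-z0-9_.:/-]*) return 1 ;;
        *) return 0 ;;
    esac
}

[ "$(normalize_charset_name 'IBM-852')" = "$(normalize_charset_name 'ibm852')" ] \
    && echo "IBM-852 and ibm852 name the same charset"
is_acceptable_charset_name 'IBM 852' || echo "'IBM 852' is not accepted"
```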

Charsets

Following list of recognised charsets uses Enca’s names (-e) and verbal descriptions as reported by Enca (-f):

| Enca name | Description |
|---|---|
| ASCII | 7bit ASCII characters |
| ISO-8859-2 | ISO 8859-2 standard; ISO Latin 2 |
| ISO-8859-4 | ISO 8859-4 standard; Latin 4 |
| ISO-8859-5 | ISO 8859-5 standard; ISO Cyrillic |
| ISO-8859-13 | ISO 8859-13 standard; ISO Baltic; Latin 7 |
| ISO-8859-16 | ISO 8859-16 standard |
| CP1125 | MS-Windows code page 1125 |
| CP1250 | MS-Windows code page 1250 |
| CP1251 | MS-Windows code page 1251 |
| CP1257 | MS-Windows code page 1257; WinBaltRim |
| IBM852 | IBM/MS code page 852; PC (DOS) Latin 2 |
| IBM855 | IBM/MS code page 855 |
| IBM775 | IBM/MS code page 775 |
| IBM866 | IBM/MS code page 866 |
| baltic | ISO-IR-179; Baltic |
| KEYBCS2 | Kamenicky encoding; KEYBCS2 |
| macce | Macintosh Central European |
| maccyr | Macintosh Cyrillic |
| ECMA-113 | Ecma Cyrillic; ECMA-113 |
| KOI-8_CS_2 | KOI8-CS2 code (‘T602’) |
| KOI8-R | KOI8-R Cyrillic |
| KOI8-U | KOI8-U Cyrillic |
| KOI8-UNI | KOI8-Unified Cyrillic |
| TeX | (La)TeX control sequences |
| UCS-2 | Universal character set 2 bytes; UCS-2; BMP |
| UCS-4 | Universal character set 4 bytes; UCS-4; ISO-10646 |
| UTF-7 | Universal transformation format 7 bits; UTF-7 |
| UTF-8 | Universal transformation format 8 bits; UTF-8 |
| CORK | Cork encoding; T1 |
| GBK | Simplified Chinese National Standard; GB2312 |
| BIG5 | Traditional Chinese Industrial Standard; Big5 |
| HZ | HZ encoded GB2312 |
| unknown | Unrecognized encoding |

where unknown is not any real encoding, it’s reported when Enca is not able to give a reliable answer.

Surfaces

Enca has some experimental support for so-called surfaces (see below). It detects following surfaces (not all can be applied to all charsets):

| Identifier | Description |
|---|---|
| /CR | CR line terminators |
| /LF | LF line terminators |
| /CRLF | CRLF line terminators |
| N.A. | Mixed line terminators |
| N.A. | Surrounded by/intermixed with non-text data |
| /21 | Byte order reversed in pairs (1,2 -> 2,1) |
| /4321 | Byte order reversed in quadruples (1,2,3,4 -> 4,3,2,1) |
| N.A. | Both little and big endian chunks, concatenated |
| /qp | Quoted-printable encoded |

Note some surfaces have N.A. in place of identifier: they cannot be specified on command line, they can only be reported by Enca. This is intentional because they only inform you why the file cannot be considered surface-consistent instead of representing a real surface.

Each charset has its natural surface (called ‘implied’ in recode) which is not reported, e.g., for IBM 852 charset it’s ‘CRLF line terminators’. For UCS encodings, big endian is considered as natural surface; unusual byte orders are constructed from 21 and 4321 permutations: 2143 is reported simply as 21, while 3412 is reported as combination of 4321 and 21.
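The byte-order surfaces are easy to see in a hex dump. This sketch uses iconv and dd rather than enca, and UTF-16BE as a stand-in for big-endian UCS-2 (the two agree for a character like "A").

```shell
# "A" in big-endian two-byte form: 00 41.
printf 'A' | iconv -f ASCII -t UTF-16BE | od -An -tx1

# The same data with the bytes of each pair swapped (1,2 -> 2,1): 41 00.
printf 'A' | iconv -f ASCII -t UTF-16BE | dd conv=swab 2>/dev/null | od -An -tx1
```

The swapped form is what a little-endian writer would have produced, which is the situation the /21 identifier describes.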

Doubly-encoded UTF-8 is neither a charset nor a surface; it is simply reported.

About charsets, encodings and surfaces

A charset is a set of character entities, while an encoding is its representation in terms of bytes and bits. In Enca, the word encoding means the same as ‘representation of text’, i.e. the relation between the sequence of character entities constituting the text and the sequence of bytes (bits) constituting the file.

So, encoding is both character set and so-called surface (line terminators, byte order, combining, Base64 transformation, etc.). Nevertheless, it proves convenient to work with some

The only good thing about surfaces is this: if you don’t start playing with them, Enca won’t either, and it will try to behave as much like a surface-unaware program as possible, even when talking to recode.

Languages

Enca needs to know the language of input files to work reliably, at least in case of regular 8bit encoding. Multibyte encodings should be recognised for any Latin, Cyrillic or Greek language.
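A short sketch shows why the language matters for 8bit encodings: the same bytes are valid text in several of them, so only statistics about a particular language can tell which reading is plausible. The function name below is hypothetical, and the codec names are Python’s own (cp1251, koi8_r, iso8859_5 and cp866 correspond to CP1251, KOI8-R, ISO-8859-5 and IBM866); nothing here uses Enca itself.

```python
def decode_candidates(raw: bytes, codecs):
    """Decode the same bytes under each candidate 8-bit codec."""
    return {name: raw.decode(name) for name in codecs}


if __name__ == "__main__":
    # Russian "Привет" stored as CP1251 bytes.
    raw = "Привет".encode("cp1251")
    for name, text in decode_candidates(
        raw, ["cp1251", "koi8_r", "iso8859_5", "cp866"]
    ).items():
        # Every codec decodes without error, but only one result is Russian.
        print(f"{name:10} {text!r}")
```

None of the decodings fails, which is why a detector cannot rely on validity alone and has to compare the result against what text in the given language usually looks like.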

You can (or have to) use the -L option to tell Enca the language. Since people most often work with files in the same language for which they have configured locales, Enca tries to guess the language by examining the value of LC_CTYPE and other locale categories (please see locale(7)) and uses it when you don’t specify any. Of course, the guess may be completely wrong, in which case Enca will give you nonsense answers and damage your files, so please don’t forget to use the -L option. You can also use the ENCAOPT environment variable to set a default language (see section ENVIRONMENT).
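The locale-based guess can be pictured roughly as follows. This is only a sketch under assumptions: the function name is hypothetical, the lookup order LC_ALL, LC_CTYPE, LANG follows the usual POSIX precedence, and Enca’s real logic (which also consults other locale categories) may differ.

```python
import os
import re


def guess_language(env):
    """Return a language code such as 'cs' from locale variables, or None.

    Assumes locale values of the form language[_TERRITORY][.CODESET][@modifier].
    """
    for name in ("LC_ALL", "LC_CTYPE", "LANG"):
        value = env.get(name, "")
        if not value:
            continue
        # Keep only the part before the territory, codeset or modifier.
        language = re.split(r"[_.@]", value, maxsplit=1)[0]
        if language in ("C", "POSIX"):
            return None  # the portable locale says nothing about language
        return language.lower()
    return None


if __name__ == "__main__":
    print(guess_language(os.environ))
```

When the function returns None, or returns a language different from that of the files, passing -L explicitly is the only reliable choice.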

The following languages are supported by Enca (each language is listed together with its supported 8bit encodings).

| Belarussian | CP1251 IBM866 ISO-8859-5 KOI8-UNI maccyr IBM855 |

| Bulgarian | CP1251 ISO-8859-5 IBM855 maccyr ECMA-113 |

| Czech | ISO-8859-2 CP1250 IBM852 KEYBCS2 macce KOI-8_CS_2 CORK |

| Estonian | ISO-8859-4 CP1257 IBM775 ISO-8859-13 macce baltic |

| Croatian | CP1250 ISO-8859-2 IBM852 macce CORK |

| Hungarian | ISO-8859-2 CP1250 IBM852 macce CORK |

| Lithuanian | CP1257 ISO-8859-4 IBM775 ISO-8859-13 macce baltic |

| Latvian | CP1257 ISO-8859-4 IBM775 ISO-8859-13 macce baltic |

| Polish | ISO-8859-2 CP1250 IBM852 macce ISO-8859-13 ISO-8859-16 baltic CORK |

| Russian | KOI8-R CP1251 ISO-8859-5 IBM866 maccyr |

| Slovak | CP1250 ISO-8859-2 IBM852 KEYBCS2 macce KOI-8_CS_2 CORK |

| Slovene | ISO-8859-2 CP1250 IBM852 macce CORK |

| Ukrainian | CP1251 IBM855 ISO-8859-5 CP1125 KOI8-U maccyr |

| Chinese | GBK BIG5 HZ |

| none |

The special language none can be shortened to __; it contains no 8bit encodings, so only multibyte encodings are detected.

Features

Several of Enca’s features depend on what is available on your system and how it was compiled. You can get their list with enca --version.

Plus sign before a feature name means it’s available, minus sign means this build lacks the particular feature.

librecode-interface. Enca has interface to GNU recode library charset conversion functions.

iconv-interface. Enca has interface to UNIX98 iconv charset conversion functions.

external-converter. Enca can use external conversion programs (if you have some suitable installed).

language-detection. Enca tries to guess language (-L) from locales. You don’t need the --language option, at least in principle.

locale-alias. Enca is able to resolve locale aliases used for language names.

target-charset-auto. Enca tries to detect your preferred charset from locales. Option --auto-convert and calling Enca as enconv works, at least in principle.

ENCAOPT. Enca is able to correctly parse this environment variable before command line parameters. Simple stuff like ENCAOPT="-L uk" will work even without this feature.
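A script that depends on one of these features could check for it first. The sketch below is hypothetical: it assumes the feature list is available as text in which each feature name is a whitespace-separated token prefixed by + or -, and the sample string is made up for illustration rather than copied from a real build.

```python
import re


def parse_features(text: str) -> dict:
    """Map each +name / -name token to True (available) or False (missing)."""
    # A token counts only if the sign starts a whitespace-delimited word.
    tokens = re.findall(r"(?<!\S)([+-])([A-Za-z][A-Za-z0-9-]*)", text)
    return {name: sign == "+" for sign, name in tokens}


if __name__ == "__main__":
    # Hypothetical sample; a real build prints its own list.
    sample = "Features: +iconv-interface -librecode-interface +ENCAOPT"
    features = parse_features(sample)
    if not features.get("librecode-interface", False):
        print("this build cannot convert through librecode")
```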

Environment

The variable ENCAOPT can hold a set of default Enca options. Its content is interpreted before command line arguments. Unfortunately, this doesn’t work everywhere (the build must have the +ENCAOPT feature).

LC_CTYPE, LC_COLLATE and LC_MESSAGES (possibly inherited from LC_ALL or LANG) are used for guessing your language (the build must have the +language-detection feature).

The variable DEFAULT_CHARSET can be used by enconv as the default target charset.

Diagnostics

Enca returns exit code 0 when all input files were successfully processed (i.e. all encodings were detected and all files were converted to the required encoding, if conversion was asked for).

Exit code 1 is returned when Enca wasn’t able to either guess the encoding or perform the conversion on any input file because it’s not clever enough.

Exit code 2 is returned in case of serious (e.g. I/O) troubles.
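A wrapper script can branch on these exit codes. The following is a minimal sketch: the function names and the status labels are hypothetical, and the only Enca behaviour it relies on is the -L option and the three exit codes described above.

```python
import subprocess

# Labels are made up for this sketch; the numbers come from the text above.
EXIT_MEANINGS = {
    0: "ok",
    1: "undetected-or-unconverted",
    2: "serious-error",
}


def classify_exit(code: int) -> str:
    """Translate an Enca exit code into a short status label."""
    return EXIT_MEANINGS.get(code, "unexpected")


def detect(path: str, language: str, run=subprocess.run):
    """Run `enca -L LANGUAGE PATH` and return (status, trimmed stdout).

    `run` is injectable so the function can be exercised without Enca.
    """
    proc = run(["enca", "-L", language, path], capture_output=True, text=True)
    return classify_exit(proc.returncode), proc.stdout.strip()
```

Treating anything other than "ok" as a reason not to touch the file is the cautious default for unattended use.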

Security

It should be possible to let Enca work unattended; that is its goal. However:

There’s no warranty the detection works 100%. Don’t bet on it, you can easily lose valuable data.

Don’t use enca (the program), link to libenca instead if you want anything resembling security. You have to perform the eventual conversion yourself then.

Don’t use external converters. Ideally, disable them at compile time.

Be aware of ENCAOPT and all the built-in automagic guessing various things from environment, namely locales.
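One way to limit the environment-driven guessing when calling the program from a script is to start it with a reduced environment and an explicit language. The helper below is a hypothetical sketch; it simply drops the variables named in this manual (ENCAOPT, DEFAULT_CHARSET, LANG and the LC_* family) and does not make the program itself any safer.

```python
import os


def scrubbed_env(env):
    """Copy env without ENCAOPT, DEFAULT_CHARSET, LANG and LC_* variables."""
    dropped = {"ENCAOPT", "DEFAULT_CHARSET", "LANG"}
    return {
        key: value
        for key, value in env.items()
        if key not in dropped and not key.startswith("LC_")
    }


if __name__ == "__main__":
    clean = scrubbed_env(os.environ)
    # The result can be passed as env= to subprocess.run together with an
    # explicit -L option, so that nothing is guessed from locales.
    print(sorted(set(os.environ) - set(clean)))
```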

See Also

Known Bugs

It has too many unknown bugs.

The idea of using LC_* value for language is certainly braindead. However I like it.

It can’t backup files before mangling them.

In certain situations, it may behave incorrectly on >31bit file systems and/or over NFS (both untested but shouldn’t cause problems in practice).

Built-in converter does not convert character ‘ch’ from KOI8-CS2, and possibly some other characters you’ve probably never heard about anyway.

EOL type recognition works poorly on Quoted-printable encoded files. This should be fixed someday.

There are no command line options to tune libenca parameters. This is intentional (Enca should DWIM) but sometimes this is a nuisance.

The manual page is too long, especially this section. This doesn’t matter since nobody does read it.

Send bug reports to .

Trivia

Enca is Extremely Naive Charset Analyser. Nevertheless, the ‘enc’ originally comes from ‘encoding’ so the leading ‘e’ should be read as in ‘encoding’ not as in ‘extreme’.

Authors

David Necas (Yeti)

Unicode data has been generated from various (free) on-line resources or using GNU recode. Statistical data has been generated from various texts on the Net, I hope character counting doesn’t break anyone’s copyright.

Acknowledgements

Please see the file THANKS in distribution.

Copyright

Copyright © 2000-2003 David Necas (Yeti).

Copyright © 2009 Michal Cihar.

Enca is free software; you can redistribute it and/or modify it under the terms of version 2 of the GNU General Public License as published by the Free Software Foundation.

Enca is distributed in the hope that it will be useful, but WITHOUT ANY WARRANTY; without even the implied warranty of MERCHANTABILITY or FITNESS FOR A PARTICULAR PURPOSE. See the GNU General Public License for more details.

You should have received a copy of the GNU General Public License along with Enca; if not, write to the Free Software Foundation, Inc., 675 Mass Ave, Cambridge, MA 02139, USA.
