- How to download a file with curl on Linux/Unix command line

- How to download a file with curl command

- Installing curl on Linux or Unix

- Verify installation by displaying curl version

- Downloading files with curl

- Resuming interrupted downloads with curl

- How to get a single file without giving output name

- Dealing with HTTP 301 redirected file

- Downloading multiple files or URLs using curl

- Grab a password protected file with curl

- Downloading file using a proxy server

- Examples

- Getting HTTP headers information without downloading files

- How do I skip SSL checks when using curl?

- Rate limiting download/upload speed

- Setting up user agent

- Upload files with CURL

- Make curl silent

- Conclusion

- 5 Linux Command Line Based Tools for Downloading Files and Browsing Websites

- 1. rTorrent

- Installation of rTorrent in Linux

- 2. Wget

- Installation of Wget in Linux

- Basic Usage of Wget Command

- 3. cURL

- Installation of cURL in Linux

- Basic Usage of cURL Command

- 4. w3m

- Installation of w3m in Linux

- Basic Usage of w3m Command

- 5. Elinks

- Installation of Elinks in Linux

- Basic Usage of elinks Command


How to download a file with curl on Linux/Unix command line

How to download a file with curl command

The basic syntax:

- To grab a file with curl, run: curl https://your-domain/file.pdf

- To get a file over the FTP or SFTP protocol, run: curl ftp://ftp-your-domain-name/file.tar.gz

- To set the output file name while downloading a file, run: curl -o file.pdf https://your-domain-name/long-file-name.pdf

- To follow a 301 redirect while downloading a file, run: curl -L -o file.tgz http://www.cyberciti.biz/long.file.name.tgz

Let us look at some examples of using curl to download and upload files on Linux or Unix-like systems.

Installing curl on Linux or Unix

curl is installed by default on many Linux distros and Unix-like systems. If it is missing, install it as follows:

## Debian/Ubuntu Linux use the apt command/apt-get command ##

$ sudo apt install curl

## Fedora/CentOS/RHEL users try dnf command/yum command ##

$ sudo dnf install curl

## OpenSUSE Linux users try zypper command ##

$ sudo zypper install curl

Verify installation by displaying curl version

Type:

$ curl --version

The command prints the installed curl version, along with the protocols and features it supports.

Downloading files with curl

The command syntax is:

curl url --output filename

curl https://url -o output.file.name

Let us try to download a file from https://www.cyberciti.biz/files/sticker/sticker_book.pdf and save it as output.pdf

curl https://www.cyberciti.biz/files/sticker/sticker_book.pdf -o output.pdf

OR

curl https://www.cyberciti.biz/files/sticker/sticker_book.pdf --output output.pdf

The -o or --output option allows you to give the downloaded file a different name. If you do not provide an output file name, curl writes the downloaded data to the screen. Let us say you type:

curl --output file.html https://www.cyberciti.biz

curl displays a progress meter while the transfer runs.

The output shows useful information such as:

- Total : Total size of the whole expected transfer (if known)

- Received : Number of bytes downloaded so far

- Xferd : Number of bytes uploaded so far

- Average Speed Dload : Average transfer speed of the entire download so far, in bytes per second

- Average Speed Upload : Average transfer speed of the entire upload so far, in bytes per second

- Time Total : Expected time to complete the operation, in HH:MM:SS notation for hours, minutes and seconds

- Time Spent : Time passed since the start of the transfer, in HH:MM:SS notation for hours, minutes and seconds

- Time Left : Expected time left to completion, in HH:MM:SS notation for hours, minutes and seconds

- Current Speed : Average transfer speed over the last 5 seconds (the first 5 seconds of a transfer is based on less time, of course), in bytes per second

The % column in front of Total, Received and Xferd shows the percentage completed for each.

Resuming interrupted downloads with curl

Pass -C - to tell curl to find out automatically where and how to resume the transfer. It then uses the given output/input files to figure that out:

## Restarting an interrupted download is an important task too ##

curl -C - --output bigfilename https://url/file
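On an unreliable connection, the resume command can be wrapped in a retry loop. The following is a minimal sketch, not something from the curl documentation: the function name resume_download, the default of 5 attempts, and the 5-second pause are all assumptions chosen for illustration.

```shell
# resume_download URL OUTPUT [MAX_TRIES]
# Hypothetical helper: re-run "curl -C -" until the transfer succeeds
# or MAX_TRIES attempts (default 5, an arbitrary choice) have failed.
resume_download() {
  local url="$1" out="$2" max_tries="${3:-5}" try=1
  while [ "$try" -le "$max_tries" ]; do
    # -C - lets curl look at the existing output file and continue from there.
    if curl -C - --output "$out" "$url"; then
      return 0
    fi
    echo "attempt $try of $max_tries failed" >&2
    if [ "$try" -lt "$max_tries" ]; then
      sleep 5   # arbitrary pause before the next attempt
    fi
    try=$((try + 1))
  done
  return 1
}

# Example call with a placeholder URL:
# resume_download https://url/file bigfilename
```

The function returns 0 as soon as one curl run completes, and 1 if every attempt fails, so a calling script can test its exit status.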

How to get a single file without giving output name

You can save the output file as it is, i.e. write the output to a local file named like the remote file. For example, sticker_book.pdf is the file name in the remote URL https://www.cyberciti.biz/files/sticker/sticker_book.pdf. You can save it as sticker_book.pdf directly, without specifying the -o or --output option, by passing the -O (capital O) option:

curl -O https://www.cyberciti.biz/files/sticker/sticker_book.pdf


Dealing with HTTP 301 redirected file

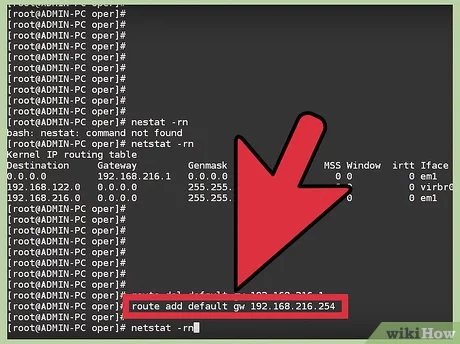

The remote HTTP server may respond with a redirect to a different location when you download a file. For example, HTTP URLs are often redirected to HTTPS URLs with an HTTP 301 status code. Pass the -L option to follow 301 (3xx) redirects and get the final file on your system:

curl -L -O http://www.cyberciti.biz/files/sticker/sticker_book.pdf

Downloading multiple files or URLs using curl

Try:

curl -O url1 -O url2

curl -O https://www.cyberciti.biz/files/adduser.txt \
     -O https://www.cyberciti.biz/files/test-lwp.pl.txt

One can use the bash for loop too:
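A minimal sketch of such a loop, assuming the URLs are passed as arguments. The function name download_all is hypothetical, and the -L option is added here only so that redirected URLs still resolve.

```shell
# download_all URL...
# Fetch every URL given as an argument, saving each under its remote file name.
download_all() {
  local url failed=0
  for url in "$@"; do
    # -L follows redirects, -O keeps the remote file name.
    if ! curl -L -O "$url"; then
      echo "failed: $url" >&2
      failed=1
    fi
  done
  # Non-zero if at least one download failed.
  return "$failed"
}

# Example call, using the two files shown earlier:
# download_all https://www.cyberciti.biz/files/adduser.txt \
#              https://www.cyberciti.biz/files/test-lwp.pl.txt
```

The loop keeps going after a failure, so one bad URL does not stop the remaining downloads.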


Grab a password protected file with curl

Try any one of the following syntaxes:

curl ftp://username:passwd@ftp1.cyberciti.biz:21/path/to/backup.tar.gz

curl --ftp-ssl -u UserName:PassWord ftp://ftp1.cyberciti.biz:21/backups/07/07/2012/mysql.blog.sql.tar.gz

curl https://username:passwd@server1.cyberciti.biz/file/path/data.tar.gz

curl -u Username:Password https://server1.cyberciti.biz/file/path/data.tar.gz

Downloading file using a proxy server

Again, the syntax is as follows:

curl -x proxy-server-ip:PORT -O url

curl -x 'http://vivek:YourPasswordHere@10.12.249.194:3128' -v -O https://dl.cyberciti.biz/pdfdownloads/b8bf71be9da19d3feeee27a0a6960cb3/569b7f08/cms/631.pdf


Examples

The curl command can provide useful information, especially HTTP headers, which helps when debugging server issues. Let us see some examples. Pass the -v option to view the complete request sent to the web server and the response received from it.

curl -v url

curl -o output.pdf -v https://www.cyberciti.biz/files/sticker/sticker_book.pdf

Getting HTTP headers information without downloading files

Another useful option is to fetch only the HTTP headers. HTTP servers support the HEAD method, which curl uses to get nothing but the headers of a document. Use it when you want to view the HTTP response headers without downloading the actual file:

curl -I url

curl -I https://www.cyberciti.biz/files/sticker/sticker_book.pdf
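When only one header matters, for instance the file size before starting a large download, the -I output can be filtered. This is a small sketch under stated assumptions: the function name header_value is made up, and the server is assumed to answer HEAD requests with the header in question.

```shell
# header_value URL NAME
# Print the value of a single response header (name matched case-insensitively).
header_value() {
  # -s hides the progress meter, -I sends a HEAD request.
  # tr removes the carriage returns that end HTTP header lines.
  curl -sI "$1" | tr -d '\r' | awk -v name="$2" '
    BEGIN { prefix = tolower(name) ":" }
    tolower(substr($0, 1, length(prefix))) == prefix {
      sub(/^[^:]*:[ \t]*/, "")   # drop "Name: " and keep the value
      print
    }'
}

# Example call:
# header_value https://www.cyberciti.biz/files/sticker/sticker_book.pdf content-length
```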


How do I skip SSL checks when using curl?

If the remote server has a self-signed certificate, you may want to skip the SSL certificate checks. To do so, pass the -k option as follows. Note that -k turns off certificate verification, so use it only with servers you trust:

curl -k url

curl -k https://www.cyberciti.biz/

Rate limiting download/upload speed

You can specify the maximum transfer rate curl should use for both downloads and uploads. This feature is handy if you have limited Internet bandwidth and do not want a transfer to use all of it. The given speed is measured in bytes/second unless a suffix is appended. Appending ‘k’ or ‘K’ counts the number as kilobytes, ‘m’ or ‘M’ makes it megabytes, and ‘g’ or ‘G’ makes it gigabytes. For example: 200K, 3m and 1G:

curl --limit-rate RATE url

curl --limit-rate 200 https://www.cyberciti.biz/

curl --limit-rate 3m https://www.cyberciti.biz/
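As a rough worked example of what a limit means in practice, the sketch below estimates how long a transfer takes at a given rate. It assumes the suffixes are 1024-based and that the transfer runs at exactly the limit for its whole duration; the function name eta_seconds and the 100 MB file size are made up for illustration.

```shell
# eta_seconds SIZE_BYTES RATE_BYTES_PER_SECOND
# Estimate the transfer time in whole seconds, rounding up.
eta_seconds() {
  echo $(( ($1 + $2 - 1) / $2 ))
}

size=$((100 * 1024 * 1024))   # a hypothetical 100 MB file
rate=$((200 * 1024))          # --limit-rate 200K, taking K as 1024 bytes
eta_seconds "$size" "$rate"   # prints 512
```

So a 100 MB download capped at 200K would need about eight and a half minutes, which is worth knowing before picking a limit for a large file.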

Setting up user agent

Some web application firewalls block the default curl user agent. To avoid such problems, pass the -A option, which lets you set the user agent:

curl -A 'user agent name' url

curl -A 'Mozilla/5.0 (X11; Fedora; Linux x86_64; rv:66.0) Gecko/20100101 Firefox/66.0' https://google.com/

Upload files with CURL

The syntax is as follows to upload files:

curl -F "var=@path/to/local/file.pdf" https://url/upload.php

For example, you can upload a file at /Pictures/test.png to the server https://127.0.0.1/app/upload.php, which processes file input with a form parameter named img_file. Run:

curl -F "img_file=@/Pictures/test.png" https://127.0.0.1/app/upload.php

One can upload multiple files as follows:

curl -F "img_file1=@/Pictures/test-1.png" \
-F "img_file2=@

Make curl silent

Want to hide the progress meter or error messages? Pass the -s or --silent option to turn on curl's quiet mode:

curl -s url

curl --silent --output filename https://url/foo.tar.gz
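In scripts, silent mode is often combined with -S (--show-error), so that failures still print a message, and with -f (--fail), so that HTTP errors produce a non-zero exit status. A minimal sketch of that combination follows; the function name quiet_fetch and its messages are hypothetical.

```shell
# quiet_fetch URL OUTPUT
# Download without a progress meter, but still report failures.
quiet_fetch() {
  local rc
  # -f: fail on HTTP errors, -s: no progress meter, -S: still show error messages
  if curl -fsS --output "$2" "$1"; then
    echo "saved $2"
  else
    rc=$?   # exit status of the failed curl run
    echo "download of $1 failed (curl exit code $rc)" >&2
    return "$rc"
  fi
}

# Example call:
# quiet_fetch https://url/foo.tar.gz filename
```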

Conclusion

Like most Linux or Unix CLI utilities, curl has much more to offer. You can learn more by reading its man page (man curl) or by running curl --help.


5 Linux Command Line Based Tools for Downloading Files and Browsing Websites

The command line is one of the most powerful parts of GNU/Linux. The shell itself is very productive, and the many built-in and third-party command-line applications make Linux robust and flexible. There are command-line tools for many web-related tasks, be it a torrent client, a dedicated downloader, or a web browser.

Here we present 5 command-line Internet tools that are handy for downloading files and browsing websites in Linux.

1. rTorrent

rTorrent is a text-based BitTorrent client written in C++ and aimed at high performance. It is available for most standard Linux distributions, as well as FreeBSD and Mac OS X.

Installation of rTorrent in Linux

Check if rtorrent is installed correctly by running the following command in the terminal.
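One generic way to check whether a tool is on the PATH is the shell's command -v built-in. The sketch below is an assumption-laden illustration rather than the article's own check: the function name check_tools is hypothetical, and it only tests that the command can be found, not that it works.

```shell
# check_tools NAME...
# Report which of the named commands can be found on the PATH.
check_tools() {
  local tool missing=0
  for tool in "$@"; do
    if command -v "$tool" >/dev/null 2>&1; then
      echo "$tool: installed"
    else
      echo "$tool: not found"
      missing=1
    fi
  done
  # Non-zero if at least one tool is missing.
  return "$missing"
}

# Example call covering the five tools in this article:
# check_tools rtorrent wget curl w3m elinks
```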

Functioning of rTorrent

Some useful key bindings and what they do:

- CTRL+q – Quit the rTorrent application

- CTRL+s – Start a download

- CTRL+d – Stop an active download or remove an already stopped download

- CTRL+k – Stop and close an active download

- CTRL+r – Hash check a torrent before the upload/download begins

- CTRL+q – When this key combination is pressed twice, rTorrent shuts down without sending a stop signal

- Left Arrow Key – Go to the previous screen

- Right Arrow Key – Go to the next screen

2. Wget

Wget is part of the GNU Project; its name is derived from World Wide Web and get. It is useful for recursive downloads and offline viewing of HTML, and it is available for most platforms, including Windows, Mac, and Linux.

Wget makes it possible to download files over HTTP, HTTPS, and FTP. It can also mirror a whole website, and it supports proxies and pausing/resuming downloads.

Installation of Wget in Linux

As a GNU project, Wget comes bundled with most standard Linux distributions, so there is usually no need to download and install it separately. If it is not installed by default, you can install it using apt, yum, or dnf.

Basic Usage of Wget Command

Download a single file using wget.

Download a whole website, recursively.

Download specific types of files (say pdf and png) from a website.
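The three cases above can be sketched as follows, using wget's -r (recursive) and -A (accept list) options. The wrapper name wget_demo and the example.com URL are placeholders for illustration, not commands from the article.

```shell
# wget_demo MODE URL
# Hypothetical wrapper that maps the three cases above to wget options.
wget_demo() {
  case "$1" in
    single) wget "$2" ;;                # download a single file
    mirror) wget -r "$2" ;;             # follow links and download recursively
    types)  wget -r -A pdf,png "$2" ;;  # recursive, keeping only pdf and png files
    *) echo "usage: wget_demo single|mirror|types URL" >&2; return 2 ;;
  esac
}

# Example call with a placeholder URL:
# wget_demo types https://www.example.com/
```

Recursive downloads can fetch a large number of files, so it is worth trying them first on a small site you control.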

Wget enables custom and filtered downloads, even on a machine with limited resources.

For more such wget download examples, read our article that shows 10 Wget Download Command Examples.

3. cURL

cURL is a command-line tool for transferring data over a number of protocols. It is a client-side application that supports protocols like FTP, HTTP, FTPS, TFTP, TELNET, IMAP, POP3, etc.

cURL is a simple downloader that differs from wget in supporting additional protocols such as LDAP and POP3. Proxy downloads and pausing and resuming downloads are also well supported in cURL.

Installation of cURL in Linux

cURL is available in most distributions, either preinstalled or in the repository. If it is not installed, use apt or yum to get the required package from the repository.

Basic Usage of cURL Command

For more such curl command examples, read our article that shows 15 Tips On How to Use ‘Curl’ Command in Linux.

4. w3m

w3m is a text-based web browser released under the GPL. It supports tables, frames, color, SSL connections, and inline images, and it is known for fast browsing.

Installation of w3m in Linux

Again, w3m is available by default in most Linux distributions. If it is not available, you can install the required package with apt or yum.

Basic Usage of w3m Command

5. Elinks

Elinks is a free text-based web browser for Unix and Unix-based systems. It supports HTTP and HTTP cookies, as well as browser scripting in Perl and Ruby.

Tab-based browsing is well supported. Best of all, it supports the mouse and display colours, along with a number of protocols like HTTP, FTP, SMB, IPv4, and IPv6.

Installation of Elinks in Linux

Elinks is also available by default in most Linux distributions. If not, install it via apt or yum.

Basic Usage of elinks Command

That’s all for now. I’ll be here again with an interesting article which you people will love to read. Till then stay tuned and connected to Tecmint and don’t forget to give your valuable feedback in the comment section.
