- Delayed ACKs on Linux

- Delayed acknowledgment mechanism

- 1. Why does TCP delayed acknowledgment cause latency?

- Delayed acknowledgment and Nagle algorithm

- Delayed acknowledgment and congestion control

- 2. Why is it 40ms? Can this time be adjusted?

- 3. Why does TCP_QUICKACK need to be reset after each call to recv?

- 4. Why aren’t all packets acknowledged with a delay?

- 5. On the TCP_CORK option

- Delayed ACK and TCP Performance

- uIP and NuttX

- uIP, Delayed ACKs, and Split Packets

- The NuttX TCP/IP Stack and Delay ACKs

- The NuttX Split Packet Configuration

- Write Buffering

Delayed ACKs on Linux

Case 1: A colleague wrote a stress-test program with the following logic: every second, send N 132-byte packets in a row, then receive the N 132-byte packets returned by the backend service. The code was roughly as follows:
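A minimal sketch of such a test round, assuming an already connected stream socket. The names `send_then_recv_batch`, `send_all` and `recv_all` are illustrative and are not taken from the colleague's program.

```c
#include <assert.h>
#include <stddef.h>
#include <sys/types.h>
#include <sys/socket.h>

#define PKT_SIZE 132  /* packet size used in the test described above */

/* Send exactly len bytes; returns 0 on success, -1 on error. */
static int send_all(int fd, const char *buf, size_t len)
{
    while (len > 0) {
        ssize_t n = send(fd, buf, len, 0);
        if (n <= 0)
            return -1;
        buf += n;
        len -= (size_t)n;
    }
    return 0;
}

/* Receive exactly len bytes; returns 0 on success, -1 on error or EOF. */
static int recv_all(int fd, char *buf, size_t len)
{
    while (len > 0) {
        ssize_t n = recv(fd, buf, len, 0);
        if (n <= 0)
            return -1;
        buf += n;
        len -= (size_t)n;
    }
    return 0;
}

/* One round of the test: send n packets back to back, then read n replies. */
int send_then_recv_batch(int fd, int n)
{
    char pkt[PKT_SIZE] = {0};
    int i;

    assert(n >= 0);
    for (i = 0; i < n; i++)
        if (send_all(fd, pkt, sizeof(pkt)) != 0)
            return -1;
    for (i = 0; i < n; i++)
        if (recv_all(fd, pkt, sizeof(pkt)) != 0)
            return -1;
    return 0;
}
```

A driver would call `send_then_recv_batch(fd, N)` once per second and time each round.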

In the actual test, when N was greater than or equal to 3, every third recv call from the second second onward blocked for about 40 milliseconds. The server-side logs, however, showed that every request was processed in under 2 ms.

The troubleshooting went as follows. I first tried to trace the client process with strace, but strangely, as soon as strace was attached to the process all sends and receives behaved normally and nothing blocked; once strace exited, the problem reappeared. A colleague pointed out that strace had probably changed something in the program or the system (this has not yet been clarified), so I captured packets with tcpdump instead. The capture showed that after the backend returned a response packet, the client did not acknowledge the data immediately but waited approximately 40 milliseconds before sending the ACK. After some searching and a look at TCP/IP Illustrated, Volume 1: The Protocols, it turned out that this is TCP’s delayed ACK mechanism.

The solution is as follows: after the recv system call, call setsockopt once to set TCP_QUICKACK. The final code is as follows:
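A minimal sketch of this fix on Linux, assuming a connected TCP socket. The helper names `enable_quickack` and `recv_quickack` are illustrative; only `recv`, `setsockopt` and `TCP_QUICKACK` come from the text.

```c
#include <assert.h>
#include <stddef.h>
#include <netinet/in.h>
#include <netinet/tcp.h>
#include <sys/types.h>
#include <sys/socket.h>

/* Ask the kernel to ACK immediately instead of delaying (Linux-specific). */
int enable_quickack(int fd)
{
    int on = 1;
    return setsockopt(fd, IPPROTO_TCP, TCP_QUICKACK, &on, sizeof(on));
}

/* recv() followed by setting TCP_QUICKACK again, as described above. */
ssize_t recv_quickack(int fd, void *buf, size_t len)
{
    ssize_t n;

    assert(buf != NULL);
    n = recv(fd, buf, len, 0);
    if (n > 0 && enable_quickack(fd) != 0)
        return -1;   /* errno was set by setsockopt */
    return n;
}
```

Wrapping both calls in one helper makes it harder to forget the setsockopt call after a recv.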

Case 2: While performance-testing the CDKEY version of the marketing platform, we found an abnormal latency distribution: 90% of requests completed within 2 ms, while roughly 10% always took between 38 and 42 ms. That is a suspiciously regular number: 40 ms. Having been through Case 1, I guessed that the delayed acknowledgment mechanism was again responsible. A quick packet capture confirmed it, and setting the TCP_QUICKACK option solved the latency problem.

Delayed acknowledgment mechanism

The principle is described in detail in Chapter 19 of TCP/IP Illustrated, Volume 1: The Protocols. When TCP handles an interactive data flow (as opposed to a bulk data flow; typical interactive flows are telnet, rlogin, and the like), it uses the delayed ACK mechanism and the Nagle algorithm to reduce the number of small packets.

The book explains the principles of both mechanisms clearly, so they are not repeated here. The rest of this article explains TCP delayed acknowledgment by analyzing the TCP/IP implementation in Linux.

1. Why does TCP delayed acknowledgment cause latency?

In fact, the delayed acknowledgment mechanism alone does not delay a request (I initially assumed that the recv system call would not return until the ACK had been sent). In general, latency grows only when the mechanism interacts with the Nagle algorithm or with congestion control (slow start or congestion avoidance). Let’s take a closer look at how they interact:

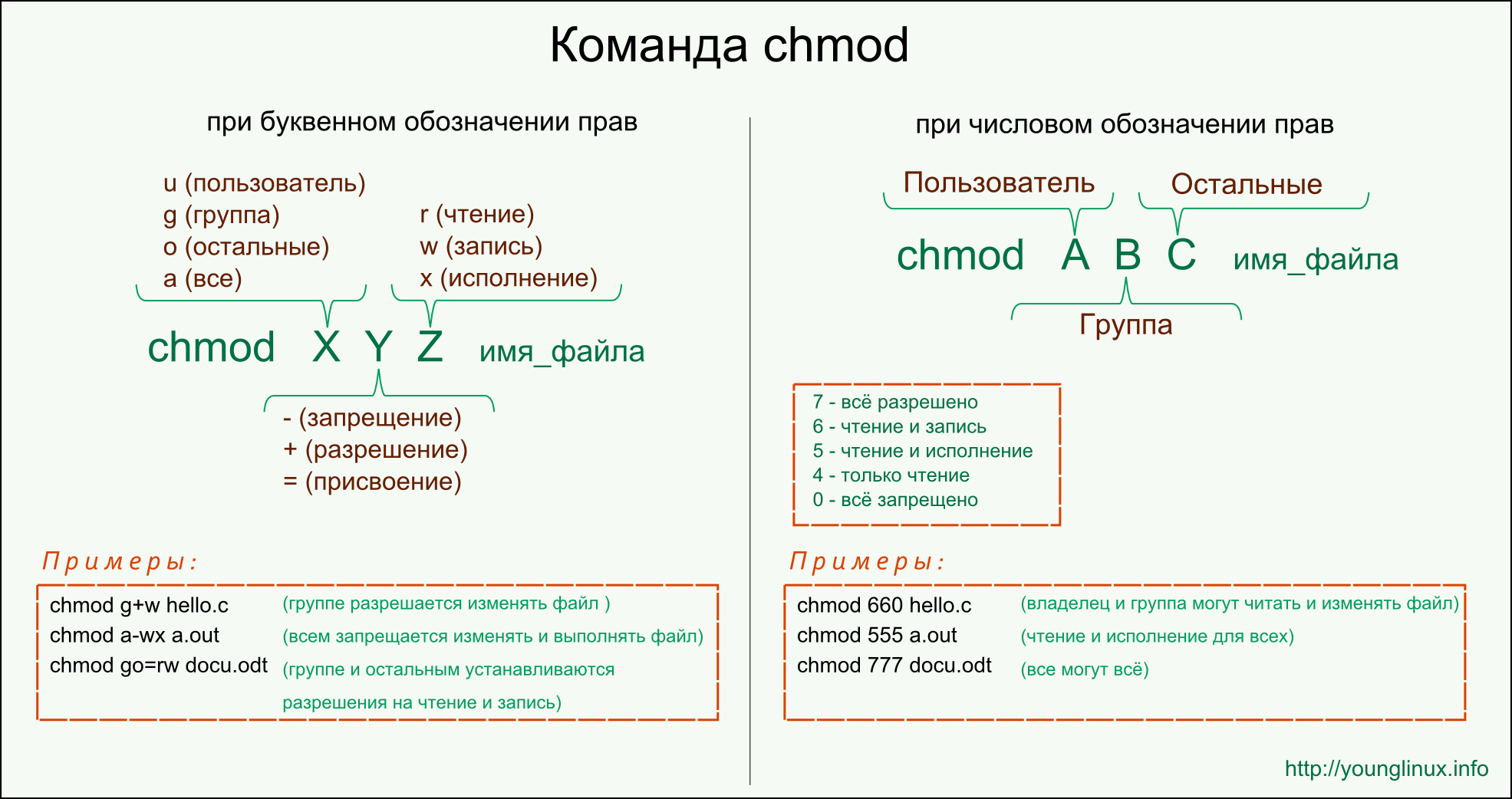

Delayed acknowledgment and Nagle algorithm

Let’s take a look at the rules of the Nagle algorithm (see the tcp_nagle_check function comment in the tcp_output.c file):

1) If the packet length reaches MSS, it is allowed to send;

2) If it contains FIN, it is allowed to send;

3) If the TCP_NODELAY option is set, it is allowed to send;

4) When the TCP_CORK option is not set, if all outgoing packets are acknowledged, or all outgoing small packets (packet length less than MSS) are acknowledged, then transmission is allowed.

Rule 4) means that a TCP connection can have at most one unacknowledged small packet in flight; no further small packet can be sent until the acknowledgment of that packet arrives. If the acknowledgment of a small packet is delayed (by 40 ms in this case), the transmission of the following small packet is delayed accordingly. In other words, delayed acknowledgment does not affect the packet whose acknowledgment was delayed, but the response packet that follows it.

In the tcpdump capture above, the 8th packet is the delayed acknowledgment. The data of the 9th packet was already in the TCP send buffer on the server side (172.25.81.16), since the application-layer send call had already returned, but under the Nagle algorithm the 9th packet had to wait until the ACK of the 7th packet (smaller than MSS) arrived.

Delayed acknowledgment and congestion control

We first turn off the Nagle algorithm with the TCP_NODELAY option, and then analyze how delayed acknowledgment interacts with TCP congestion control.

Slow start: the TCP sender maintains a congestion window, denoted cwnd. When the connection is established, cwnd is initialized to one segment, and each time an ACK is received it grows by one segment. The sender uses the smaller of the congestion window and the advertised window (the one used by the sliding window mechanism) as its sending limit: the congestion window is flow control imposed by the sender, the advertised window is flow control imposed by the receiver. The sender starts by sending 1 segment. When its ACK arrives, cwnd grows from 1 to 2, so 2 segments can be sent. When the ACKs of those two segments arrive, cwnd grows to 4. Because cwnd grows by 1 for every ACK received, it doubles every RTT: one packet and one ACK in the first RTT, two packets and two ACKs in the second, and so on. This is exponential growth.
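The doubling can be checked with a few lines of arithmetic. The function below is an idealized model (one ACK per segment, no loss, no delayed ACKs), not kernel code, and its name is made up for this sketch.

```c
#include <assert.h>

/* cwnd (in segments) after `rtts` round trips of idealized slow start. */
unsigned slow_start_cwnd(unsigned initial_cwnd, unsigned rtts)
{
    unsigned cwnd = initial_cwnd;
    unsigned r, i, acks;

    assert(initial_cwnd > 0);
    for (r = 0; r < rtts; r++) {
        acks = cwnd;               /* one ACK per segment sent this RTT */
        for (i = 0; i < acks; i++)
            cwnd += 1;             /* each ACK grows cwnd by one segment */
    }
    return cwnd;
}
```

Starting from 1 segment, the model gives 2 after one RTT and 4 after two, matching the description above.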

In the Linux implementation, cwnd is not incremented for every ACK received: if no other packets are waiting to be acknowledged when the ACK arrives, cwnd does not grow.

Using the test code from Case 1, cwnd started from an initial value of 2 in the actual test and finally settled at 3 segments. The tcpdump results are as follows:

The packets in the table above were captured with TCP_NODELAY set and with cwnd already grown to 3. After the 7th, 8th, and 9th packets are sent, the sender is limited by the congestion window: even though the TCP buffer holds more data, it cannot continue sending. That is, the 11th packet cannot be sent until the 10th packet arrives, and the 10th packet clearly arrives after a 40 ms delay.

Note: The TCP_INFO option (man 7 tcp) of getsockopt can be used to view the details of the TCP connection, such as the current congestion window size, MSS, and so on.

2. Why is it 40ms? Can this time be adjusted?

First of all, the official Red Hat documentation contains the following description:

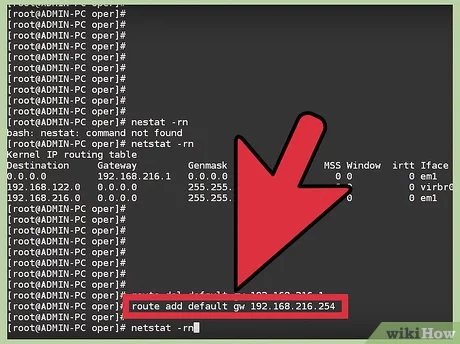

Some applications may experience latency when sending small packets because of TCP’s delayed ACK mechanism, whose timeout defaults to 40 ms. The system-wide minimum delayed acknowledgment time can be adjusted by modifying tcp_delack_min. For example:

# echo 1 > /proc/sys/net/ipv4/tcp_delack_min

That is, the minimum delayed acknowledgment timeout is expected to become 1 ms.

However, this option was not found on Slackware or SUSE systems, which means that on these two systems the 40 ms minimum cannot be adjusted through configuration.

There is a macro definition in linux-2.6.39.1/include/net/tcp.h:

Note: The Linux kernel raises a timer interrupt (IRQ 0) at a fixed interval. HZ defines the number of timer interrupts per second; for example, an HZ of 1000 means 1000 timer interrupts per second. HZ can be set when the kernel is compiled. On our existing servers, HZ is 250.

From this, the minimum delayed acknowledgment time is 40 ms.
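The arithmetic behind that figure can be sketched as follows. It assumes that the macro in question is TCP_DELACK_MIN and that it expands to HZ/25 jiffies; the definition is not reproduced in the text, so treat that as an assumption. The function names are made up for this sketch.

```c
#include <assert.h>

/* Minimum delayed-ACK timeout in jiffies, ASSUMING the macro is HZ/25. */
unsigned delack_min_jiffies(unsigned hz)
{
    assert(hz >= 25);
    return hz / 25;
}

/* The same value in milliseconds: one jiffy lasts 1000/HZ ms. */
unsigned delack_min_ms(unsigned hz)
{
    return delack_min_jiffies(hz) * 1000u / hz;
}
```

With HZ = 250 this is 10 jiffies of 4 ms each, that is, 40 ms; under the same assumption the result is also 40 ms for HZ = 100 and HZ = 1000.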

The delayed acknowledgment time of a TCP connection is generally initialized to the minimum of 40 ms and then adjusted continuously according to parameters such as the connection’s retransmission timeout (RTO) and the interval between the previously received data packet and the current one. For the specific adjustment algorithm, see the tcp_event_data_recv function in linux-2.6.39.1/net/ipv4/tcp_input.c, line 564.

3. Why does TCP_QUICKACK need to be reset after each call to recv?

man 7 tcp contains the following description:

The manual clearly states that TCP_QUICKACK is not permanent. So how is it actually implemented? See the handling of the TCP_QUICKACK option in the setsockopt function:

The Linux socket has a pingpong attribute that indicates whether the current connection is an interactive data flow. A value of 1 means that it is an interactive flow and that the delayed acknowledgment mechanism is used. The value of pingpong changes dynamically, however. For example, when a TCP connection is about to send a packet, the following function (linux-2.6.39.1/net/ipv4/tcp_output.c, line 156) is executed:

The last two lines of that code mean that if the time elapsed between the last received packet and the current moment is less than the calculated delayed acknowledgment timeout, the connection re-enters interactive data flow mode. Put differently, whenever the delayed acknowledgment mechanism proves effective, the connection automatically switches back to interactive mode.

It follows from this analysis that the TCP_QUICKACK option needs to be set again after each call to recv.

4. Why aren’t all packets acknowledged with a delay?

The TCP implementation uses tcp_in_quickack_mode (linux-2.6.39.1/net/ipv4/tcp_input.c, line 197) to decide whether an ACK must be sent immediately. The function is implemented as follows:

Two conditions must hold for the connection to be considered in quickack mode:

1. pingpong is set to 0.

2. The quick acknowledgment count (quick) is non-zero.

The value of pingpong was described earlier. The quick attribute is commented in the code as "scheduled number of quick acks", that is, the number of packets to be acknowledged quickly. Each time quickack mode is entered, quick is initialized to the receive window divided by twice the MSS (linux-2.6.39.1/net/ipv4/tcp_input.c, line 174), and each time an ACK packet is sent, quick is decremented by 1.
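The two conditions and the quick counter can be modelled in a few lines. This is a toy model built only from the description above; the struct and function names are illustrative and do not match the kernel's data structures.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>

/* Toy model of the per-connection ACK state; not the kernel's struct. */
struct ack_state {
    bool pingpong;    /* true: interactive flow, delayed ACKs in use */
    unsigned quick;   /* scheduled number of quick ACKs              */
};

/* Entering quickack mode: quick = receive window / (2 * MSS). */
void enter_quickack_mode(struct ack_state *s, unsigned rcv_wnd, unsigned mss)
{
    assert(s != NULL && mss > 0);
    s->pingpong = false;
    s->quick = rcv_wnd / (2 * mss);
}

/* Both conditions must hold: pingpong is 0 and quick is non-zero. */
bool in_quickack_mode(const struct ack_state *s)
{
    return !s->pingpong && s->quick != 0;
}

/* Called for each ACK sent; returns true if it goes out immediately. */
bool send_ack(struct ack_state *s)
{
    bool immediate = in_quickack_mode(s);

    if (s->quick > 0)
        s->quick--;   /* one scheduled quick ACK has been used up */
    return immediate;
}
```

With a 5840-byte receive window and a 1460-byte MSS, the model schedules two quick ACKs; the third ACK falls back to delayed acknowledgment.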

5. On the TCP_CORK option

The TCP_CORK option, like TCP_NODELAY, controls the Nagle algorithm.

1. Turning on the TCP_NODELAY option means that a packet is sent immediately no matter how small it is (leaving the congestion window aside).

2. If you compare a TCP connection to a pipe, the TCP_CORK option acts like a plug. Setting TCP_CORK plugs the pipe, and clearing TCP_CORK pulls the plug out.

For example, the following code:

When the TCP_CORK option is set, the TCP connection does not send small packets; data is sent only once it amounts to a full MSS. When the data transfer is complete, the option usually has to be cleared so that data that was held back because it did not fill an MSS-sized packet is sent out promptly. Web servers and file servers commonly use this option to improve performance and throughput.
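A minimal sketch of the plug/unplug pattern on Linux, assuming a connected TCP socket and a response made of a header and a body. The helper names `set_cork` and `send_corked` are illustrative.

```c
#include <assert.h>
#include <stddef.h>
#include <netinet/in.h>
#include <netinet/tcp.h>
#include <sys/types.h>
#include <sys/socket.h>

/* Plug (on = 1) or unplug (on = 0) the connection. Linux-specific. */
int set_cork(int fd, int on)
{
    return setsockopt(fd, IPPROTO_TCP, TCP_CORK, &on, sizeof(on));
}

/* Send a header and a body while corked so that they can share segments. */
int send_corked(int fd, const void *hdr, size_t hdr_len,
                const void *body, size_t body_len)
{
    int rc = -1;

    assert(hdr != NULL && body != NULL);
    if (set_cork(fd, 1) != 0)
        return -1;
    if (send(fd, hdr, hdr_len, 0) == (ssize_t)hdr_len &&
        send(fd, body, body_len, 0) == (ssize_t)body_len)
        rc = 0;
    /* Always unplug, so a trailing partial segment is not held back. */
    if (set_cork(fd, 0) != 0)
        rc = -1;
    return rc;
}
```

Clearing the option even on the error path keeps a failed send from leaving the socket corked.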


Delayed ACK and TCP Performance

uIP and NuttX

The heart of the NuttX IP stack was derived from Adam Dunkels’ tiny uIP stack, back at version 1.0.

The NuttX TCP/IP stack contains the uIP TCP state machine and some uIP "ways of doing things," but otherwise there is now little in common between the two designs.

NOTE: uIP is also built into Adam Dunkels’ Contiki operating system.

uIP, Delayed ACKs, and Split Packets

In uIP, TCP packets are sent and ACK’ed one at a time.

That is, after one TCP packet is sent, the next packet cannot be sent until the previous packet has been ACKed by the receiving side.

The TCP protocol, of course, supports sending multiple packets, which can be ACKed by the receiving side asynchronously.

This one-packet-at-a-time logic is a simplification in the uIP design; because of this, uIP needs only a single packet buffer and you can use uIP in even the tiniest environments.

This is a good thing for the objectives of uIP.

Improved packet buffering is the essential improvement that you get if you upgrade from Adam Dunkels’ uIP to his lwIP stack.

The price that you pay is in memory usage.

This one-at-a-time packet transfer does create a performance problem for uIP:

RFC 1122 states that a host may delay ACKing a packet for up to 500ms but must respond with an ACK to every second segment.

In the baseline uIP, this effectively adds a one-half-second delay between the transfer of every packet to a recipient that employs this delayed ACK policy!

uIP has an option to work around this:

It has logic that can be enabled to split each packet in half, sending half as much data in each packet.

Sending more, smaller packets does not sound like a performance improvement.

However, this tricks the recipient that follows RFC 1122 into receiving two smaller back-to-back packets and ACKing the second immediately.

References: uip-split.c and uip-split.h.
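The splitting step itself is simple arithmetic. The function below is a hypothetical sketch of halving one payload; it is not the code in uip-split.c, and the name `split_payload` is made up.

```c
#include <assert.h>
#include <stddef.h>

/* Split one payload into two back-to-back segments of near-equal size. */
void split_payload(size_t len, size_t *first, size_t *second)
{
    assert(first != NULL && second != NULL);
    *first = len / 2;
    *second = len - *first;   /* the second half absorbs an odd byte */
}
```

The recipient then sees two segments in a row and, under the every-second-segment rule, ACKs the second one without waiting.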

The NuttX TCP/IP Stack and Delay ACKs

The NuttX low-level TCP/IP stack does not have the limitations of the uIP TCP/IP stack.

It can send numerous TCP/IP packets regardless of whether they have been ACKed or not.

That is because in NuttX, the accounting for which packets have been ACKed and which have not has been moved to a higher level in the architecture.

NuttX includes a standard BSD socket interface on top of the low-level TCP/IP stack.

It is in this higher-level socket layer that the ACK accounting is done, specifically in the function send().

If you send a large, multi-packet buffer via send(), it will be broken up into individual packets and each packet will be sent as quickly as possible, with no concern for whether the previous packet has been ACKed or not.

However, the NuttX send() function will not return to the caller until the final packet has been ACKed.

It does this to assure that the caller’s data was sent successfully (or not).

This behavior means that if an odd number of packets were sent, there could still be a delay after the final packet before send() receives the ACK and returns.

So the NuttX approach is similar to the uIP way of doing things, but does add one more buffer, the user-provided buffer to send(), that can be used to improve TCP/IP performance (of course, this user-provided buffer is also required in order to be compliant with the send() specification).

The NuttX Split Packet Configuration

But what happens if the entire user buffer is smaller than the MSS of one TCP packet?

Suppose the MTU is 1500 and the user I/O buffer is only 512 bytes?

In this case, send() performance degenerates to the same behavior as uIP:

An ACK is required for each packet before send() can return and before send() can be called again to send the next packet.

And the fix? A fix has recently been contributed by Yan T that works in a similar way to the uIP split packet logic:

In send(), the logic normally tries to send a full packet of data each time it has the opportunity to do so.

However, if the configuration option CONFIG_NET_TCP_SPLIT=y is defined, the behavior of send() will change in the following way:

- send() will keep track of even and odd packets; even packets being those that we do not expect to be ACKed and odd packets being those that we do expect to be ACKed.

- send() will then reduce the size of even packets as necessary to assure that an even number of packets is always sent. Every call to send() will result in an even number of packets being sent.

This clever solution tricks the RFC 1122 recipient in the same way that the uIP split logic does.

So if you are working with hosts that follow the RFC 1122 ACKing behavior and you have MSS sizes that are larger than the average size of the user buffers, then your throughput can probably be greatly improved by enabling CONFIG_NET_TCP_SPLIT=y.
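One way to picture the even/odd bookkeeping is a function that plans the packet sizes for a single send() call. This is a hypothetical sketch of the idea, not the NuttX implementation; packets are numbered from 0, and the name `plan_even_packets` is made up.

```c
#include <assert.h>
#include <stddef.h>

/*
 * Fills sizes[] with the length of each packet for a buffer of `len`
 * bytes and returns the number of packets planned (at most `max`).
 */
size_t plan_even_packets(size_t len, size_t mss, size_t *sizes, size_t max)
{
    size_t count = 0;

    assert(mss > 0 && sizes != NULL);
    while (len > 0 && count < max) {
        size_t n = len < mss ? len : mss;

        /* An even-numbered packet (0, 2, ...) that would be the last one
         * is halved, so that an odd-numbered packet follows it. */
        if (count % 2 == 0 && len <= mss && len >= 2)
            n = len / 2;
        sizes[count++] = n;
        len -= n;
    }
    return count;
}
```

For a 512-byte buffer and an MSS of 1460, the plan is two 256-byte packets instead of one 512-byte packet. A 1-byte buffer cannot be split and still goes out as a single packet in this sketch.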

NOTE: NuttX is not an RFC 1122 recipient; NuttX will ACK every TCP/IP packet that it receives.

Write Buffering

The best technical solution to the delayed ACK problem would be to support write buffering.

Write buffering is enabled with CONFIG_NET_TCP_WRITE_BUFFERS. If this option is selected, the NuttX networking layer will pre-allocate several write buffers at system initialization time. Sending a buffer of data then works like this:

- send() (1) obtains a pre-allocated write buffer from a free list, and then (2) simply copies the buffer of data that the user wishes to send into the allocated write buffer. If no write buffer is available, send() would have to block waiting for free write buffer space.

- send() then (3) adds the write buffer to a queue of outgoing data for a TCP socket. Each open TCP socket has to support such a queue. send() could then (4) return success to the caller (even though the transfer could still fail later).

- Logic outside of the send() implementation manages the actual transfer of data from the write buffer. When the Ethernet driver is able to send a packet on the TCP connection, this external logic (5) copies a packet of data from the write buffer so that the Ethernet driver can perform the transmission (a zero-copy implementation would be preferable). Note that the data has to remain in the write buffer for now; it may need to be re-transmitted.

- This external logic would also manage the receipt of TCP ACKs. When the TCP peer acknowledges the receipt of data, the acknowledged portion of the data can then (6) finally be deleted from the write buffer.
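Steps (1) to (4) and (6) can be sketched with a tiny fixed pool and a per-socket queue. This is a hypothetical model for illustration, not NuttX code: the types, the function names `wb_send` and `wb_ack`, and the sizes are made up, and the sketch returns -1 where a real send() would block.

```c
#include <assert.h>
#include <stddef.h>
#include <string.h>

#define WB_SIZE  64   /* stands in for CONFIG_NET_TCP_WRITE_BUFSIZE  */
#define WB_COUNT 2    /* stands in for CONFIG_NET_NTCP_WRITE_BUFFERS */

struct write_buf {
    unsigned char data[WB_SIZE];
    size_t len;     /* bytes stored                   */
    size_t acked;   /* bytes already acknowledged     */
    int in_use;
};

struct wb_socket {
    struct write_buf pool[WB_COUNT];  /* pre-allocated write buffers */
    int queue[WB_COUNT];              /* FIFO of pool indices        */
    size_t queued;
};

/* Steps 1-4: take a free buffer, copy the data, enqueue, report success.
 * Returns the bytes accepted, or -1 if no buffer is free. */
long wb_send(struct wb_socket *s, const void *data, size_t len)
{
    int i;

    assert(s != NULL && data != NULL);
    if (len > WB_SIZE)
        len = WB_SIZE;
    for (i = 0; i < WB_COUNT; i++) {
        if (!s->pool[i].in_use) {
            memcpy(s->pool[i].data, data, len);
            s->pool[i].len = len;
            s->pool[i].acked = 0;
            s->pool[i].in_use = 1;
            s->queue[s->queued++] = i;
            return (long)len;
        }
    }
    return -1;
}

/* Step 6: the peer acknowledged n more bytes; free fully ACKed buffers. */
void wb_ack(struct wb_socket *s, size_t n)
{
    assert(s != NULL);
    while (n > 0 && s->queued > 0) {
        struct write_buf *wb = &s->pool[s->queue[0]];
        size_t left = wb->len - wb->acked;
        size_t take = n < left ? n : left;

        wb->acked += take;
        n -= take;
        if (wb->acked == wb->len) {   /* nothing left to retransmit */
            wb->in_use = 0;
            s->queued--;
            memmove(&s->queue[0], &s->queue[1],
                    s->queued * sizeof(s->queue[0]));
        }
    }
}
```

The data stays in the buffer until it is acknowledged, which is what allows retransmission after send() has already returned.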

The following options configure TCP write buffering:

- CONFIG_NET_TCP_WRITE_BUFSIZE : The size of one TCP write buffer.

- CONFIG_NET_NTCP_WRITE_BUFFERS : The number of TCP write buffers (may be zero to disable TCP/IP write buffering)

NuttX also supports TCP read-ahead buffering. This option is enabled with CONFIG_NET_TCP_READAHEAD. TCP read-ahead buffering is necessary on TCP connections; otherwise data received while there is no recv() in place would be lost. For consistency, it would be best if such a TCP write buffer implementation worked in a manner similar to the existing TCP read-ahead buffering.

The following lists the NuttX configuration options available to configure the TCP read-ahead buffering feature:

- CONFIG_NET_TCP_READAHEAD_BUFSIZE : The size of one TCP read-ahead buffer.

- CONFIG_NET_NTCP_READAHEAD_BUFFERS : The number of TCP read-ahead buffers (may be zero to disable TCP/IP read-ahead buffering)

A future enhancement is to combine the TCP write buffer management logic and the TCP read-ahead buffer management so that one common pool of buffers can be used for both functions (this would probably also require additional logic to throttle read-buffering so that received messages do not consume all of the buffers).
