- Migrate Cluster Roles to Windows Server 2012 R2

- Operating system requirements for clustered roles and feature migrations

- Target audience

- What this guide does not provide

- Planning considerations for migrations between failover clusters

- Migration scenarios that use the Copy Cluster Roles Wizard

- Failover Clustering Overview

- Feature description

- Practical applications

- New and changed functionality

- Hardware requirements

- Software requirements

- Server Manager information

- See also

- Network Recommendations for a Hyper-V Cluster in Windows Server 2012

- Overview of different network traffic types

- Management traffic

- Cluster traffic

- Live migration traffic

- Storage traffic

- Replica traffic

- Virtual machine access traffic

- How to isolate the network traffic on a Hyper-V cluster

- Isolate traffic on the management network

- Isolate traffic on the cluster network

- Isolate traffic on the live migration network

- Isolate traffic on the storage network

- Isolate traffic for replication

- NIC Teaming (LBFO) recommendations

- Quality of Service (QoS) recommendations

- Virtual machine queue (VMQ) recommendations

- Example of converged networking: routing traffic through one Hyper-V virtual switch

- Appendix: Encryption

- Cluster traffic

- Live migration traffic

- SMB traffic

- Replica traffic

Migrate Cluster Roles to Windows Server 2012 R2

Applies To: Windows Server 2012 R2

This guide provides step-by-step instructions for migrating clustered services and applications to a failover cluster running Windows Server 2012 R2 by using the Copy Cluster Roles Wizard. Not all clustered services and applications can be migrated using this method. This guide describes supported migration paths and provides instructions for migrating between two multi-node clusters or performing an in-place migration with only two servers. Instructions for migrating a highly available virtual machine to a new failover cluster, and for updating mount points after a clustered service migration, also are provided.

Operating system requirements for clustered roles and feature migrations

The Copy Cluster Roles Wizard supports migration to a cluster running Windows Server 2012 R2 from a cluster running any of the following operating systems:

Windows Server 2008 R2 with Service Pack 1 (SP1)

Windows Server 2012

Windows Server 2012 R2

Migrations are supported between different editions of the operating system (for example, from Windows Server Enterprise to Windows Server Datacenter), between x86 and x64 processor architectures, and from a cluster running Windows Server Core or the Microsoft Hyper-V Server R2 operating system to a cluster running a full version of Windows Server.

The following migration scenarios are not supported:

Migrations from Windows Server 2003, Windows Server 2003 R2, or Windows Server 2008 to Windows Server 2012 R2 are not supported. You should first upgrade to Windows Server 2008 R2 SP1 or Windows Server 2012, and then migrate the resources to Windows Server 2012 R2 using the steps in this guide. For information about migrating to a Windows Server 2012 failover cluster, see Migrating Clustered Services and Applications to Windows Server 2012. For information about migrating to a Windows Server 2008 R2 failover cluster, see Migrating Clustered Services and Applications to Windows Server 2008 R2 Step-by-Step Guide.

The Copy Cluster Roles Wizard does not support migrations from a Windows Server 2012 R2 failover cluster to a cluster with an earlier version of Windows Server.

Before you perform a migration, you should install the latest updates for the operating systems on both the old failover cluster and the new failover cluster.

Target audience

This migration guide is designed for cluster administrators who want to migrate their existing clustered roles, on a failover cluster running an earlier version of Windows Server, to a Windows Server 2012 R2 failover cluster. The focus of the guide is the steps required to successfully migrate the clustered roles and resources from one cluster to another by using the Copy Cluster Roles Wizard in Failover Cluster Manager.

General knowledge of how to create a failover cluster, configure storage and networking, and deploy and manage the clustered roles and features is assumed.

It is also assumed that customers who will use the Copy Cluster Roles Wizard to migrate highly available virtual machines have a basic knowledge of how to create, configure, and manage highly available Hyper-V virtual machines.

What this guide does not provide

This guide does not provide instructions for migrating clustered roles by methods other than using the Copy Cluster Roles Wizard.

This guide identifies clustered roles that require special handling before and after a wizard-based migration, but it does not provide detailed instructions for migrating any specific role or feature. To find out requirements and dependencies for migrating a specific Windows Server role or feature, see Migrate Roles and Features to Windows Server 2012 R2.

This guide does not provide detailed instructions for migrating a highly available virtual machine (HAVM) by using the Copy Cluster Roles Wizard. For a full discussion of migration options and requirements for migrating HAVMs to a Windows Server 2012 R2 failover cluster, and step-by-step instructions for performing a migration by using the Copy Cluster Roles Wizard, see Hyper-V: Hyper-V Cluster Migration.

Planning considerations for migrations between failover clusters

As you plan a migration to a failover cluster running Windows Server 2012 R2, consider the following:

For your cluster to be supported by Microsoft, the cluster configuration must pass cluster validation. All hardware used by the cluster should be Windows logo certified. If any of your hardware is not listed in the Windows Server Catalog as certified for Windows Server 2012 R2, contact your hardware vendor to find out their certification timeline.

In addition, the complete configuration (servers, network, and storage) must pass all tests in the Validate a Configuration Wizard, which is included in the Failover Cluster Manager snap-in. For more information, see Validate Hardware for a Failover Cluster.

Hardware requirements are especially important if you plan to continue to use the same servers or storage for the new cluster that the old cluster used. When you plan the migration, you should check with your hardware vendor to ensure that the existing storage meets certification requirements for use with Windows Server 2012 R2. For more information about hardware requirements, see Failover Clustering Hardware Requirements and Storage Options.

The Copy Cluster Roles Wizard assumes that the migrated role or feature will use the same storage that it used on the old cluster. If you plan to migrate to new storage, you must copy or move the data or folders (including shared folder settings) manually. The wizard also does not copy any mount point information used in the old cluster. For information about handling mount points during a migration, see Cluster Migrations Involving New Storage: Mount Points.

Not all clustered services and features can be migrated to a Windows Server 2012 R2 failover cluster by using the Copy Cluster Roles Wizard. To find out which clustered services and applications can be migrated by using the Copy Cluster Roles Wizard, and operating system requirements for the source failover cluster, see Migration Paths for Migrating to a Failover Cluster Running Windows Server 2012 R2.

Migration scenarios that use the Copy Cluster Roles Wizard

When you use the Copy Cluster Roles Wizard for your migration, you can choose from a variety of methods to perform the overall migration. This guide provides step-by-step instructions for the following two methods:

Create a separate failover cluster running Windows Server 2012 R2 and then migrate to that cluster. In this scenario, you migrate from a multi-node cluster running Windows Server 2008 R2, Windows Server 2012, or Windows Server 2012 R2. For more information, see Migrate Between Two Multi-Node Clusters: Migration to Windows Server 2012 R2.

Perform an in-place migration involving only two servers. In this scenario, you start with a two-node cluster that is running Windows Server 2008 R2 SP1 or Windows Server 2012, remove a server from the cluster, and perform a clean installation (not an upgrade) of Windows Server 2012 R2 on that server. You use that server to create a new one-node failover cluster running Windows Server 2012 R2. Then you migrate the clustered services and applications from the old cluster node to the new cluster. Finally, you evict the remaining node from the old cluster, perform a clean installation of Windows Server 2012 R2 and add the Failover Clustering feature to that server, and then add the server to the new failover cluster. For more information, see In-Place Migration for a Two-Node Cluster: Migration to Windows Server 2012 R2.

We recommend that you test your migration in a test lab environment before you migrate a clustered service or application in your production environment. To perform a successful migration, you need to understand the requirements and dependencies of the service or application and the supporting roles and features in Windows Server in addition to the processes that this migration guide describes.

Failover Clustering Overview

Applies To: Windows Server 2012 R2, Windows Server 2012

This topic provides an overview of the Failover Clustering feature in Windows Server 2012 R2 and Windows Server 2012. For info about Failover Clustering in Windows Server 2016, see Failover Clustering in Windows Server 2016.

Failover clusters provide high availability and scalability to many server workloads. These include server applications such as Microsoft Exchange Server, Hyper-V, Microsoft SQL Server, and file servers. The server applications can run on physical servers or virtual machines. This topic describes the Failover Clustering feature and provides links to additional guidance about creating, configuring, and managing failover clusters that can scale to 64 physical nodes and to 8,000 virtual machines.


Feature description

A failover cluster is a group of independent computers that work together to increase the availability and scalability of clustered roles (formerly called clustered applications and services). The clustered servers (called nodes) are connected by physical cables and by software. If one or more of the cluster nodes fail, other nodes begin to provide service (a process known as failover). In addition, the clustered roles are proactively monitored to verify that they are working properly. If they are not working, they are restarted or moved to another node. Failover clusters also provide Cluster Shared Volume (CSV) functionality that provides a consistent, distributed namespace that clustered roles can use to access shared storage from all nodes. With the Failover Clustering feature, users experience a minimum of disruptions in service.

You can manage failover clusters by using the Failover Cluster Manager snap-in and the Failover Clustering Windows PowerShell cmdlets. You can also use the tools in File and Storage Services to manage file shares on file server clusters.

Practical applications

Highly available or continuously available file share storage for applications such as Microsoft SQL Server and Hyper-V virtual machines

Highly available clustered roles that run on physical servers or on virtual machines that are installed on servers running Hyper-V

New and changed functionality

New and changed functionality in Failover Clustering supports increased scalability, easier management, faster failover, and more flexible architectures for failover clusters.

For information about Failover Clustering functionality that is new or changed in Windows Server 2012 R2, see What’s New in Failover Clustering.

For information about Failover Clustering functionality that is new or changed in Windows Server 2012, see What’s New in Failover Clustering.

Hardware requirements

A failover cluster solution must meet the following hardware requirements:

Hardware components in the failover cluster solution must meet the qualifications for the Certified for Windows Server 2012 logo.

Storage must be attached to the nodes in the cluster, if the solution is using shared storage.

Device controllers or appropriate adapters for the storage can be Serial Attached SCSI (SAS), Fibre Channel, Fibre Channel over Ethernet (FCoE), or iSCSI.

The complete cluster configuration (servers, network, and storage) must pass all tests in the Validate a Configuration Wizard.

In the network infrastructure that connects your cluster nodes, avoid having single points of failure.

For more information about hardware compatibility, see the Windows Server Catalog.

For more information about the correct configuration of the servers, network, and storage for a failover cluster, see the following topics:

Software requirements

You can use the Failover Clustering feature on the Standard and Datacenter editions of Windows Server 2012 R2 and Windows Server 2012. This includes Server Core installations.

You must follow the hardware manufacturers’ recommendations for firmware updates and software updates. Usually, this means that the latest firmware and software updates have been applied. Occasionally, a manufacturer might recommend specific updates other than the latest updates.

Server Manager information

In Server Manager, use the Add Roles and Features Wizard to add the Failover Clustering feature. The Failover Clustering Tools include the Failover Cluster Manager snap-in, the Failover Clustering Windows PowerShell cmdlets, the Cluster-Aware Updating (CAU) user interface and Windows PowerShell cmdlets, and related tools. For general information about installing features, see Install or Uninstall Roles, Role Services, or Features.

To open Failover Cluster Manager in Server Manager, click Tools, and then click Failover Cluster Manager.
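The feature can also be added from Windows PowerShell instead of the wizard. The following is a minimal sketch, assuming an elevated session on the server that will become a cluster node:

```powershell
# Add the Failover Clustering feature together with its management tools
# (the Failover Cluster Manager snap-in and the Failover Clustering cmdlets).
Install-WindowsFeature -Name Failover-Clustering -IncludeManagementTools

# Confirm the install state of the feature.
Get-WindowsFeature -Name Failover-Clustering
```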

See also

The following table provides additional resources about the Failover Clustering feature in Windows Server 2012 R2 and Windows Server 2012. Additionally, see the content on failover clusters in the Windows Server 2008 R2 Technical Library.

Network Recommendations for a Hyper-V Cluster in Windows Server 2012

Applies To: Windows Server 2012

There are several different types of network traffic that you must consider and plan for when you deploy a highly available Hyper-V solution. You should design your network configuration with the following goals in mind:

To ensure network quality of service

To provide network redundancy

To isolate traffic to defined networks

To take advantage of Server Message Block (SMB) Multichannel, where applicable

This topic provides network configuration recommendations that are specific to a Hyper-V cluster that is running Windows Server 2012. It includes an overview of the different network traffic types, recommendations for how to isolate traffic, recommendations for features such as NIC Teaming, Quality of Service (QoS) and Virtual Machine Queue (VMQ), and a Windows PowerShell script that shows an example of converged networking, where the network traffic on a Hyper-V cluster is routed through one external virtual switch.

Windows Server 2012 supports the concept of converged networking, where different types of network traffic share the same Ethernet network infrastructure. In previous versions of Windows Server, the typical recommendation for a failover cluster was to dedicate separate physical network adapters to different traffic types. Improvements in Windows Server 2012, such as Hyper-V QoS and the ability to add virtual network adapters to the management operating system, enable you to consolidate the network traffic on fewer physical adapters. By combining these features with traffic isolation methods such as VLANs, you can isolate and control the network traffic.

If you use System Center Virtual Machine Manager (VMM) to create or manage Hyper-V clusters, you must use VMM to configure the network settings that are described in this topic.


Overview of different network traffic types

When you deploy a Hyper-V cluster, you must plan for several types of network traffic. The following table summarizes the different traffic types.

| Network Traffic Type | Description |
|---|---|
| Management | Provides connectivity between the server that is running Hyper-V and basic infrastructure functionality. Used to manage the Hyper-V management operating system and virtual machines. |
| Cluster | Used for inter-node cluster communication such as the cluster heartbeat and Cluster Shared Volumes (CSV) redirection. |
| Live migration | Used for virtual machine live migration. |
| Storage | Used for SMB traffic or for iSCSI traffic. |
| Replica traffic | Used for virtual machine replication through the Hyper-V Replica feature. |
| Virtual machine access | Used for virtual machine connectivity. Typically requires external network connectivity to service client requests. |

The following sections provide more detailed information about each network traffic type.

Management traffic

A management network provides connectivity between the operating system of the physical Hyper-V host (also known as the management operating system) and basic infrastructure functionality such as Active Directory Domain Services (AD DS), Domain Name System (DNS), and Windows Server Update Services (WSUS). It is also used for management of the server that is running Hyper-V and the virtual machines.

The management network must have connectivity between all required infrastructure, and to any location from which you want to manage the server.

Cluster traffic

A failover cluster monitors and communicates the cluster state between all members of the cluster. This communication is very important to maintain cluster health. If a cluster node does not communicate a regular health check (known as the cluster heartbeat), the cluster considers the node down and removes the node from cluster membership. The cluster then transfers the workload to another cluster node.

Inter-node cluster communication also includes traffic that is associated with CSV. For CSV, where all nodes of a cluster can access shared block-level storage simultaneously, the nodes in the cluster must communicate to orchestrate storage-related activities. Also, if a cluster node loses its direct connection to the underlying CSV storage, CSV has resiliency features which redirect the storage I/O over the network to another cluster node that can access the storage.

Live migration traffic

Live migration enables the transparent movement of running virtual machines from one Hyper-V host to another without a dropped network connection or perceived downtime.

We recommend that you use a dedicated network or VLAN for live migration traffic to ensure quality of service and for traffic isolation and security. Live migration traffic can saturate network links. This can cause other traffic to experience increased latency. The time it takes to fully migrate one or more virtual machines depends on the throughput of the live migration network. Therefore, you must ensure that you configure the appropriate quality of service for this traffic. To provide the best performance, live migration traffic is not encrypted.

You can designate multiple networks as live migration networks in a prioritized list. For example, you might have a fast (10 Gbps) migration network for nodes in the same cluster, and a slower (1 Gbps) migration network for cross-cluster migrations.

All Hyper-V hosts that can initiate or receive a live migration must have connectivity to a network that is configured to allow live migrations. Because live migration can occur between nodes in the same cluster, between nodes in different clusters, and between a cluster and a stand-alone Hyper-V host, make sure that all these servers can access a live migration-enabled network.

Storage traffic

For a virtual machine to be highly available, all members of the Hyper-V cluster must be able to access the virtual machine state. This includes the configuration state and the virtual hard disks. To meet this requirement, you must have shared storage.

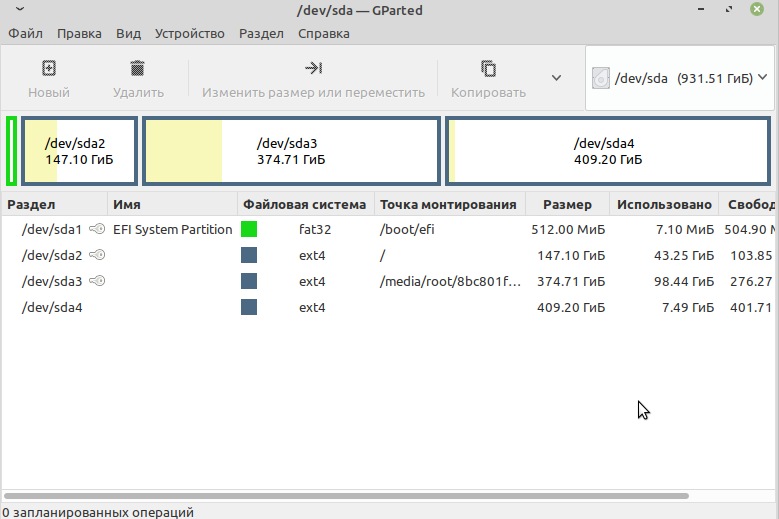

In Windows Server 2012, there are two ways that you can provide shared storage:

Shared block storage. Shared block storage options include Fibre Channel, Fibre Channel over Ethernet (FCoE), iSCSI, and shared Serial Attached SCSI (SAS).

File-based storage. Remote file-based storage is provided through SMB 3.0.

SMB 3.0 includes new functionality known as SMB Multichannel. SMB Multichannel automatically detects and uses multiple network interfaces to deliver high performance and highly reliable storage connectivity.

By default, SMB Multichannel is enabled, and requires no additional configuration. You should use at least two network adapters of the same type and speed so that SMB Multichannel is in effect. Network adapters that support RDMA (Remote Direct Memory Access) are recommended but not required.
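To check whether SMB Multichannel is actually in effect, you can inspect the interfaces and connections from the Hyper-V host. This is a minimal sketch, assuming it is run on the SMB client (the Hyper-V host) after some traffic to the file server has occurred:

```powershell
# List the client-side interfaces that SMB can use, including their
# RSS and RDMA capability and their link speed.
Get-SmbClientNetworkInterface

# Show the connections that SMB Multichannel has established to file servers.
Get-SmbMultichannelConnection
```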

SMB 3.0 also automatically discovers and takes advantage of available hardware offloads, such as RDMA. A feature known as SMB Direct supports the use of network adapters that have RDMA capability. SMB Direct provides the best performance possible while also reducing file server and client overhead.

The NIC Teaming feature is incompatible with RDMA-capable network adapters. Therefore, if you intend to use the RDMA capabilities of the network adapter, do not team those adapters.

Both iSCSI and SMB use the network to connect the storage to cluster members. Because reliable storage connectivity and performance is very important for Hyper-V virtual machines, we recommend that you use multiple networks (physical or logical) to ensure that these requirements are achieved.

Replica traffic

Hyper-V Replica provides asynchronous replication of Hyper-V virtual machines between two hosting servers or Hyper-V clusters. Replica traffic occurs between the primary and Replica sites.

Hyper-V Replica automatically discovers and uses available network interfaces to transmit replication traffic. To throttle and control the replica traffic bandwidth, you can define QoS policies with minimum bandwidth weight.

If you use certificate-based authentication, Hyper-V Replica encrypts the traffic. If you use Kerberos-based authentication, traffic is not encrypted.

Virtual machine access traffic

Most virtual machines require some form of network or Internet connectivity. For example, workloads that are running on virtual machines typically require external network connectivity to service client requests. This can include tenant access in a hosted cloud implementation. Because multiple subclasses of traffic may exist, such as traffic that is internal to the datacenter and traffic that is external (for example, to a computer outside the datacenter or to the Internet), one or more networks are required for these virtual machines to communicate.

To separate virtual machine traffic from the management operating system, we recommend that you use VLANs which are not exposed to the management operating system.

How to isolate the network traffic on a Hyper-V cluster

To provide the most consistent performance and functionality, and to improve network security, we recommend that you isolate the different types of network traffic.

Note that if you want to have a physical or logical network that is dedicated to a specific traffic type, you must assign each physical or virtual network adapter to a unique subnet. For each cluster node, Failover Clustering recognizes only one IP address per subnet.

Isolate traffic on the management network

We recommend that you use a firewall or IPsec encryption, or both, to isolate management traffic. In addition, you can use auditing to ensure that only defined and allowed communication is transmitted through the management network.

Isolate traffic on the cluster network

To isolate inter-node cluster traffic, you can configure a network to either allow cluster network communication or not to allow cluster network communication. For a network that allows cluster network communication, you can also configure whether to allow clients to connect through the network. (This includes client and management operating system access.)

A failover cluster can use any network that allows cluster network communication for cluster monitoring, state communication, and for CSV-related communication.

To configure a network to allow or not to allow cluster network communication, you can use Failover Cluster Manager or Windows PowerShell. To use Failover Cluster Manager, click Networks in the navigation tree. In the Networks pane, right-click a network, and then click Properties.

Figure 1. Failover Cluster Manager network properties

The following Windows PowerShell example configures a network named Management Network to allow cluster and client connectivity.
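A minimal sketch of that setting, assuming the FailoverClusters module is available on the node and that a cluster network with exactly this name exists:

```powershell
# Role = 3 allows both cluster network communication and client connectivity.
(Get-ClusterNetwork "Management Network").Role = 3

# Review the resulting role of every cluster network.
Get-ClusterNetwork | Format-Table Name, Role, Address -AutoSize
```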

The Role property has the following possible values.

| Value | Network Setting |
|---|---|
| 0 | Do not allow cluster network communication |
| 1 | Allow cluster network communication only |
| 3 | Allow cluster network communication and client connectivity |

The following table shows the recommended settings for each type of network traffic. Virtual machine access traffic is not listed because these networks should be isolated from the management operating system by using VLANs that are not exposed to the host. Therefore, virtual machine networks should not appear in Failover Cluster Manager as cluster networks.

| Network Type | Recommended Setting |
|---|---|
| Management | Both of the following: Allow cluster network communication on this network; Allow clients to connect through this network |
| Cluster | Allow cluster network communication on this network. Note: Clear the Allow clients to connect through this network check box. |
| Live migration | Allow cluster network communication on this network. Note: Clear the Allow clients to connect through this network check box. |
| Storage | Do not allow cluster network communication on this network |
| Replica traffic | Both of the following: Allow cluster network communication on this network; Allow clients to connect through this network |

Isolate traffic on the live migration network

By default, live migration traffic uses the cluster network topology to discover available networks and to establish priority. However, you can manually configure live migration preferences to isolate live migration traffic to only the networks that you define. To do this, you can use Failover Cluster Manager or Windows PowerShell. To use Failover Cluster Manager, in the navigation tree, right-click Networks, and then click Live Migration Settings.

Figure 2. Live migration settings in Failover Cluster Manager

The following Windows PowerShell example enables live migration traffic only on a network that is named Migration_Network.
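One way to express this is to exclude every other cluster network from live migration. The following is a sketch, assuming a cluster network named Migration_Network exists and that the command is run on a cluster node:

```powershell
# Build a semicolon-separated list of the IDs of all cluster networks
# except Migration_Network.
$excluded = [String]::Join(";", (Get-ClusterNetwork |
    Where-Object { $_.Name -ne "Migration_Network" }).ID)

# Exclude those networks from live migration for the Virtual Machine resource type.
Get-ClusterResourceType -Name "Virtual Machine" |
    Set-ClusterParameter -Name MigrationExcludeNetworks -Value $excluded
```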

Isolate traffic on the storage network

To isolate SMB storage traffic, you can use Windows PowerShell to set SMB Multichannel constraints. SMB Multichannel constraints restrict SMB communication between a given file server and the Hyper-V host to one or more defined network interfaces.

For example, the following Windows PowerShell command sets a constraint for SMB traffic from the file server FileServer1 to the network interfaces SMB1, SMB2, SMB3, and SMB4 on the Hyper-V host from which you run this command.
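A minimal sketch of such a constraint, assuming the Hyper-V host has network interfaces with the aliases SMB1 through SMB4:

```powershell
# Restrict SMB traffic to FileServer1 to the four named interfaces on this host.
New-SmbMultichannelConstraint -ServerName "FileServer1" -InterfaceAlias "SMB1", "SMB2", "SMB3", "SMB4"

# List the constraints that are currently defined on this host.
Get-SmbMultichannelConstraint
```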

To isolate iSCSI traffic, configure the iSCSI target with interfaces on a dedicated network (logical or physical). Use the corresponding interfaces on the cluster nodes when you configure the iSCSI initiator.

Isolate traffic for replication

To isolate Hyper-V Replica traffic, we recommend that you use a different subnet for the primary and Replica sites.

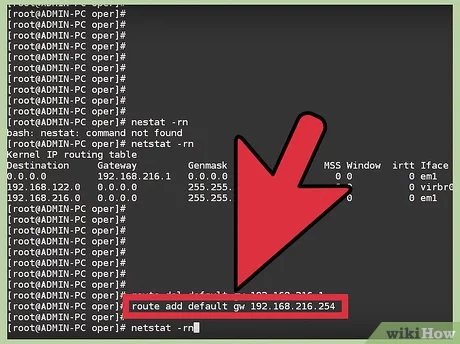

If you want to isolate the replica traffic to a particular network adapter, you can define a persistent static route which redirects the network traffic to the defined network adapter. To specify a static route, use the following command:

route add <destination> mask <subnet mask> <gateway> if <interface> -p

For example, to add a static route to the 10.1.17.0 network (example network of the Replica site) that uses a subnet mask of 255.255.255.0, a gateway of 10.0.17.1 (example IP address of the primary site), where the interface number for the adapter that you want to dedicate to replica traffic is 8, run the following command:

route add 10.1.17.0 mask 255.255.255.0 10.0.17.1 if 8 -p

NIC Teaming (LBFO) recommendations

We recommend that you team physical network adapters in the management operating system. This provides bandwidth aggregation and network traffic failover if a network hardware failure or outage occurs.

The NIC Teaming feature, also known as load balancing and failover (LBFO), provides two basic sets of algorithms for teaming.

Switch-dependent modes. Requires the switch to participate in the teaming process. Typically requires all the network adapters in the team to be connected to the same switch.

Switch-independent modes. Does not require the switch to participate in the teaming process. Although not required, team network adapters can be connected to different switches.

Both modes provide for bandwidth aggregation and traffic failover if a network adapter failure or network disconnection occurs. However, in most cases only switch-independent teaming provides traffic failover for a switch failure.

NIC Teaming also provides a traffic distribution algorithm that is optimized for Hyper-V workloads. This algorithm is referred to as the Hyper-V port load balancing mode. This mode distributes the traffic based on the MAC address of the virtual network adapters. The algorithm uses round robin as the load-balancing mechanism. For example, on a server that has two teamed physical network adapters and four virtual network adapters, the first and third virtual network adapter will use the first physical adapter, and the second and fourth virtual network adapter will use the second physical adapter. Hyper-V port mode also enables the use of hardware offloads such as virtual machine queue (VMQ) which reduces CPU overhead for networking operations.

Recommendations

For a clustered Hyper-V deployment, we recommend that you use the following settings when you configure the additional properties of a team.

| Property Name | Recommended Setting |
|---|---|
| Teaming mode | Switch Independent (the default setting) |
| Load balancing mode | Hyper-V Port |

NIC teaming will effectively disable the RDMA capability of the network adapters. If you want to use SMB Direct and the RDMA capability of the network adapters, you should not use NIC Teaming.
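The recommended team settings can be applied when the team is created. This is a sketch only; the team name HostTeam and the adapter names NIC1 and NIC2 are hypothetical and must be replaced with the names on your host:

```powershell
# Create a switch-independent team that uses the Hyper-V Port load balancing mode.
# "HostTeam", "NIC1", and "NIC2" are placeholder names.
New-NetLbfoTeam -Name "HostTeam" -TeamMembers "NIC1", "NIC2" `
    -TeamingMode SwitchIndependent -LoadBalancingAlgorithm HyperVPort

# Verify the teaming mode and load balancing algorithm.
Get-NetLbfoTeam -Name "HostTeam"
```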

For more information about the NIC Teaming modes and how to configure NIC Teaming settings, see Windows Server 2012 NIC Teaming (LBFO) Deployment and Management and NIC Teaming Overview.

Quality of Service (QoS) recommendations

You can use QoS technologies that are available in Windows Server 2012 to meet the service requirements of a workload or an application. QoS provides the following:

Measures network bandwidth, detects changing network conditions (such as congestion or availability of bandwidth), and prioritizes or throttles network traffic.

Enables you to converge multiple types of network traffic on a single adapter.

Includes a minimum bandwidth feature which guarantees a certain amount of bandwidth to a given type of traffic.

We recommend that you configure appropriate Hyper-V QoS on the virtual switch to ensure that network requirements are met for all appropriate types of network traffic on the Hyper-V cluster.

You can use QoS to control outbound traffic, but not the inbound traffic. For example, with Hyper-V Replica, you can use QoS to control outbound traffic (from the primary server), but not the inbound traffic (from the Replica server).

Recommendations

For a Hyper-V cluster, we recommend that you configure Hyper-V QoS that applies to the virtual switch. When you configure QoS, do the following:

Configure minimum bandwidth in weight mode instead of in bits per second. Minimum bandwidth specified by weight is more flexible and it is compatible with other features, such as live migration and NIC Teaming. For more information, see the MinimumBandwidthMode parameter in New-VMSwitch.

Enable and configure QoS for all virtual network adapters. Assign a weight to all virtual adapters. For more information, see Set-VMNetworkAdapter. To make sure that all virtual adapters have a weight, configure the DefaultFlowMinimumBandwidthWeight parameter on the virtual switch to a reasonable value. For more information, see Set-VMSwitch.
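The two recommendations above might be applied as follows. This is a sketch under stated assumptions: the switch, team, adapter, and virtual machine names are hypothetical, and the weight values are taken from the generic values that this topic suggests rather than from a measured workload.

```powershell
# Create an external virtual switch with minimum bandwidth in weight mode.
# The bandwidth mode is chosen when the switch is created.
New-VMSwitch -Name 'TeamSwitch' -NetAdapterName 'HostTeam' `
    -MinimumBandwidthMode Weight -AllowManagementOS $false

# Set the weight for traffic from adapters that have no explicit weight.
Set-VMSwitch -Name 'TeamSwitch' -DefaultFlowMinimumBandwidthWeight 0

# Assign a weight to a virtual adapter in the management operating system.
Add-VMNetworkAdapter -ManagementOS -Name 'LiveMigration' -SwitchName 'TeamSwitch'
Set-VMNetworkAdapter -ManagementOS -Name 'LiveMigration' -MinimumBandwidthWeight 40

# Assign a weight to the adapters of a virtual machine (hypothetical VM name).
Set-VMNetworkAdapter -VMName 'VM01' -MinimumBandwidthWeight 3
```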

The following table recommends some generic weight values. You can assign a value from 1 to 100. For guidelines to consider when you assign weight values, see Guidelines for using Minimum Bandwidth.

| Network Classification | Weight |
|---|---|
| Default weight | 0 |
| Virtual machine access | 1, 3 or 5 (low, medium and high-throughput virtual machines) |
| Cluster | 10 |
| Management | 10 |
| Replica traffic | 10 |
| Live migration | 40 |
| Storage | 40 |
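Weights are relative, so it can help to see what share of the link each classification would be guaranteed when every flow is busy at the same time. The following sketch assumes that the guaranteed share is approximately the weight divided by the sum of all assigned weights, and it assumes a single medium-throughput virtual machine (weight 3). It only performs arithmetic and does not change any configuration.

```powershell
# Weights from the table above; one medium-throughput VM is assumed.
$weights = [ordered]@{
    'Virtual machine access' = 3
    'Cluster'                = 10
    'Management'             = 10
    'Replica traffic'        = 10
    'Live migration'         = 40
    'Storage'                = 40
}

$total = ($weights.Values | Measure-Object -Sum).Sum

foreach ($name in $weights.Keys) {
    # Approximate guaranteed share under full contention.
    $share = 100 * $weights[$name] / $total
    '{0,-24} weight {1,3}   approx. {2:N1}%' -f $name, $weights[$name], $share
}
```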

Virtual machine queue (VMQ) recommendations

Virtual machine queue (VMQ) is a feature that is available to computers that have VMQ-capable network hardware. VMQ uses hardware packet filtering to deliver packet data from an external virtual network directly to virtual network adapters. This reduces the overhead of routing packets. When VMQ is enabled, a dedicated queue is established on the physical network adapter for each virtual network adapter that has requested a queue.

Not all physical network adapters support VMQ. Those that do support VMQ have a fixed number of queues available, and that number varies by adapter. To determine whether a network adapter supports VMQ, and how many queues it supports, use the Get-NetAdapterVmq cmdlet.
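For example, the following commands list the VMQ state and queue count for each physical adapter, and then the queues that are currently allocated. The property selection is a suggestion; the exact output depends on the network adapter and driver.

```powershell
# Show whether VMQ is enabled and how many receive queues each adapter offers.
Get-NetAdapterVmq |
    Select-Object Name, Enabled, NumberOfReceiveQueues, BaseVmqProcessor, MaxProcessors

# Show the queues that are currently allocated on VMQ-capable adapters.
Get-NetAdapterVmqQueue
```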

You can assign virtual machine queues to any virtual network adapter. This includes virtual network adapters that are exposed to the management operating system. Queues are assigned according to a weight value, on a first-come, first-served basis. By default, all virtual adapters have a weight of 100.

Recommendations

We recommend that you increase the VMQ weight for interfaces with heavy inbound traffic, such as storage and live migration networks. To do this, use the Set-VMNetworkAdapter Windows PowerShell cmdlet.
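A minimal sketch of adjusting VMQ weights is shown below. The adapter names and weight values are hypothetical. The sketch also assumes that the VmqWeight parameter accepts values from 0 through 100, with 0 disabling VMQ for the adapter; under that assumption, favoring one interface means keeping its weight high relative to the other interfaces.

```powershell
# Review the current VMQ weight of each management OS virtual adapter.
Get-VMNetworkAdapter -ManagementOS | Select-Object Name, VmqWeight

# Hypothetical adapter names and values: keep the heavy inbound interfaces
# at a higher relative weight than lighter interfaces.
Set-VMNetworkAdapter -ManagementOS -Name 'LiveMigration' -VmqWeight 100
Set-VMNetworkAdapter -ManagementOS -Name 'SMB1' -VmqWeight 100
Set-VMNetworkAdapter -ManagementOS -Name 'Management' -VmqWeight 80
```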

Example of converged networking: routing traffic through one Hyper-V virtual switch

The following Windows PowerShell script shows an example of how you can route traffic on a Hyper-V cluster through one Hyper-V external virtual switch. The example uses two physical 10-gigabit network adapters that are teamed by using the NIC Teaming feature. The script configures a Hyper-V cluster node with a management interface, a live migration interface, a cluster interface, and four SMB interfaces. After the script, there is more information about how to add an interface for Hyper-V Replica traffic. The following diagram shows the example network configuration.

Figure 3. Example Hyper-V cluster network configuration

The example also configures network isolation, which keeps cluster traffic off the management interface, restricts SMB traffic to the SMB interfaces, and restricts live migration traffic to the live migration interface.
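A condensed sketch of such a configuration might look like the following. It is not a complete deployment script: every adapter name, VLAN ID, and server name is a placeholder, the 40-point storage weight is assumed to be split evenly across the four SMB interfaces, IP addressing is omitted, and the cluster network name 'Migration_Network' is hypothetical. Cluster network role settings are not shown.

```powershell
# Sketch only. All names, VLAN IDs, and server names are placeholders.
$teamName   = 'PhysicalTeam'
$switchName = 'TeamSwitch'

# Team the two physical adapters (switch independent, Hyper-V port mode).
New-NetLbfoTeam -Name $teamName -TeamMembers '10GBPort1', '10GBPort2' `
    -TeamingMode SwitchIndependent -LoadBalancingAlgorithm HyperVPort

# Create the external virtual switch with QoS in weight mode.
New-VMSwitch -Name $switchName -NetAdapterName $teamName `
    -MinimumBandwidthMode Weight -AllowManagementOS $false
Set-VMSwitch -Name $switchName -DefaultFlowMinimumBandwidthWeight 0

# Management OS virtual adapters: name, VLAN ID, minimum bandwidth weight.
$adapters = @(
    @{ Name = 'Management'; Vlan = 10; Weight = 10 }
    @{ Name = 'Cluster';    Vlan = 11; Weight = 10 }
    @{ Name = 'Migration';  Vlan = 12; Weight = 40 }
    @{ Name = 'SMB1';       Vlan = 13; Weight = 10 }
    @{ Name = 'SMB2';       Vlan = 14; Weight = 10 }
    @{ Name = 'SMB3';       Vlan = 15; Weight = 10 }
    @{ Name = 'SMB4';       Vlan = 16; Weight = 10 }
)

foreach ($a in $adapters) {
    # Create the adapter, tag its VLAN, and assign its QoS weight.
    Add-VMNetworkAdapter -ManagementOS -Name $a.Name -SwitchName $switchName
    Set-VMNetworkAdapterVlan -ManagementOS -VMNetworkAdapterName $a.Name `
        -Access -VlanId $a.Vlan
    Set-VMNetworkAdapter -ManagementOS -Name $a.Name `
        -MinimumBandwidthWeight $a.Weight
}

# Restrict SMB traffic to the SMB interfaces for a given file server.
New-SmbMultichannelConstraint -ServerName 'FileServer1' -InterfaceAlias `
    'vEthernet (SMB1)', 'vEthernet (SMB2)', 'vEthernet (SMB3)', 'vEthernet (SMB4)'

# Restrict live migration to one cluster network by excluding all others.
$excluded = (Get-ClusterNetwork |
    Where-Object { $_.Name -ne 'Migration_Network' }).ID
Get-ClusterResourceType -Name 'Virtual Machine' |
    Set-ClusterParameter -Name MigrationExcludeNetworks `
        -Value ([string]::Join(';', $excluded))
```

Driving the adapter creation from a small table keeps the VLAN and weight assignments in one place, which makes it easier to compare them with the recommended weights.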

Hyper-V Replica considerations

If you also use Hyper-V Replica in your environment, you can add another virtual network adapter to the management operating system for replica traffic. For example:
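The following sketch adds such an adapter. The adapter name, switch name, and VLAN ID are hypothetical, and the weight of 10 follows the generic value suggested for replica traffic in this topic.

```powershell
# Names and the VLAN ID are placeholders for illustration.
Add-VMNetworkAdapter -ManagementOS -Name 'Replica' -SwitchName 'TeamSwitch'
Set-VMNetworkAdapterVlan -ManagementOS -VMNetworkAdapterName 'Replica' `
    -Access -VlanId 17
Set-VMNetworkAdapter -ManagementOS -Name 'Replica' -MinimumBandwidthWeight 10
```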

If you are instead using policy-based QoS, where you can throttle outgoing traffic regardless of the interface on which it is sent, you can use either of the following methods to throttle Hyper-V Replica traffic:

Create a QoS policy that is based on the destination port. In the following example, the network listener on the Replica server or cluster has been configured to use port 8080 to receive replication traffic.
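A destination-port policy of this kind could be sketched with the New-NetQosPolicy cmdlet as shown below. The policy name and the throttle rate are assumptions chosen for illustration; pick a rate that suits your WAN link.

```powershell
# Throttle outbound traffic sent to destination port 8080.
# The rate is in bits per second; the value below is illustrative only.
New-NetQosPolicy -Name 'Replica traffic to 8080' `
    -IPDstPortMatchCondition 8080 `
    -ThrottleRateActionBitsPerSecond 100000000
```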

Appendix: Encryption

Cluster traffic

By default, cluster communication is not encrypted. You can enable encryption if you want. However, realize that there is performance overhead that is associated with encryption. To enable encryption, you can use the following Windows PowerShell command to set the security level for the cluster.
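As a minimal sketch, the security level can be read and set through the SecurityLevel property of the cluster object. Run the commands on a cluster node; the value 2 corresponds to the Encrypted level in the table of security level values.

```powershell
# Show the current security level of the local cluster.
(Get-Cluster).SecurityLevel

# Set the security level to 2 (Encrypted).
(Get-Cluster).SecurityLevel = 2
```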

The following table shows the different security level values.

| Security Description | Value |
|---|---|
| Clear text | 0 |
| Signed (default) | 1 |
| Encrypted | 2 |

Live migration traffic

Live migration traffic is not encrypted. You can enable IPsec or other network layer encryption technologies if you want. However, realize that encryption technologies typically affect performance.

SMB traffic

By default, SMB traffic is not encrypted. Therefore, we recommend that you use a dedicated network (physical or logical) or use encryption. For SMB traffic, you can use SMB encryption, or layer-2 or layer-3 encryption. SMB encryption is the preferred method.
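SMB encryption can be turned on for a single share or for the whole file server. The following sketch shows both options; the share name is a placeholder, and you would normally choose one of the two commands.

```powershell
# Option 1: require encryption for one share (hypothetical share name).
Set-SmbShare -Name 'VMStore1' -EncryptData $true -Force

# Option 2: require encryption for every share on the file server.
Set-SmbServerConfiguration -EncryptData $true -Force
```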

Replica traffic

If you use Kerberos-based authentication, Hyper-V Replica traffic is not encrypted. We strongly recommend that you encrypt replication traffic that transits public networks over the WAN or the Internet. We recommend Secure Sockets Layer (SSL) encryption as the encryption method. You can also use IPsec. However, realize that using IPsec may significantly affect performance.
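One way to use SSL encryption is to configure the Replica server for certificate-based authentication, which sends replication traffic over HTTPS. The following is a sketch under stated assumptions: the thumbprint is a placeholder for a certificate that is already installed on the Replica server, port 443 is an example value, and authorization entries for the primary servers are not shown.

```powershell
# Placeholder: thumbprint of a certificate installed on the Replica server.
$thumbprint = '<certificate thumbprint>'

# Enable the server as a Replica server that uses certificate-based
# authentication (HTTPS) on the chosen port.
Set-VMReplicationServer -ReplicationEnabled $true `
    -AllowedAuthenticationType Certificate `
    -CertificateAuthenticationPort 443 `
    -CertificateThumbprint $thumbprint
```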
