- Apache Spark install Linux

- Latest Preview Release

- Link with Spark

- Installing with PyPI

- Release Notes for Stable Releases

- Archived Releases

- How to Install and Setup Apache Spark on Ubuntu/Debian

- Install Java and Scala in Ubuntu

- Install Apache Spark in Ubuntu

- Configure Environment Variables for Spark

- Start Apache Spark in Ubuntu

- How to Install Apache Spark on Ubuntu 20.04 LTS

- Install Apache Spark on Ubuntu 20.04 LTS Focal Fossa

- Step 1. First, make sure all your system packages are up to date by running the following apt commands in the terminal.

- Step 2. Install Java.

- Step 3. Download and install Apache Spark.

- Step 4. Start the standalone Spark master server.

- Spark Installation on Linux Ubuntu

- Prerequisites:

- Java Installation On Ubuntu

- Python Installation On Ubuntu

- Apache Spark Installation on Ubuntu

- Spark Environment Variables

- Test Spark Installation on Ubuntu

- Spark Shell

- Spark Web UI

- Spark History Server

- Conclusion

Apache Spark install Linux

Choose a Spark release and a package type on the download page, then verify the download using the release signatures, checksums, and the project release KEYS.

Note that Spark 2.x is pre-built with Scala 2.11, except version 2.4.2, which is pre-built with Scala 2.12. Spark 3.0+ is pre-built with Scala 2.12.

Latest Preview Release

Preview releases, as the name suggests, are releases for previewing upcoming features. Unlike nightly packages, preview releases have been audited by the project’s management committee to satisfy the legal requirements of Apache Software Foundation’s release policy. Preview releases are not meant to be functional, i.e. they can and highly likely will contain critical bugs or documentation errors. The latest preview release is Spark 3.0.0-preview2, published on Dec 23, 2019.

Link with Spark

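Spark artifacts are hosted in Maven Central. You can add a Maven dependency using the groupId org.apache.spark, an artifactId such as spark-core_2.12 (the suffix is the Scala version), and the version of the release you chose.

Installing with PyPI

PySpark is now available on PyPI. To install it, run pip install pyspark.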

Release Notes for Stable Releases

Archived Releases

As new Spark releases come out for each development stream, previous ones will be archived, but they are still available at Spark release archives.

NOTE: Previous releases of Spark may be affected by security issues. Please consult the Security page for a list of known issues that may affect the version you download before deciding to use it.

How to Install and Setup Apache Spark on Ubuntu/Debian

Apache Spark is an open-source distributed computing framework designed for fast data processing. It is an in-memory engine, meaning data is processed in memory wherever possible rather than being written to disk between steps.

Spark provides APIs for streaming, graph processing, SQL, and machine learning (MLlib). It supports Java, Python, Scala, and R. Spark is most often installed on Hadoop clusters, but you can also install and configure Spark in standalone mode.

In this article, we will see how to install Apache Spark on Debian and Ubuntu-based distributions.

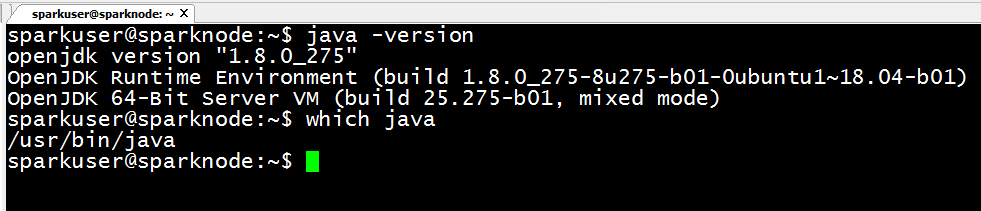

Install Java and Scala in Ubuntu

To install Apache Spark on Ubuntu, you need Java on your machine, and this article installs Scala as well. The pre-built Spark packages bundle the Scala libraries they need, so a separate Scala install only matters if you want the Scala tools themselves. Many distributions come with Java installed, and you can verify this by running java -version.

If the command reports that Java is not found, you can follow our article on how to install Java on Ubuntu, or simply install it from the distribution's repositories, for example with sudo apt install default-jdk.
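The Java check described above can be sketched as a small script. This is a minimal example, not the article's original commands: the `JAVA_STATUS` variable is a name chosen for illustration, and `default-jdk` is assumed to be the package you want from the Ubuntu or Debian repositories.

```shell
# Check whether a Java runtime is on the PATH before installing Spark.
if command -v java >/dev/null 2>&1; then
    JAVA_STATUS="installed"
    # java -version writes to stderr, so redirect it to read the first line
    java -version 2>&1 | head -n 1
else
    JAVA_STATUS="missing"
    echo "Java not found; on Ubuntu or Debian try: sudo apt install default-jdk"
fi
echo "Java is $JAVA_STATUS"
```

The script only reports what it finds; it prints the install command instead of running it, so it is safe to run without root.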

Next, you can install Scala from the apt repository: apt search scala finds the package and sudo apt install scala installs it.

To verify the installation of Scala, run scala -version.

Install Apache Spark in Ubuntu

Now go to the official Apache Spark download page and grab the latest version (3.1.1 at the time of writing). Alternatively, you can use the wget command to download the file directly in the terminal.
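As a rough sketch of the wget route, the download URL can be assembled from the version and package type. The `hadoop3.2` package type and the use of the Apache archive host are assumptions made for this example; the variable names are illustrative. Check the download page for the link that matches your release.

```shell
SPARK_VERSION="3.1.1"        # release named in the article
HADOOP_PROFILE="hadoop3.2"   # assumption: one of the pre-built package types for 3.1.1
SPARK_PACKAGE="spark-${SPARK_VERSION}-bin-${HADOOP_PROFILE}"
SPARK_URL="https://archive.apache.org/dist/spark/spark-${SPARK_VERSION}/${SPARK_PACKAGE}.tgz"

# Print the command instead of running it, so the URL can be checked first.
echo "wget $SPARK_URL"
```

Copy the printed command into the terminal once the URL looks right.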

Now open your terminal, switch to the directory that contains the downloaded file, and extract the .tgz archive with tar -xzf.

Finally, move the extracted Spark directory to the /opt directory (for example, as /opt/spark).
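The extract-and-move step can be wrapped in a small helper. This is a sketch under assumptions: `install_spark_archive` is a hypothetical function name, and this variant extracts straight into the target directory instead of extracting first and moving afterwards. Writing to /opt requires root, so either run it from a root shell or pick a directory you own.

```shell
# Extract a Spark tarball into a target directory.
# Usage: install_spark_archive <archive.tgz> <target-dir>
install_spark_archive() {
    archive="$1"
    target="$2"
    mkdir -p "$target"
    # --strip-components=1 drops the top-level spark-x.y.z-bin-... folder,
    # so bin/, sbin/ and conf/ end up directly under the target.
    tar -xzf "$archive" -C "$target" --strip-components=1
}

# Example (paths are illustrative):
# install_spark_archive ~/Downloads/spark-3.1.1-bin-hadoop3.2.tgz "$HOME/spark"
```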

Configure Environment Variables for Spark

Before starting Spark, you have to set a few environment variables in your .profile file.

To make these new variables available in the current shell and to Apache Spark, you must also reload the file by running source ~/.profile so that the changes take effect.
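A minimal sketch of the lines to append to .profile follows. It assumes Spark was moved to /opt/spark; adjust the path if you placed it elsewhere.

```shell
# Lines to append to ~/.profile (assumes Spark lives in /opt/spark)
export SPARK_HOME=/opt/spark
# Add both the user commands (bin) and the service scripts (sbin) to PATH
export PATH="$PATH:$SPARK_HOME/bin:$SPARK_HOME/sbin"
# Afterwards, reload the file with: source ~/.profile
```

Putting sbin on the PATH is a convenience so the start and stop scripts can be called by name.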

All the Spark scripts that start and stop services are in the sbin folder.

Start Apache Spark in Ubuntu

Run start-master.sh to start the Spark master service, then start a worker (called a slave in older releases) and point it at the master.
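Starting both services might look like the sketch below. It assumes Spark is under /opt/spark unless `SPARK_HOME` is already set, and that the master uses its default port 7077. Recent releases ship start-worker.sh while older ones only have start-slave.sh, so the script uses whichever is present.

```shell
SPARK_HOME="${SPARK_HOME:-/opt/spark}"
# Fall back through simpler lookups if the fully qualified name is unavailable
HOST_NAME="$(hostname -f 2>/dev/null || hostname 2>/dev/null || echo localhost)"
MASTER_URL="spark://${HOST_NAME}:7077"   # 7077 is the standalone master's default port

if [ -x "$SPARK_HOME/sbin/start-master.sh" ]; then
    "$SPARK_HOME/sbin/start-master.sh"
    # Prefer the newer script name when it exists
    if [ -x "$SPARK_HOME/sbin/start-worker.sh" ]; then
        "$SPARK_HOME/sbin/start-worker.sh" "$MASTER_URL"
    else
        "$SPARK_HOME/sbin/start-slave.sh" "$MASTER_URL"
    fi
else
    echo "No Spark scripts under $SPARK_HOME/sbin; check SPARK_HOME"
fi
```

If the worker does not register, compare `MASTER_URL` with the URL shown at the top of the master's web page.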

Once the services are running, open the master web page in a browser (http://localhost:8080 by default). The page should show that the master and the worker have started.

You can also check that the installation works by launching spark-shell.

That’s it for this article. We will catch you with another interesting article very soon.

How to Install Apache Spark on Ubuntu 20.04 LTS

In this guide, we will show you how to install Apache Spark on Ubuntu 20.04 LTS. For those who did not know, Apache Spark is a fast, general-purpose cluster computing system. It provides high-level APIs in Java, Scala, and Python, as well as an optimized engine that supports general execution graphs. It also supports a rich set of higher-level tools, including Spark SQL for SQL and structured data processing, MLlib for machine learning, GraphX for graph processing, and Spark Streaming.

This article assumes you have at least basic knowledge of Linux, know how to use the shell, and, most importantly, host your site on your own VPS. The installation is quite simple and assumes you are running as the root account; if not, you may need to add 'sudo' to the commands to get root privileges. I will show you the step-by-step installation of Apache Spark on a 20.04 LTS (Focal Fossa) server. You can follow the same instructions for Ubuntu 18.04, 16.04, and any other Debian-based distribution such as Linux Mint.

Install Apache Spark on Ubuntu 20.04 LTS Focal Fossa

Step 1. First, make sure all your system packages are up to date by running the following apt commands in the terminal.

Step 2. Install Java.

Apache Spark requires Java to run, so let's make sure Java is installed on our Ubuntu system.

Then check the Java version by running java -version.

Step 3. Download and install Apache Spark.

Download the latest version of Apache Spark from the downloads page.

Then configure the Apache Spark environment.

Next, add lines to the end of your .bashrc file so that PATH includes the Spark executables.

Step 4. Start the standalone Spark master server.

Now that you have finished configuring your environment for Spark, you can start the master server with start-master.sh.

To view the Spark web UI, open a web browser and go to localhost on port 8080 (http://localhost:8080).
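On a server without a browser, the same check can be made from the terminal. The sketch below assumes curl is installed; `check_ui` is a hypothetical helper name, and the five-second timeout is an arbitrary choice.

```shell
# Print "up" if the URL answers with a successful HTTP status, otherwise "down".
check_ui() {
    url="$1"
    if command -v curl >/dev/null 2>&1 \
        && curl -fs -o /dev/null --max-time 5 "$url"; then
        echo "up"
    else
        echo "down"
    fi
}

# 8080 is the master web UI port mentioned in the text
check_ui "http://localhost:8080"
```

A result of "down" can also mean curl is missing, so check that first if the master is known to be running.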

In this single-server standalone setup, we will start one worker (slave) server alongside the master server. The start-slave.sh command starts a Spark worker process.

Now that the worker is up and running, if you reload the Spark master web UI, you should see it in the list.

Once the master and worker are configured and running, check that the Spark shell works by launching spark-shell.

Congratulations! You have successfully installed Apache Spark. Thank you for using this guide to install Apache Spark on Ubuntu 20.04 (Focal Fossa). For additional help or useful information, we recommend visiting the official Apache Spark website.

Spark Installation on Linux Ubuntu

This article walks through installing Apache Spark on an Ubuntu server. Largely the same steps apply to other Linux distributions such as CentOS and Debian. In practice, most Spark applications run on Linux, so it is worth knowing how to install and run Spark on a Linux system such as Ubuntu Server.

Although the examples use Ubuntu, these are the steps I followed to set up my own Apache Spark cluster on an Ubuntu server.

Prerequisites:

- Ubuntu Server running

- Root access to Ubuntu server

- If you want to run Apache Spark on Hadoop and YARN, install and set up a Hadoop cluster and configure YARN on it before proceeding with this article.

If you just want to run Spark in standalone mode, proceed with this article.

Java Installation On Ubuntu

Apache Spark is written in Scala, a language that runs on the Java Virtual Machine (JVM), so you need Java installed to run Spark. Because Oracle Java has licensing restrictions, I use OpenJDK here. If you prefer Java from Oracle or another vendor, feel free to use it. Here I will be using JDK 8.

After installing the JDK, check that it installed successfully by running java -version.

Python Installation On Ubuntu

You can skip this section if you only want to run Spark with Scala and Java on the Ubuntu server.

Python is needed only if you want to run PySpark examples (Spark with Python) on the Ubuntu server.

Apache Spark Installation on Ubuntu

To install Apache Spark on Ubuntu, open the Apache Spark download site, go to the Download Apache Spark section, and click the link in point 3. This takes you to a page listing mirror URLs. Copy the link from one of the mirror sites.

If you want a different version of Spark and Hadoop, select it from the drop-downs (points 1 and 2); the link in point 3 updates to match your selection.

Use the wget command to download Apache Spark to your Ubuntu server.

Once the download is complete, extract the archive with the tar command (tar is a file archiving tool). After extraction, rename the folder to spark.

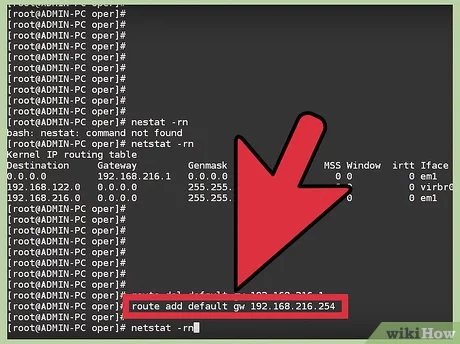

Spark Environment Variables

Add the Apache Spark environment variables (SPARK_HOME and an updated PATH) to your .bashrc or .profile file. Open the file in the vi editor and add the variables.

Now load the environment variables into the current session by running source ~/.bashrc.

If you added them to the .profile file instead, restart your session by closing and reopening it.

Test Spark Installation on Ubuntu

This completes the Apache Spark installation on Ubuntu. Now let's run one of the examples that ships with the Spark binary distribution.

Here I will use the spark-submit command to estimate the value of Pi by running the org.apache.spark.examples.SparkPi example with an argument of 10. That argument is the number of partitions the work is split into, not the number of decimal places. You can find spark-submit in the $SPARK_HOME/bin directory.
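A sketch of that spark-submit call is shown below. The examples jar name embeds the Scala and Spark versions, so the script looks it up with a glob instead of hard-coding it. The /opt/spark fallback and the `EXAMPLES_JAR` variable name are assumptions made for this example.

```shell
SPARK_HOME="${SPARK_HOME:-/opt/spark}"

# Find the bundled examples jar without assuming its exact version suffix.
EXAMPLES_JAR=""
for jar in "$SPARK_HOME"/examples/jars/spark-examples_*.jar; do
    if [ -f "$jar" ]; then
        EXAMPLES_JAR="$jar"
        break
    fi
done

if [ -n "$EXAMPLES_JAR" ]; then
    # The trailing 10 is the number of partitions SparkPi spreads its sampling over
    "$SPARK_HOME/bin/spark-submit" \
        --class org.apache.spark.examples.SparkPi \
        "$EXAMPLES_JAR" 10
else
    echo "Examples jar not found under $SPARK_HOME/examples/jars"
fi
```

The job logs a line starting with "Pi is roughly" among its output; the digits vary between runs because the estimate is based on random sampling.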

Spark Shell

The Apache Spark binary distribution comes with an interactive spark-shell. To start a shell that uses Scala, go to your $SPARK_HOME/bin directory and run spark-shell. This command loads Spark and displays the version of Spark you are using.

Note: spark-shell only runs Spark with Scala. To use PySpark, open the pyspark shell by running $SPARK_HOME/bin/pyspark. Make sure Python is installed before running the pyspark shell.

By default, spark-shell provides the spark (SparkSession) and sc (SparkContext) objects for you to use.

spark-shell also starts a Spark context web UI, which by default is available at http://ip-address:4040.

Spark Web UI

Apache Spark provides a suite of web UIs (Jobs, Stages, Tasks, Storage, Environment, Executors, and SQL) for monitoring the status of your Spark application, the resource consumption of the Spark cluster, and the Spark configuration. In the Spark web UI, you can see how Spark actions and transformations are executed. Open http://ip-address:4040/ to access it, replacing ip-address with your server's IP address.

Spark History Server

Create the $SPARK_HOME/conf/spark-defaults.conf file and add the configuration that enables event logging and tells the history server where the logs are.

Create the Spark event log directory. Spark keeps logs there for all the applications you submit.
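The two steps above can be sketched as one helper that writes the settings and creates the directory. `write_history_conf` is a hypothetical function name, and /tmp/spark-events in the usage line is only an example location. The three property names are standard Spark settings, but the values are assumptions to adapt.

```shell
# Append history-server settings to a Spark defaults file and create the log directory.
# Usage: write_history_conf <conf-file> <absolute-event-log-dir>
write_history_conf() {
    conf_file="$1"
    event_dir="$2"   # must be an absolute path so the file:// URI is valid
    mkdir -p "$event_dir" "$(dirname "$conf_file")"
    cat >> "$conf_file" <<EOF
spark.eventLog.enabled          true
spark.eventLog.dir              file://$event_dir
spark.history.fs.logDirectory   file://$event_dir
EOF
}

# Example (paths are illustrative):
# write_history_conf "$SPARK_HOME/conf/spark-defaults.conf" /tmp/spark-events
```

Applications write their event logs to spark.eventLog.dir and the history server reads from spark.history.fs.logDirectory, so the two are pointed at the same directory here.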

Run $SPARK_HOME/sbin/start-history-server.sh to start the history server.

By default, the history server listens on port 18080.

Run the Pi example again with the spark-submit command, then refresh the history server page, which should show the recent run.

Conclusion

In summary, you have learned the steps involved in installing Apache Spark on an Ubuntu server, how to start the History Server, and how to access the web UI.
